Title says it all. Apparently, some think this is not the case, but it is a straightforward application of Bayes’ theorem. When I first learned of Bayes’ theorem years ago, I thought of this point. Back then I also believed that stereotypes are irrelevant when one has individualized information. Alas, that belief is incorrect. Neven Sesardic, in his excellent and highly recommended book Making Sense of Heritability (2005; download), explained it very clearly, so I will quote his account in full:

A standard reaction to the suggestion that there might be psychological differences between groups is to exclaim “So what?” Whatever these differences, whatever their origin, people should still be treated as individuals, and this is the end of the matter.

There are several problems with this reasoning. First of all, group membership is often a part of an individual’s identity. Therefore, it may not be easy for individuals to accept the fact of a group difference if it does not reflect well on their group. Of course, whichever characteristic we take, there will usually be much overlap, the difference will be only statistical (between group averages), any group will have many individuals that outscore most members of other groups, yet individuals belonging to the lowest-scoring group may find it difficult to live with this fact. It is not likely that the situation will become tolerable even if it is shown that it is not product of social injustice. As Nathan Glazer said: “But how can a group accept an inferior place in society, even if good reasons for it are put forth? It cannot” (Glazer 1994: 16). In addition, to the extent that the difference turns out to be heritable there will be more reason to think that it will not go away so easily (see chapter 5). It will not be readily eliminable through social engineering. It will be modifiable in principle, but not locally modifiable (see section 5.3 for the explanation of these terms). All this could make it even more difficult to accept it.

Next, the statement that people should be treated as individuals is certainly a useful reminder that in many contexts direct knowledge about a particular person eclipses the informativeness of any additional statistical data, and often makes the collection of this kind of data pointless. The statement is fine as far as it goes, but it should not be pushed too far. If it is understood as saying that it is a fallacy to use the information about an individual’s group membership to infer something about that individual, the statement is simply wrong. Exactly the opposite is true: it is a fallacy not to take this information into account.

Suppose we are interested in whether John has characteristic F. Evidence E (directly relevant for the question at hand) indicates that the probability of John having F is p. But suppose we also happen to know that John is a member of group G. Now elementary probability theory tells us that if we want to get the best estimate of the probability that John has F we have to bring the group information to bear on the issue. In calculating the desired probability we have to take into account (a) that John is a member of G, and (b) what proportion of G has F. Neglecting these two pieces of information would mean discarding potentially relevant information. (It would amount to violating what Carnap called “the requirement of total evidence.”) It may well happen that in the light of this additional information we would be forced to revise our estimate of probability from p to p∗. Disregarding group membership is at the core of the so-called “base rate fallacy,” which I will describe using Tversky and Kahneman’s taxicab scenario (Tversky & Kahneman 1980).

In a small city, in which there are 90 green taxis and 10 blue taxis, there was a hit-and-run accident involving a taxi. There is also an eyewitness who told the police that the taxi was blue. The witness’s reliability is 0.8, which means that, when he was tested for his ability to recognize the color of the car under the circumstances similar to those at the accident scene, his statements were correct 80 percent of the time. To reduce verbiage, let me introduce some abbreviations: B = the taxi was blue; G = the taxi was green; WB = witness said that the taxi was blue.

What we know about the whole situation is the following:

(1) p(B) = 0.1 (the prior probability of B, before the witness’s statement is taken into account)

(2) p(G) = 0.9 (the prior probability of G)

(3) p(WB/B) = 0.8 (the reliability of the witness, or the probability of WB, given B)

(4) p(WB/G) = 0.2 (the probability of WB, given G)

Now, given all this information, what is the probability that the taxi was blue in that particular situation? Basically we want to find p(B/WB), the posterior probability of B, i.e., the probability of B after WB is taken into account. People often conclude, wrongly, that this probability is 0.8. They fail to take into consideration that the proportion of blue taxis is pretty low (10 percent), and that the true probability must reflect that fact. A simple rule of elementary probability, Bayes’ theorem, gives the formula to be applied here:

p(B/WB) = p(B) × p(WB/B) / [p(B) × p(WB/B) + p(G) × p(WB/G)].

Therefore, the correct value for p(B/WB) is 0.31, which shows that the usual guess (0.8 or close to it) is wide of the mark.
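
As an aside to the quoted passage, the arithmetic can be checked with a minimal Python sketch. The function name `posterior` and its parameter names are assumptions made for illustration; only the four probabilities come from the text.

```python
def posterior(prior, p_evidence_given_h, p_evidence_given_not_h):
    """Bayes' theorem for a binary hypothesis H and a single piece of evidence.

    prior                   -- p(H), here p(B) = 0.1
    p_evidence_given_h      -- p(E/H), here p(WB/B) = 0.8
    p_evidence_given_not_h  -- p(E/not H), here p(WB/G) = 0.2
    """
    numerator = prior * p_evidence_given_h
    denominator = numerator + (1 - prior) * p_evidence_given_not_h
    return numerator / denominator


# Values (1) to (4) from the taxicab scenario; p(G) is 1 - p(B).
p_blue_given_wb = posterior(prior=0.1,
                            p_evidence_given_h=0.8,
                            p_evidence_given_not_h=0.2)

print(round(p_blue_given_wb, 2))  # 0.31
```

Raising the prior to 0.5 in the same call returns 0.8, which is the answer people tend to give by intuition; the gap between 0.8 and 0.31 comes entirely from the base rate.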

It is easier to understand that 0.31 is the correct answer by looking at Figure 6.1. Imagine that the situation with the accident and the witness repeats itself 100 times. Obviously, we can expect that the taxi involved in the accident will be blue in 10 cases (10 percent), while in the remaining 90 cases it will be green. Now consider these two different kinds of cases separately. In the top section (blue taxis), the witness recognizes the true color of the car 80 percent of the times, which means in 8 out of 10 cases. In the bottom section (green taxis), he again recognizes the true color of the car 80 percent of the times, which here means in 72 out of 90 cases. Now count all those cases where the witness declares that the taxi is blue, and see how often he is right about it. Then simply divide the number of times he is right when he says “blue” with the overall number of times he says “blue,” and this will immediately give you p(B/WB). The witness gives the answer “blue” 8 times in the upper section (when the taxi is indeed blue), and 18 times in the bottom section (when the taxi is actually green). Therefore, our probability is: 8/(8 + 18) = 0.31.

It may all seem puzzling. How can it be that the witness says the taxi is blue, his reliability as a witness is 0.8, and yet the probability that the taxi is blue is only 0.31? Actually there is nothing wrong with the reasoning. It is the lower prior frequency of blue taxis that brings down the probability of the taxi being blue, and that is that. Bayes’ theorem is a mathematical truth. Its application in this kind of situation is beyond dispute. Any remaining doubt will be dispelled by inspecting Figure 6.1 and seeing that if you trust the witness when he says “blue” you will indeed be more often wrong than right. But notice that you have excellent reasons to trust the witness if he says “green” because in that case he will be right 97 percent of the time! It all follows from the difference in prior probabilities for “blue” and “green.” There is a consensus that neglecting prior probabilities (or base rates) is a logical fallacy.
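
As a further aside, the counting argument (100 repetitions, 10 blue and 90 green taxis) can be reproduced directly. This is only a sketch; the variable names are assumptions for illustration, and exact fractions are used so that no rounding enters before the final print.

```python
from fractions import Fraction

# 100 imagined repetitions of the accident, split by the taxi's true color.
blue_taxis, green_taxis = 10, 90
reliability = Fraction(8, 10)  # the witness is right 80 percent of the time

# Top section of the figure: the taxi really is blue.
says_blue_and_blue = blue_taxis * reliability          # 8 correct "blue" reports
says_green_and_blue = blue_taxis * (1 - reliability)   # 2 mistaken "green" reports

# Bottom section: the taxi really is green.
says_green_and_green = green_taxis * reliability       # 72 correct "green" reports
says_blue_and_green = green_taxis * (1 - reliability)  # 18 mistaken "blue" reports

# How often the witness is right, given what he says.
p_blue_given_says_blue = says_blue_and_blue / (says_blue_and_blue + says_blue_and_green)
p_green_given_says_green = says_green_and_green / (says_green_and_green + says_green_and_blue)

print(round(float(p_blue_given_says_blue), 2))    # 0.31
print(round(float(p_green_given_says_green), 2))  # 0.97
```

The same witness, with the same reliability, is right 8 times out of 26 when he says “blue” and 72 times out of 74 when he says “green”; only the base rates differ.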

But if neglecting prior probabilities is a fallacy in the taxicab example, then it cannot stop being a fallacy in other contexts. Oddly enough, many people’s judgment actually changes with context, particularly when it comes to inferences involving social groups. The same move of neglecting base rates that was previously condemned as the violation of elementary probability rules is now praised as reasonable, whereas applying the Bayes’ theorem (previously recommended) is now criticized as a sign of irrationality, prejudice and bigotry.

A good example is racial or ethnic profiling, the practice that is almost universally denounced as ill advised, silly, and serving no useful purpose. This is surprising because the inference underlying this practice has the same logical structure as the taxicab situation. Let me try to show this by representing it in the same format as Figure 6.1. But first I will present an example with some imagined data to prepare the ground for the probability question and for the discussion of group profiling.

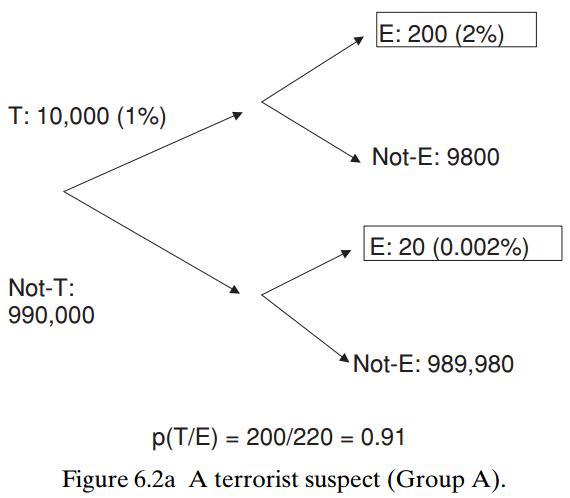

Suppose that there is a suspicious characteristic E such that 2 percent terrorists (T) have E but only 0.002 percent non-terrorists (−T) have E. This already gives us two probabilities: p(E/T) = 0.02; p(E/−T) = 0.00002. How useful is E for recognizing terrorists? How likely is it that someone is T if he has E? What is p(T/E)? Bayes’ theorem tells us that the answer depends on the percentage of terrorists in a population. (Clearly, if everybody is a terrorist, then p(T/E) = 1; if no one is a terrorist, then p(T/E) = 0; if some people are T and some −T, then 1 > p(T/E) > 0.) To activate the group question, suppose that there are two groups, A and B, that have different percentages of terrorists (1 in 100, and 1 in 10,000, respectively). This translates into different probabilities of an arbitrary member of a group being a terrorist. In group A, p(T) = 0.01 but in group B, p(T) = 0.0001. Now for the central question: what will p(T/E) be in A and in B? Figures 6.2a and 6.2b provide the answer.

In group A, the probability of a person with characteristic E being a terrorist is 0.91. In group B, this probability is 0.09 (more than ten times lower). The group membership matters, and it matters a lot.
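
As an aside, the two figures quoted for groups A and B follow from the same formula applied with two different priors. The sketch below uses only the imagined numbers from the passage; the names `posterior`, `base_rates`, and `results` are assumptions for illustration.

```python
def posterior(prior, p_e_given_t, p_e_given_not_t):
    """p(T/E) by Bayes' theorem, for a given base rate p(T)."""
    numerator = prior * p_e_given_t
    return numerator / (numerator + (1 - prior) * p_e_given_not_t)


P_E_GIVEN_T = 0.02         # 2 percent of terrorists have E
P_E_GIVEN_NOT_T = 0.00002  # 0.002 percent of non-terrorists have E

# Base rates p(T) in the two imagined groups.
base_rates = {"A": 0.01, "B": 0.0001}

results = {
    group: posterior(p_t, P_E_GIVEN_T, P_E_GIVEN_NOT_T)
    for group, p_t in base_rates.items()
}

for group, p in results.items():
    print(f"group {group}: p(T/E) = {p:.2f}")
# group A: p(T/E) = 0.91
# group B: p(T/E) = 0.09
```

The evidence E is identical in both calls; the hundredfold difference in base rates alone moves the posterior from about 0.91 to about 0.09.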

Test your intuitions with a thought experiment: in an airport, you see a person belonging to group A and another person from group B. Both have suspicious trait E but they go in opposite directions. Whom will you follow and perhaps report to the police? Will you (a) go by probabilities and focus on A (committing the sin of racial or ethnic profiling), or (b) follow political correctness and flip a coin (and feel good about it)? It would be wrong to protest here and refuse to focus on A by pointing out that most As are not terrorists. This is true but irrelevant. Most As that have E are terrorists (91 percent of them, to be precise), and this is what counts. Compare that with the other group, where out of all Bs that have E, less than 10 percent are terrorists.

To recapitulate, since the two situations (the taxicab example and the social groups example) are similar in all relevant aspects, consistency requires the same answer. But the resolute answer is already given in the first situation. All competent people speak with one voice here, and agree that in this kind of situation the witness’s statement is only part of the relevant evidence. The proportion of blue cars must also be taken into account to get the correct probability that the taxi involved in the accident was blue. Therefore, there is no choice but to draw the corresponding conclusion in the second case. E is only part of the relevant evidence. The proportion of terrorists in group A (or B) must also be taken into account to get the correct probability that an individual from group A (or B) is a terrorist.

The “must” here is a conditional “must,” not a categorical imperative. That is, you must take into account prior probabilities if you want to know the true posterior probability. But sometimes there may be other considerations, besides the aim to know the true probability. For instance, it may be thought unfair or morally unacceptable to treat members of group A differently from members of group B. After all, As belong to their ethnic group without any decision on their part, and it could be argued that it is unjust to treat every A as more suspect just because a very small proportion of terrorists among As happens to be higher than an even lower proportion of terrorists among Bs. Why should some people be inconvenienced and treated worse than others only because they share a group characteristic, which they did not choose, which they cannot change, and which is in itself morally irrelevant?

I recognize the force of this question. It pulls in the opposite direction from Bayes’ theorem, urging us not to take into account prior probabilities. The question which of the two reasons (the Bayesian or the moral one) should prevail is very complex, and there is no doubt that the answer varies widely, depending on the specific circumstances and also on the answerer. I will not enter that debate at all because it would take us too far away from our subject.

The point to remember is that when many people say that “an individual can’t be judged by his group mean” (Gould 1977: 247), that “as individuals we are all unique and population statistics do not apply” (Venter 2000), that “a person should not be judged as a member of a group but as an individual” (Herrnstein & Murray 1994: 550), these statements sound nice and are likely to be well received but they conflict with the hard fact that a group membership sometimes does matter. If scholars wear their scientific hats when denying or disregarding this fact, I am afraid that rather than convincing the public they will more probably damage the credibility of science.

It is of course an empirical question how often and how much the group information is relevant for judgments about individuals in particular situations, but before we address this complicated issue in specific cases, we should first get rid of the wrong but popular idea that taking group membership into consideration (when thinking about individuals) is in itself irrational or morally condemnable, or both. On the contrary, in certain decisions about individuals, people “would have to be either saints or idiots not to be influenced by the collective statistics” (Genovese 1995: 333).

[…]

Lee Jussim (politically incorrect social psychologist; blog) in his interesting book Social perception and social reality (2012; download), notes the same fact. In fact, he spends an entire chapter on the question of how people integrate stereotypes with individualized information and whether this increases accuracy. He begins:

Stereotypes and Person Perception: How Should People Judge Individuals?

Should?

“Should” might mean many things. It might mean, “What would be the most moral thing to do?” Or, “What would be the legal thing to do, or the most socially acceptable thing to do, or the least offensive thing to do?” I do not use it here, however, to mean any of these things. Instead, I use the term “should” here to mean “what would lead people to be most accurate?” It is possible that being as accurate as possible would be considered by some people to be immoral or even illegal (see Chapters 10 and 15). Indeed, a wonderful turn of phrase, “forbidden base-rates,” was coined (Tetlock, 2002) to capture the very idea that, sometimes, many people would be outraged by the use of general information about groups to reach judgments that would be as accurate as possible (a “base-rate” is the overall prevalence of some characteristic in a group, usually presented as a percentage; e.g., “0.7% of Americans are in prison” is a base-rate reflecting Americans’ likelihood of being in prison). The focus in this chapter is exclusively on accuracy and not on morality or legality.

Philip Tetlock (famous for his forecasting tournaments), in the article cited in the quote above, writes:

The SVPM [The sacred value-protection model] maintains that categorical proscriptions on cognition can also be triggered by blocking the implementation of relational schemata in sensitive domains. For example, forbidden base rates can be defined as any statistical generalization that devout Bayesians would not hesitate to insert into their likelihood computations but that deeply offends a moral community. In late 20th-century America, egalitarian movements struggled to purge racial discrimination and its residual effects from society (Sniderman & Tetlock, 1986). This goal was justified in communal-sharing terms (“we all belong to the same national family”) and in equality-matching terms (“let’s rectify an inequitable relationship”). Either way, individual or corporate actors who use statistical generalizations (about crime, academic achievement, etc.) to justify disadvantaging already disadvantaged populations are less likely to be lauded as savvy intuitive statisticians than they are to be condemned for their moral insensitivity.

So this is not some exotic idea; it is recognized by several experts.

I don’t have any particular opinion regarding the morality of using involuntary group memberships in one’s assessments, but in terms of epistemic rationality (making correct judgments), the case is clear: one must take into account group memberships when making judgments about individuals. Of course, some individualized information may have a much stronger evidential value than the mere fact of group membership.

By the way, this fact means that studies that try to show bias among raters or employers, e.g. by sending fake resumes from members of two racial groups with otherwise equal features (such as educational attainment), do not demonstrate bias. This holds even if there were no such thing as affirmative action. It is even true in some cases after selection on a fair criterion.