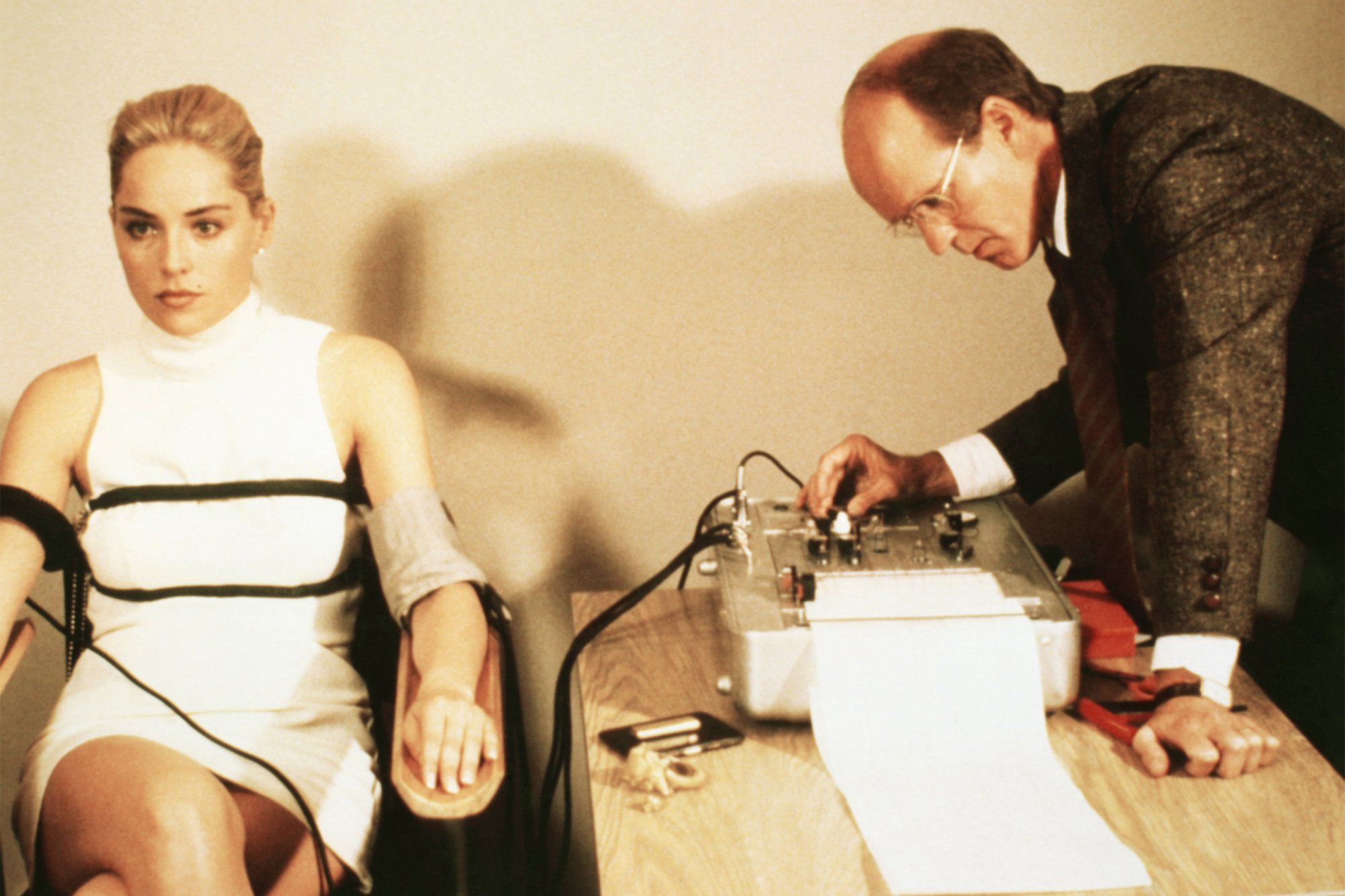

What if lying were impossible? You’ve surely seen lie detectors in movies, and heard about them being used in courtrooms despite questionable evidence of their validity.

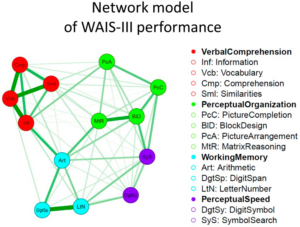

But what if we could make them work? I think we can. There are two technologies that can probably tell, with near certainty, whether someone is lying. Functional MRI is not exactly new technology; neuroscientists have been abusing it with bad statistics for several decades. The problem, however, was that they were working with tiny samples. These days we have relatively massive samples, in the tens of thousands. If we put a lot of people into MRI scanners while asking them questions, could we train an AI/ML model to tell whether someone is lying? I think the answer is “yes, certainly”. The idea is not that new: here are reviews from 2009, 2014, and 2021.

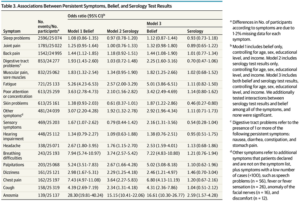

The 2021 review finds 52 articles that tried using fMRI. The typical setup is that researchers hand subjects some playing cards and instruct them about which cards they should claim to have in their hand. Sometimes there is a monetary reward to increase ecological validity (realism, as opposed to artificial lab effects). The reviews, however, are rather atrocious. What we would like to see is some measure of accuracy being meta-analyzed, say, AUC (a metric where 1.00 = perfect, 0.50 = no signal). For lie detectors to be useful in real-life court settings, they should probably have an AUC of at least 0.90. For comparison, this team tried to predict who would die after admission to a medical ward, where 5% of patients died. Using patients’ medications and physical symptoms as inputs, their model achieved AUC = 0.92. This sounds impressive, but in reality, when the model predicted death at its optimal threshold, there was only a 22% chance that the patient actually died. That’s too low to be useful in courts, but the critical thing to understand is that a model’s ability to predict a death or a lie depends on how common these are (the base rate). Since the police usually only charge people they think are guilty, the proportion of people in court who are actually guilty is very high, say 90%. In this scenario, a hypothetical lie detector with AUC = 0.90 would probably do quite well at predicting who is lying, but relatively poorly at predicting who isn’t.
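To make the base-rate point concrete, here is a minimal Python sketch that applies Bayes’ rule to a binary classifier. The operating point (85% sensitivity, 85% specificity) is a hypothetical assumption, not a figure from the medical-ward study or any lie detection paper; it is roughly the kind of balanced threshold a model with an AUC in the low 0.90s might reach, depending on its score distributions. The function name `predictive_values` is illustrative.

```python
def predictive_values(sensitivity: float, specificity: float, prevalence: float) -> tuple[float, float]:
    """Return (PPV, NPV) for a binary test, using Bayes' rule."""
    tp = sensitivity * prevalence              # true positives (share of population)
    fn = (1 - sensitivity) * prevalence        # positives the test misses
    tn = specificity * (1 - prevalence)        # true negatives
    fp = (1 - specificity) * (1 - prevalence)  # negatives wrongly flagged
    ppv = tp / (tp + fp)  # P(actually positive | test says positive)
    npv = tn / (tn + fn)  # P(actually negative | test says negative)
    return ppv, npv


# Hypothetical operating point: 85% sensitivity and 85% specificity.
for prevalence in (0.05, 0.90):
    ppv, npv = predictive_values(0.85, 0.85, prevalence)
    print(f"prevalence={prevalence:.0%}: PPV={ppv:.1%}, NPV={npv:.1%}")
# prevalence=5%: PPV=23.0%, NPV=99.1%
# prevalence=90%: PPV=98.1%, NPV=38.6%
```

With identical sensitivity and specificity, moving the prevalence from 5% to 90% flips which kind of prediction is trustworthy: a positive call is right only about 23% of the time in the first case, while a negative call is right only about 39% of the time in the second.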

So can we get results even above AUC = 0.90? I think we can. Here’s a 2022 conference paper claiming AUC = .98, with a resulting accuracy of 94%. That is an error rate of 6%, close to the 5% threshold that social scientists usually accept. And here’s the best part: the study didn’t even use fMRI; it used only videos of faces! So imagine a future study with 10x the sample size that used both fMRI and video data. I think it’s practically guaranteed that the AUC will reach ~1.00 within a decade.
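AUC and accuracy are easy to conflate, so here is a small sketch of how they differ. AUC can be computed as the probability that a randomly chosen liar receives a higher score than a randomly chosen truth-teller (the Mann-Whitney formulation), which does not depend on any threshold; accuracy only exists once you pick a cutoff. The scores below are made-up toy numbers, and the helper names `auc` and `accuracy` are hypothetical.

```python
from itertools import product


def auc(scores_pos, scores_neg):
    """Probability that a random positive outscores a random negative (ties count half)."""
    wins = 0.0
    for p, n in product(scores_pos, scores_neg):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


def accuracy(scores_pos, scores_neg, threshold):
    """Share of subjects classified correctly when score >= threshold means 'lying'."""
    correct = sum(s >= threshold for s in scores_pos) + sum(s < threshold for s in scores_neg)
    return correct / (len(scores_pos) + len(scores_neg))


# Toy model scores (higher = more likely lying); not data from any study.
lying = [0.9, 0.8, 0.75, 0.6, 0.4]
truthful = [0.7, 0.3, 0.2, 0.1, 0.05]
print(auc(lying, truthful))            # 0.92
print(accuracy(lying, truthful, 0.5))  # 0.8
```

Here the same scores give AUC = 0.92 but only 80% accuracy at a 0.5 cutoff, because at that cutoff one liar (0.4) and one truth-teller (0.7) land on the wrong side. Moving the threshold changes the accuracy without changing the AUC.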

What to do in a post-lying world?

Suppose my speculations are correct. There will be an obvious ‘civil rights’ movement to outlaw these technologies. The usual suspects will claim that lie detectors are racist, that fMRI is racist, and so on. Since various lines of evidence indicate that the usual racial hierarchy is also found in rates of lying, Blacks will be found the least truthful whenever someone does a study, and an uproar will predictably follow. But suppose that somehow the technology does not get banned and that courts accept it. What will this mean? I think quite a lot. Most criminal trials involve defendants who are, in most cases, guilty, and the trial is merely a formality that tests whether the defense can strike a deal (if that is allowed in the country), whether the jury can be irrationally swayed (maybe jurors are also criminals or share demographics with the defendant), or whether the police made some kind of procedural mistake that the defense can exploit, as in the O. J. Simpson case. However, if the court can simply require the defendant to take a lie detector test, this legal show can be skipped. This would obviously save a lot of money and time. If the police were also allowed to use this technology in their regular work, they would use it on everybody they bring in for questioning. Since, by assumption, there is no way to fool the machine, police work would become a lot easier. Officers would no longer have to prove things with forensic evidence; they would just have to find the right suspect in the victim’s social network. For ordinary law-abiding citizens, then, this would mean far fewer criminals on the streets. What’s not to like?

We can also approach this from another perspective. Suppose you are a dissident under some regime. Maybe your country has been taken over by your enemies, and they have now outlawed your political ideas. All the regime’s police have to do is bring every person in for questioning once in a while and ask them about a variety of crimes: “Do you oppose the current regime?” “No.” DING DING, and into jail you go. This might seem insane and unthinkable now, but consider China’s approach to social credit as of 2019:

“Once discredited, limited everywhere” is the message China’s government announced in a report, released in early March, to the 23 million citizens it had banned from purchasing plane or train tickets the previous year. These citizens, the government declared, had proven themselves to be “untrustworthy”, perhaps by spreading false information, forgetting to pay a bill on time, or even failing to walk a dog on a leash. As a result, their freedom of movement has been revoked.

Naturally, the Chinese government would be an early adopter of this lie detection technology. It would be mandatory at first for recruitment into high-ranking roles, then later broadened to university admissions, occupations, and so on. The questions would be basic things like “Do you have sympathy for the Tibetan resistance?”, “Are you a Western spy?”, and “Would you like to see a regime change in China?”. You can’t lie, so any nonconformist would be easily barred from these positions. A new untouchable class.

Perfect lie detection, then, is a double-edged sword. On the one hand, limited use of it would probably be a boon to regular people under a tolerant system. Violent criminals would be removed very quickly, people wouldn’t even try to commit fraud, politicians could be subjected to lie tests during campaigns to ensure they were honest, and major court cases between corporations could be decided quickly by questioning their leaders. What’s not to like? But an abusive government with this system would be truly totalitarian, gaining the ability to police even thoughts you never expressed aloud. There would be no way out of such a system, and it would be impossible to build a resistance movement under such conditions. I think it is likely that a future AI dictatorship would immediately implement such a system. Why not?

With this in mind, you might ask: is the technology worth it?