I don’t have time to provide extensive citations for this post, so some things are cited from memory. You should be able to locate the relevant literature, but otherwise just ask.

- Haier, R. J. (2016). The Neuroscience of Intelligence (1 edition). New York, NY: Cambridge University Press.

Because I’m writing a neuroscience-related paper or two, it seemed like a good idea to read the recent book by Richard Haier. Haier is a rare combination of neuroscientist and intelligence researcher, and he is also a past president of the International Society for Intelligence Research (ISIR).

Haier is refreshingly honest about what the purpose of intelligence research is:

The challenge of neuroscience is to identify the brain processes necessary for intelligence and discover how they develop. Why is this important? The ultimate purpose of all intelligence research is to enhance intelligence.

While many talk about how important it is to understand something, understanding is arguably just a preliminary goal on the road to what we really want: to control it. In general, one can read the history of science as man’s attempt to control nature, and this requires having some rudimentary understanding of it. The understanding does not need to be causally deep, as long as one can make above-chance predictions. Newtonian physics is not the right model of how the world works, but it is good enough for getting to the Moon and building skyscrapers. Animal breeders historically had no good idea about how genetics worked, but they knew that when you breed stuff, you tend to get offspring similar to the stuff you bred, no matter whether this is corn or dogs.

Criticism of intelligence boosting studies

Haier criticizes a number of studies that attempted to raise intelligence. However, his criticisms are not very strong. For example, in response to the n-back training study, he spends about a page criticizing how one validation test was administered:

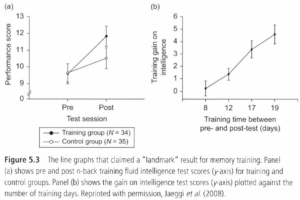

The first devastating critique came quickly (Moody, 2009). Dr. Moody pointed out several serious flaws in the PNAS cover article that rendered the results uninterpretable. The most important was that the BOMAT used to assess fluid reasoning was administered in a flawed manner. The items are arranged from easy ones to very difficult ones. Normally, the test-taker is given 45 minutes to complete as many of the 29 problems as possible. This important fact was omitted from the PNAS report. The PNAS study allowed only 10 minutes to complete the test, so any improvement was limited to relatively easy items because the time limit precluded getting to the harder items that are most predictive of Gf, especially in a sample of college students with restricted range. This non-standard administration of the test transformed the BOMAT from a test of fluid intelligence to a test of easy visual analogies with, at best, an unknown relationship to fluid intelligence. Interestingly, the one training group that was tested on the RAPM showed no improvement. A crucial difference between the two tests is that the BOMAT requires the test-taker to keep 14 visual figures in working memory to solve each problem, whereas the RAPM requires holding only eight in working memory (one element in each matrix is blank until the problem is solved). Thus, performance on the BOMAT is more heavily dependent on working memory. This is the exact nature of the n-back task, especially as the version used for training included the spatial position of matrix elements quite similar to the format used in the BOMAT problems (see Textbox 5.1). 
As noted by Moody, “Rather than being ‘entirely different’ from the test items on the BOMAT, this [n-back] task seems well-designed to facilitate performance on that test.” When this flaw is considered along with the small samples and issues surrounding small change scores of single tests, it is hard to understand the peer review and editorial processes that led to a featured publication in PNAS which claimed an extraordinary finding that was contrary to the weight of evidence from hundreds of previous reports.

But he puts little emphasis on the fact that the original study had n=69 and a p-value of roughly .01 (judging from the confidence intervals).

Given publication bias and methodological degrees of freedom, this is very poor evidence indeed, and dismissing it requires no elaborate discussion of how the tests were scored.
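To make the point concrete, here is a minimal simulation sketch. Its assumptions are hypothetical and it is not a model of the actual study: two groups of 35 (roughly n=69), a true effect of exactly zero, and a literature that only publishes results that are significant in the hoped-for direction. Every published effect size then exceeds 1.96 standard errors (about d = 0.47) by construction, even though there is nothing to find.

```python
import math
import random

def mean_var(xs):
    """Sample mean and (n - 1)-denominator variance."""
    m = sum(xs) / len(xs)
    return m, sum((x - m) ** 2 for x in xs) / (len(xs) - 1)

def published_effects(n_per_group=35, true_d=0.0, n_studies=5000, seed=1):
    """Run many hypothetical two-group studies and keep only the 'publishable' ones.

    Assumptions: normal outcomes with SD 1, a z-approximation to the test,
    and publication only when the result is significant (p < .05, two-sided)
    in the hoped-for positive direction.
    """
    rng = random.Random(seed)
    se = math.sqrt(2 / n_per_group)  # large-sample SE of Cohen's d near d = 0
    kept = []
    for _ in range(n_studies):
        m1, v1 = mean_var([rng.gauss(true_d, 1) for _ in range(n_per_group)])
        m0, v0 = mean_var([rng.gauss(0, 1) for _ in range(n_per_group)])
        d = (m1 - m0) / math.sqrt((v1 + v0) / 2)  # Cohen's d with pooled SD
        if d / se > 1.96:  # significant and in the desired direction
            kept.append(d)
    return kept

pub = published_effects()
print(f"published: {len(pub)} of 5000 studies, mean d = {sum(pub) / len(pub):.2f}")
```

Only a few percent of the null studies get "published", and all of them report a sizable effect.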

Haier does not cover the general history of attempts to increase intelligence, and this is a mistake, because those who don’t read history make the same mistakes over and over again. History supplies ample evidence that can inform our prior. I can’t think of any particular reason not to cover this history briefly, given that Spitz wrote a pretty good book on the topic.

WM/brain training is just the latest fad in a long series of cheap tricks to improve intelligence or other test performance. The pattern is:

1. Small study, usually with marginal effects by NHST standards (p close to .05).

2. Widespread media and political attention.

3. Follow-up/replication research takes 1-3 decades and is always negative.

4. The results from (3) are usually ignored, and the fad continues until it slowly dies because researchers get bored of it and switch to the next fad. Sometimes this dying can take a very long time.

[See also: Hsu’s more general pattern.]

Some immediate examples I can think of:

- Early interventions for low-intelligence children: endless variations. Proponents always cite early, tiny studies (the Perry Preschool Project (1962), the Abecedarian Project (1972), the Milwaukee Project (1960s)) and neglect large negative replications (e.g. the Head Start Impact Study).

- Interventions targeted at autistic, otherwise damaged, and regular dull children. History is full of charlatans peddling miracle treatments to sad parents (see Spitz’s review).

- Pygmalion/self-fulfilling prophecy effects. Usually people only mention a single study from 1968. Seeing the pattern here?

- Stereotype threat. Actually, this is not even a boosting effect, but it is widely understood that way. This one is still in stage.

- Mozart effect.

- Hard-to-read fonts.

I think readers would better appreciate the current studies if they knew the historical record: a 0% success rate despite >80 years of trying with massive funding and political support. Haier does kind of get to this point, but only after going over the papers and not as explicitly as I do above:

Speaking of independent replication, none of the three studies discussed so far (the Mozart Effect, n-back training, and computer training) included any replication attempt in the original reports. There are other interesting commonalities among these studies. Each claimed a finding that overturned long-standing findings from many previous studies. Each study was based on small samples. Each study measured putative cognitive gains with single test scores rather than extracting a latent factor like g from multiple measures. Each study’s primary author was a young investigator and the more senior authors had few previous publications that depended on psychometric assessment of intelligence. In retrospect, is it surprising that numerous subsequent studies by independent, experienced investigators failed to replicate the original claims? There is a certain eagerness about showing that intelligence is malleable and can be increased with relatively simple interventions. This eagerness requires researchers to be extra cautious. Peer-reviewed publication of extraordinary claims requires extraordinary evidence, which is not apparent in Figures 5.1, 5.3, and 5.4. In my view, basic requirements for publication of “landmark” findings would start with replication data included along with original findings. This would save many years of effort and expense trying to replicate provocative claims based on fundamentally flawed studies and weak results. It is a modest proposal, but probably unrealistic given academic pressures to publish and obtain grants.

Meta-analyses are a good tool for summarizing the entire body of evidence, but they feed on published studies, and when published studies produce biased effect size estimates due to researcher degrees of freedom and publication bias (plus small samples), the meta-analyses will tend to be biased too. One can adjust for publication bias to some degree, but this works best when there’s a large body of research. One cannot adjust for researcher degrees of freedom. For a matter as important as increasing intelligence, there is only one truly convincing kind of evidence: large-scale, pre-registered trials with public data access. Preferably they should be announced in advance (see Registered Reports). This way it’s hard to just ‘forget’ to publish the results (common), swap outcomes (also common), or use creative statistics to get the precious p < .05 (probably common too).
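The same selection problem carries over to meta-analysis. The sketch below uses hypothetical parameters throughout (a true effect of d = 0.1, 2,000 studies with 10 to 100 people per group, and a file drawer that lets only 10% of non-significant results through) and pools studies with ordinary inverse-variance (fixed-effect) weights. Pooling everything recovers roughly the true value; pooling only the published studies gives a clearly inflated estimate.

```python
import math
import random

def fixed_effect_pool(studies):
    """Inverse-variance weighted (fixed-effect) mean of (d, se) pairs."""
    weights = [1 / se ** 2 for _, se in studies]
    return sum(w * d for w, (d, _) in zip(weights, studies)) / sum(weights)

def simulate_literature(true_d=0.1, k=2000, p_publish_nonsig=0.1, seed=2):
    """Hypothetical literature of k studies of varying size.

    Each observed d is drawn from its approximate sampling distribution.
    Significant positive results are always published; everything else
    only with probability p_publish_nonsig (the file drawer).
    """
    rng = random.Random(seed)
    everything, published = [], []
    for _ in range(k):
        n_per_group = rng.randint(10, 100)
        se = math.sqrt(2 / n_per_group)  # approximate SE of Cohen's d
        d = rng.gauss(true_d, se)
        everything.append((d, se))
        if d / se > 1.96 or rng.random() < p_publish_nonsig:
            published.append((d, se))
    return everything, published

everything, published = simulate_literature()
print(f"all studies:    {fixed_effect_pool(everything):.3f}")
print(f"published only: {fixed_effect_pool(published):.3f}  (true d = 0.1)")
```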

Statistics of (neuro)science

In general, I think Haier should have started the book with a brief introduction to the replication crisis and the relevant statistics: why do we have it, and how do we fix it? This would of course also mean that he would have to be a lot more cautious in describing many of the earlier studies presented in the book. Most of these studies were done on tiny samples, and we know that they suffer from publication bias because we have a huge meta-analysis of brain size x intelligence showing the decline effect. There is no reason to expect the other reported associations to hold up any better, or at all.

Haier spends too much time noting apparent sex differences in small studies. These claims are virtually always based on the NHST subgroup fallacy: the idea that if some association is p < alpha in one group and p > alpha in another, then we can conclude there is an all-or-nothing interaction, i.e. an effect in one population and none in the other. The correct approach is to test the difference between the groups directly.
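A small numerical illustration with made-up numbers: suppose a brain measure correlates r = .40 with IQ in 40 men and r = .20 in 40 women. Using the standard Fisher z approximation, the first correlation is "significant" and the second is not, yet the test of the difference between them is nowhere near significance.

```python
import math

def p_two_sided(z):
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))

def corr_p(r, n):
    """Approximate p-value for H0: rho = 0, via Fisher's z-transform."""
    return p_two_sided(math.atanh(r) * math.sqrt(n - 3))

def corr_diff_p(r1, n1, r2, n2):
    """Approximate p-value for H0: rho1 = rho2 (independent samples)."""
    z = (math.atanh(r1) - math.atanh(r2)) / math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))
    return p_two_sided(z)

# Hypothetical numbers: brain measure x IQ in 40 men and 40 women.
p_men = corr_p(0.40, 40)                  # about .01 -> "significant in men"
p_women = corr_p(0.20, 40)                # about .22 -> "no effect in women"
p_diff = corr_diff_p(0.40, 40, 0.20, 40)  # about .34 -> no evidence of a sex difference
print(f"men p = {p_men:.3f}, women p = {p_women:.3f}, difference p = {p_diff:.3f}")
```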

To be fair, Haier does lay out 3 laws:

1. No story about the brain is simple.

2. No one study is definitive.

3. It takes many years to sort out conflicting and inconsistent findings and establish a compelling weight of evidence.

which kind of make the same point. Tiny samples make every study inconclusive (2), and we have to wait for the eventual replications and meta-analyses (3). Tiny samples combined with inappropriate NHST statistics give rise to pseudo-interactions in the literature, which make (1) seem truer than it is (cf. the situational specificity hypothesis in I/O psychology). This is not to say that the brain is not complicated, but there is no need to add spurious interactions to the mix. This is not hypothetical: many papers reported such sex ‘interactions’ for brain size x intelligence, but the large meta-analysis by Pietschnig et al. found no such moderator effect.

Beware meta-regression too. It is just regular regression and has the same problems: if you have few studies, say k=15, weight them by their precision (inverse squared SE), and try out many predictors (sex, age, subtest, country, year, …), then it’s easy to find false positive moderators. An early meta-analysis did in fact identify sex as a moderator (which Haier cites approvingly), but this did not hold up in the later, larger meta-analysis.
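A quick simulation sketch of the meta-regression problem. All settings are hypothetical: 15 studies with known sampling variances, a single true effect with no moderators at all, and 10 pure-noise study-level predictors tested one at a time with a fixed-effect (inverse-variance weighted) meta-regression. The share of meta-analyses that turn up at least one "significant" moderator comes out far above the nominal 5%.

```python
import math
import random

def wls_slope_z(x, y, w):
    """Fixed-effect meta-regression with one moderator: weighted slope / SE."""
    sw = sum(w)
    xw = sum(wi * xi for wi, xi in zip(w, x)) / sw
    yw = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - xw) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - xw) * (yi - yw) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    return slope / math.sqrt(1 / sxx)  # slope SE is sqrt(1 / sxx) with known study variances

def false_moderator_rate(k=15, n_moderators=10, reps=2000, true_effect=0.2, seed=3):
    """Share of simulated meta-analyses in which at least one pure-noise
    moderator comes out 'significant' (|z| > 1.96)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        ses = [1 / math.sqrt(rng.randint(20, 200) - 3) for _ in range(k)]  # Fisher-z SEs
        w = [1 / se ** 2 for se in ses]
        y = [rng.gauss(true_effect, se) for se in ses]  # no real moderators at all
        for _ in range(n_moderators):
            x = [rng.gauss(0, 1) for _ in range(k)]  # noise standing in for sex ratio, age, year...
            if abs(wls_slope_z(x, y, w)) > 1.96:
                hits += 1
                break
    return hits / reps

print(f"meta-analyses with a spurious moderator: {false_moderator_rate():.2f}")
```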

Going forward

I think the best approach to working out the neurobiology of intelligence is:

- Compile large, public-use datasets. We are getting there with some recent additions, but they are not public use: 1) PING dataset n=1500, 2) Human Connectome n=1200, 3) Brain Genomics Superstruct Project n=1570. Funding for neuroscience, and for almost any science, should be contingent on contributing the data to a large, public repository. Ideally, this should be a single, standardized dataset. Linguistics has a good precedent to follow: “The World Atlas of Language Structures (WALS) is a large database of structural (phonological, grammatical, lexical) properties of languages gathered from descriptive materials (such as reference grammars) by a team of 55 authors.”

- Include many diverse measures of neurobiology so we can do multivariate studies. Right now, the literature is extremely fragmented and no one knows the degree to which specific reported associations overlap or are merely proxies for each other. One can do meta-analytic modeling based on summary statistics, but this approach is very limited.

- Clean up the existing literature by first including the measures already reported in it. While many of these may be false positives, a reported p < alpha finding is better evidence than nothing. Similarly, p > alpha findings in the literature may be false negatives. Only by testing them in large samples can we know. It would be bad to miss an important predictor just because some early study with 20 persons failed to find an association.
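To put a number on the last point, here is a sketch of the approximate power of a correlational study, using the Fisher z normal approximation and a hypothetical true correlation of .20 (an assumption chosen for illustration). With 20 people, power is only about 13%, so a null result says very little; with samples in the thousands, power is essentially 100%.

```python
import math

def normal_cdf(x):
    """Standard normal CDF via the complementary error function."""
    return 0.5 * math.erfc(-x / math.sqrt(2))

def power_corr(rho, n, z_crit=1.96):
    """Approximate two-sided power to detect a true correlation rho with n people (Fisher z)."""
    shift = math.atanh(rho) * math.sqrt(n - 3)
    return normal_cdf(shift - z_crit) + normal_cdf(-shift - z_crit)

for n in (20, 100, 1500):
    print(n, round(power_corr(0.20, n), 2))
```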

Actually boosting intelligence

After discussing nootropics (cognitive enhancing drugs, in his terms), Haier writes:

If I had to bet, the most likely path toward enhancing intelligence would be a genetic one. In Chapter 2 we discussed Doogie mice, a strain bred to learn maze problem-solving faster than other mice. In Chapter 4 we enumerated a few specific genes that might qualify as relevant for intelligence and we reviewed some possible ways those genes might influence the brain. Even if hundreds of intelligence-relevant genes are discovered, each with a small influence, the best case for enhancement would be if many of the genes worked on the same neurobiological system. In other words, many genes may exert their influence through a final common neurobiological pathway. That pathway would be the target for enhancement efforts (see, for example, the Zhao et al. paper summarized in Section 2.6). Similar approaches are taken in genetic research on disorders like autism and schizophrenia and many other complex behavioral traits that are polygenetic. Finding specific genes, as difficult as it is, is only a first step. Learning how those genes function in complex neurobiological systems is even more challenging. But once there is some understanding at the functional system level, then ways to intervene can be tested. This is the step where epigenetic influences can best be explicated. If you think the hunt for intelligence genes is slow and complex, the hunt for the functional expression of those genes is a nightmare. Nonetheless, we are getting better at investigations at the molecular functional level and I am optimistic that, sooner or later, this kind of research applied to intelligence will pay off with actionable enhancement possibilities. The nightmares of neuroscientists are the driving forces of progress.

None of the findings reported so far are advanced enough to consider actual genetic engineering to produce highly intelligent children. There is a recent noteworthy development in genetic engineering technology, however, with implications for enhancement possibilities. A new method for editing the human genome is called CRISPR/Cas9 (Clustered Regularly Interspaced Short Palindromic Repeats/Cas genes). I don’t understand the name either, but this method uses bacteria to edit the genome of living cells by making changes to targeted genes (Sander & Joung, 2014). It is noteworthy because many researchers can apply this method routinely so that editing the entire human genome is possible as a mainstream activity. Once genes for intelligence and how they function are identified, this kind of technology could provide the means for enhancement on a large scale. Perhaps that is why the name of the method was chosen to be incomprehensible to most of us. Keep this one on your radar too.

I think there may be gains to be had from nootropics, but to find them, we have to get serious: large-scale neuroscientific studies of intelligence must look for correlates that we know or think we can modify, such as the prevalence of certain molecules. Then large-scale, pre-registered RCTs must be done on the plausible candidates. In general, however, it seems more plausible that we can find ways to improve non-intelligence traits that nevertheless help. For instance, stimulant drugs generally enhance self-control in low doses. This shows up in higher educational attainment and fewer criminal convictions. These are very much real gains to be had.

Haier discusses various ways of directly stimulating the brain. In my speculative model of neuro-g, this would constitute an enhancement of the activity or connectivity factors. It seems possible that one can make some small gains this way, but I think the gains are probably larger for non-intelligence traits such as sustained attention and tiredness. If we could find a way to sleep more effectively, this would have insanely high social payoffs, so I recommend research into this.

(From: Nerve conduction velocity and cognitive ability: a large sample study)

For larger gains, genetics is definitely the most certain route (the only other alternative is neurosurgery with implants). Since we know genetic variation is very important for variation in intelligence (high heritability), all we have to do is tinker with that variation. Haier makes the usual mistake of focusing on direct editing approaches. Direct editing is hard because one must know the causal variants and be able to change them (not always easy!). So far we know of very few confirmed causal variants, and those we do know are mostly bad, e.g. aneuploidies such as Down syndrome (trisomy 21). However, siblings show that it is quite possible to have the same parents and different genomes, which means that all we have to do is filter among possible children: embryo selection. Embryo selection does not require us to know the causal variants; it only requires predictive validity, something that is much easier to attain. See Gwern’s excellent writings on the topic for more info.
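As a back-of-the-envelope illustration of why predictive validity is enough, here is a sketch of the expected gain from picking the top-scoring embryo out of n. It rests on simplifying assumptions that are ours, not Haier's or Gwern's: purely additive effects, sibling embryos' predictor scores varying with half the population variance, and a predictor that explains r2 of IQ variance and keeps that validity within families. The expected gain is then E[max of n standard normals] × 15 × sqrt(r2 / 2), with the expected maximum estimated by Monte Carlo.

```python
import math
import random

def expected_max_normal(n, reps=20000, seed=4):
    """Monte Carlo estimate of E[max of n independent standard normals]."""
    rng = random.Random(seed)
    return sum(max(rng.gauss(0, 1) for _ in range(n)) for _ in range(reps)) / reps

def selection_gain_iq(n_embryos, r2, sd_iq=15.0):
    """Expected IQ gain from implanting the top-scoring of n embryos.

    Assumptions (hypothetical): additive model, within-family variance of the
    predictor is half the population variance, and the predictor explains r2
    of IQ variance with undiminished validity within families.
    """
    within_family_sd = sd_iq * math.sqrt(r2 / 2)
    return expected_max_normal(n_embryos) * within_family_sd

for n in (1, 5, 10):
    print(n, round(selection_gain_iq(n, r2=0.10), 1))
```

With a predictor explaining 10% of the variance and 5 embryos, this gives roughly 4 IQ points; the gain grows only slowly as more embryos are added.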

Socialist neuroscience?

Haier espouses a typical HBD-Rawlsian approach to social policy:

Here is my political bias. I believe government has a proper role, and a moral imperative, to provide resources for people who lack the cognitive capabilities required for education, jobs, and other opportunities that lead to economic success and increased SES. This goes beyond providing economic opportunities that might be unrealistic for individuals lacking the requisite mental abilities. It goes beyond demanding more complex thinking and higher expectations for every student irrespective of their capabilities (a demand that is likely to accentuate cognitive gaps). It even goes beyond supporting programs for early childhood education, jobs training, affordable childcare, food assistance, and access to higher education. There is no compelling evidence that any of these things increase intelligence, but I support all these efforts because they will help many people advance in other ways and because they are the right thing to do. However, even if this support becomes widely available, there will be many people at the lower end of the g-distribution who do not benefit very much, despite best efforts. Recall from Chapter 1 that the normal distribution of IQ scores with a mean of 100 and a standard deviation of 15 estimates that 16% of people will score below an IQ of 85 (the minimum for military service in the USA). In the USA, about 51 million people have IQs lower than 85 through no fault of their own. There are many useful, affirming jobs available for these individuals, usually at low wages, but generally they are not strong candidates for college or for technical training in many vocational areas. Sometimes they are referred to as a permanent underclass, although this term is hardly ever explicitly defined by low intelligence. Poverty and near-poverty for them is a condition that may have some roots in the neurobiology of intelligence beyond anyone’s control.

The sentence you just read is the most provocative sentence in this book. It may be a profoundly inconvenient truth or profoundly wrong. But if scientific data support the concept, is that not a jarring reason to fund supportive programs that do not stigmatize people as lazy or unworthy? Is that not a reason to prioritize neuroscience research on intelligence and how to enhance it? The term “neuro-poverty” is meant to focus on those aspects of poverty that result mostly from the genetic aspects of intelligence. The term may overstate the case. It is a hard and uncomfortable concept, but I hope it gets your attention. This book argues that intelligence is strongly rooted in neurobiology. To the extent that intelligence is a major contributing factor for managing daily life and increasing the probability of life success, neuro-poverty is a concept to consider when thinking about how to ameliorate the serious problems associated with tangible cognitive limitations that characterize many individuals through no fault of their own.

Public policy and social justice debates might be more informed if what we know about intelligence, especially with respect to genetics, is part of the conversation. In the past, attempts to do this were met mostly with acrimony, as evidenced by the fierce criticisms of Arthur Jensen (Jensen, 1969; Snyderman & Rothman, 1988), Richard Herrnstein (1973), and Charles Murray (Herrnstein & Murray, 1994; Murray, 1995). After Jensen’s 1969 article, both IQ in the Meritocracy and The Bell Curve raised this prospect in considerable detail. Advances in neuroscience research on intelligence now offer a different starting point for discussion. Given that approaches devoid of neuroscience input have failed for 50 years to minimize the root causes of poverty and the problems that go with it, is it not time to consider another perspective?

Here is the second most provocative sentence in this book: The uncomfortable concept of “treating” neuro-poverty by enhancing intelligence based on neurobiology, in my view, affords an alternative, optimistic concept for positive change as neuroscience research advances. This is in contrast to the view that programs which target only social/cultural influences on intelligence can diminish cognitive gaps and overcome biological/genetic influences. The weight of evidence suggests a neuroscience approach might be even more effective as we learn more about the roots of intelligence. I am not arguing that neurobiology alone is the only approach, but it should not be ignored any longer in favor of SES-only approaches. What works best is an empirical question, although political context cannot be ignored. On the political level, the idea of treating neuro-poverty like it is a neurological disorder is supremely naive. This might change in the long run if neuroscience research ever leads to ways to enhance intelligence, as I believe it will. For now, epigenetics is one concept that might bridge both neuroscience and social science approaches. Nothing will advance epigenetic research faster than identifying specific genes related to intelligence so that the ways environmental factors influence those genes can be determined. There is common ground to discuss and that includes what we know about the neuroscience of intelligence from the weight of empirical evidence. It is time to bring “intelligence” back from a 45-year exile and into reasonable discussions about education and social policies without acrimony.
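The 16% figure in the quote is just the share of a normal distribution lying more than one standard deviation below the mean. A sketch of the arithmetic follows; the US population figure of about 320 million is our assumption, since the quote does not state which figure Haier used.

```python
import math

def share_below(iq, mean=100.0, sd=15.0):
    """Share of a normal IQ distribution scoring below `iq`."""
    z = (iq - mean) / sd
    return 0.5 * math.erfc(-z / math.sqrt(2))

share = share_below(85)   # one SD below the mean, about 0.159
us_pop_millions = 320     # assumption: approximate US population around 2016
print(f"{share:.3f} -> {share * us_pop_millions:.0f} million")
```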

It’s a little odd that he ignores genetic interventions here, given that he mentioned them earlier. Aside from that, the focus on neurobiology is eminently sensible. If typical SES approaches – e.g. even more income theft redistribution – do have causal effects on intelligence, this must be through some pretty long causal pathway, so we cannot expect large effects per unit of change we make in the income distribution. Neurobiology, however, is the direct biological substrate of intelligence, and thus one can expect to see much larger gains from interventions targeted at this domain, for the simple reason that it is the direct causal antecedent of the thing we are trying to manipulate – provided, of course, that any non-genetic intervention can work.

From a utilitarian/consequentialist perspective, government action to increase intelligence, if it works, is likely to have huge payoffs at many levels, so it is definitely something I can get behind – with the caveat that we get serious about it: open data, large-scale, preregistered RCTs.

Chronometric measures and the elusive ratio scale of intelligence

Haier quotes Jensen on chronometric measures:

At the end of his book, Jensen concluded, “… chronometry provides the behavioral and brain sciences with a universal absolute [ratio] scale for obtaining highly sensitive and frequently repeatable measurements of an individual’s performance on specially devised cognitive tasks. Its time has come. Let us get to work!” (p. 246). This method of assessing intelligence could establish actual changes due to any kind of proposed enhancement in a before and after research design. The sophistication of this method for measuring intelligence would diminish the gap with sophisticated genetic and neuroimaging methods.

But it does not follow. Performance on any given chronometric test is a function of multiple independent factors, some of which might change without the others doing so. According to a large literature, specific abilities generally have zero or near-zero incremental predictive validity beyond g, so boosting them is not of much use. Chronometric tests measure intelligence, but also other factors. When one repeats chronometric tests, there are gains (people’s reaction times do become faster), but this does not mean that intelligence increased; some other factor did.
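A toy model of this argument, with hypothetical loadings: the observed chronometric score (oriented so that higher is better, e.g. speed rather than raw reaction time) reflects g, a task-specific factor, and noise, and practice raises only the task-specific factor. Scores go up on retest while g, by construction, does not change at all, and the individual gains are essentially uncorrelated with g.

```python
import random

def corr(xs, ys):
    """Pearson correlation."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5

def simulate_retest(n=5000, practice_gain=1.0, seed=5):
    """Test, practice (raises the specific factor only), retest."""
    rng = random.Random(seed)
    g = [rng.gauss(0, 1) for _ in range(n)]
    s = [rng.gauss(0, 1) for _ in range(n)]

    def score(gi, si):
        # Hypothetical loadings: half g, half task-specific, plus fresh noise each session.
        return 0.5 * gi + 0.5 * si + rng.gauss(0, 0.7)

    before = [score(gi, si) for gi, si in zip(g, s)]
    after = [score(gi, si + practice_gain) for gi, si in zip(g, s)]  # g is untouched
    gains = [a - b for a, b in zip(after, before)]
    return g, gains

g, gains = simulate_retest()
print(f"mean score gain: {sum(gains) / len(gains):.2f} (g changed by exactly 0)")
print(f"corr(g, gain):   {corr(g, gains):.2f}")
```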

Besides, it is easy enough to have a ratio scale. Most tests that are part of batteries are indeed on a ratio scale: if you get 0 items right you have no ability on that test. The trouble is aligning the ratio scale of a given test with the presumed ratio scale of intelligence. Anyone can make a test sufficiently hard so that no one can get any items right, but that doesn’t mean people who took the test have 0 intelligence.

Moreover, chronometric measures are reversed: a 0 on a chronometric test is the best possible performance, not the worst possible performance. So, while they are on a ratio scale – a 0 ms is a true 0 and one can sensibly apply multiplication operations – they are clearly not aligned with the hypothetical ratio scale of intelligence itself.

These criticisms aside, chronometric tests are interesting for multiple reasons and deserve much further study:

- They are resistant to training gains. One cannot just memorize the items beforehand, which is important for high-stakes testing. There are practice gains, but they show diminishing returns, so if we want testing that is not much confounded with practice gains, we can give people many practice trials first.

- They are resistant to motivation effects. Studies have so far not produced any associations between subjects’ ratings of how hard they tried on a given trial and their actual performance on that trial.

- They are much closer to the neurobiology of intelligence, and thus their use is likely to lead to advances in our understanding of that area too. I recommend taking brain measurements of people as they complete chronometric tests and seeing if one can link the two. EEG and similar methods are getting quite cheap, so this is possible to do at a large scale.

- Chronometric tests are resistant to ceiling problems. While one can max out on simple reaction time tests – get to 0 ms decision time – it is easy to simply add some harder tests, which will effectively raise the ceiling again. One can do this endlessly. It is quite likely that one can find ways to measure into the extreme high end of ability using simple predictive equations, something that is not generally possible with ordinary tests. This could be researched using e.g. the SMPY sample.

Unfortunately, I do not have the resources right now to pursue this area of research. It would require having a laboratory and some way to get people to participate in large numbers, Galton style.

Conclusion

All in all, this is a very readable book that summarizes research into intelligence for the intelligent layman, while also giving an overview of the neuroscientific findings. It is similar in spirit to Ritchie’s recent book. The main flaws are statistical, but they are not critical to the book in my opinion.