The great David Rozado has a new study out on the politics of Wikipedia, titled Is Wikipedia Politically Biased?

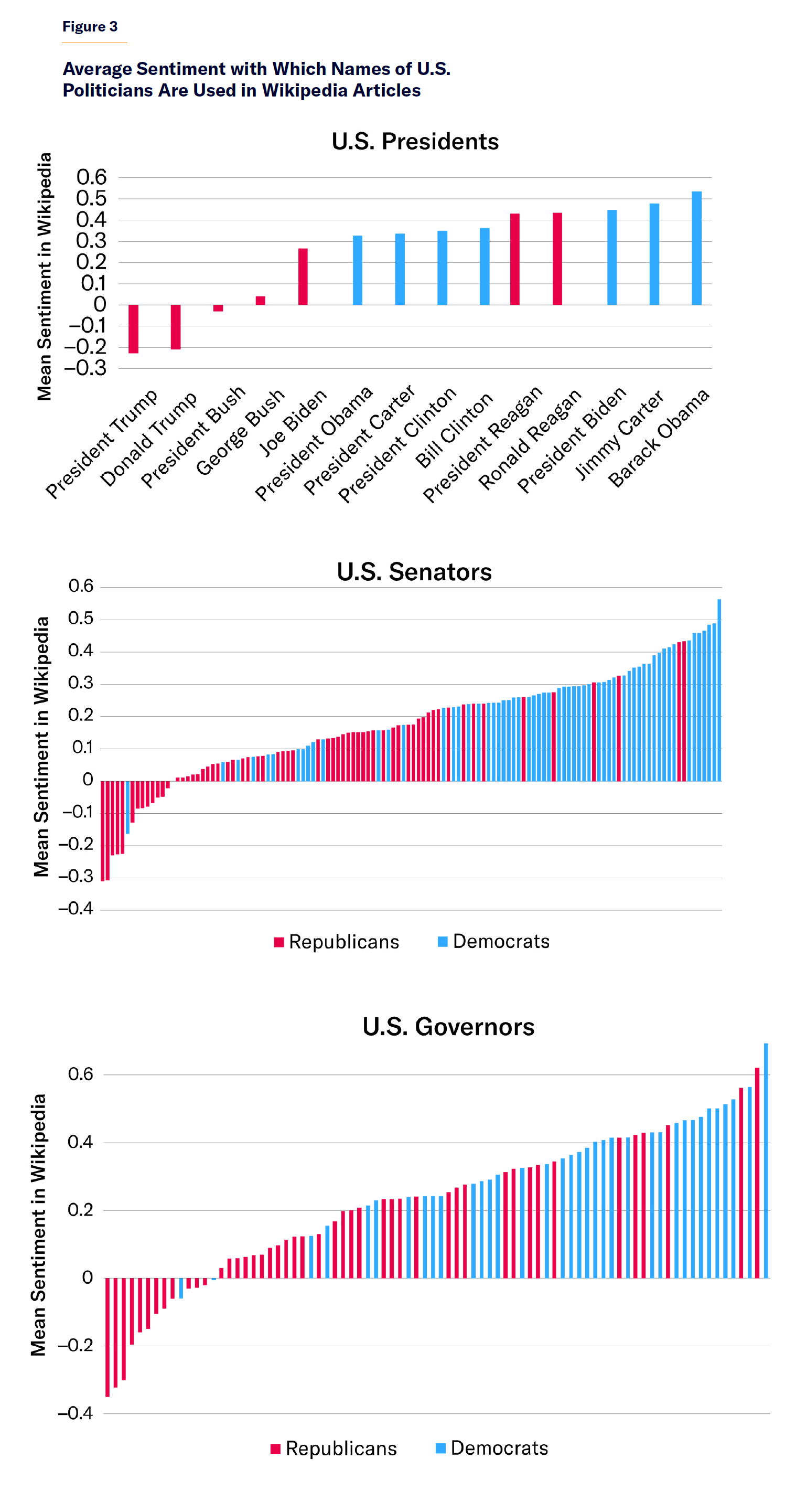

He downloaded the English Wikipedia database, and analyzed whether the articles covering different politically aligned actors or organizations were systematically more positive or negative in tone (sentiment analysis). Tone was assessed using machine learning approaches based on modern LLMs or older methods. The results were very clear for US politicians:

As well as journalists:

Overall, one can compute the difference in positive vs. negative sentiment across various categories, and the results are quite clear:

The average effect size is about 1 standard deviation (Cohen’s d), though it varied somewhat across categories. UK parliament members had the smallest difference, perhaps because the mostly US-based Wikipedia writers don’t care much about them and so don’t spend a lot of time lobbying to add negative material. It would be informative to see if this holds for non-English-speaking areas, say, German or Spanish politicians. One could run the sentiment analysis on Wikipedias of various languages to see how they differ, e.g. English vs. German Wikipedia coverage of Anglo vs. German politicians.
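For readers who want to see what an effect size of this kind means in practice, here is a minimal sketch of Cohen’s d using the pooled standard deviation. The sentiment scores are made up for illustration and are not Rozado’s data, and the function name `cohens_d` and the pooled-variance variant are my assumptions rather than the study’s exact procedure.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Standardized mean difference between two groups (pooled-SD variant)."""
    n_a, n_b = len(group_a), len(group_b)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Hypothetical per-article sentiment scores (illustrative only, not real data).
left_scores = [0.30, 0.10, 0.25, 0.20, 0.15]
right_scores = [0.05, -0.10, 0.10, 0.00, -0.05]

d = cohens_d(left_scores, right_scores)
print(f"Cohen's d = {d:.2f}")
```

A d of 1 means the two group averages sit one pooled standard deviation apart, which is conventionally considered a large effect.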

This bias inherent in Wikipedia is important because Wikipedia is one of the most visited websites in the world (#6 perhaps), and has a veneer of neutrality. It is in fact mostly neutral because the overwhelming proportion of pages concern topics not relevant to current political debates (e.g., this or that mineral, this or that park). However, anything related to the Current Thing in politics is certainly left-biased. Many readers don’t know that, so this bias works its way into people’s minds. Even if you know it’s biased, you don’t necessarily know what it is leaving out, or giving improper attention to.

Another reason Wikipedia’s bias matters is that our future overlords, the LLMs, are trained in part on the Wikipedia corpus. Any bias in this corpus can thus be introduced into the AIs directly through training. Rozado gives some evidence of this as well.

Wikipedia itself probably does not originate all of this bias, since the sources it draws upon (mainstream media and academic writings) are themselves strongly left-biased. But in fact, left-wing activist editors on Wikipedia have lobbied to get various non-left sources banned, so those sources cannot even be used to counter the bias. You can see which sources are banned or listed as questionable on these pages. There are some curious pairs of reliable vs. unreliable sources:

Amnesty is a fairly radical left-wing advocacy organization, but it is somehow listed as reliable, unlike The American Conservative, which is obviously a conservative magazine. A proper classification would list both as having political bias. Curiously, the ADL (Anti-Defamation League), a Jewish advocacy group focused on hate and defamation, is listed as kinda reliable, but depending on the topic:

In fact, even rather tame center-right magazines like Quillette are completely banned:

It would be informative to get a left-right score for each of these outlets and organizations and see how strongly it relates to their current credibility standing on this list. The list is quite important because it controls which sources can be used on Wikipedia, and basically anything written based on banned or questionable sources can be immediately removed by an opposing editor with a sound Wikipedia legal basis. As such, one way that left activist editors have been very active is lobbying to get their opponents’ favorite sources banned. Sneaky but effective. You can find the discussions by following the links on the page.

What can be done? I have a positive take. It possibly won’t matter. AIs will take over the role of Wikipedia. People will not be consulting Wikipedia to manually search for the information they want. They will just ask their favorite AI to give them the answers. Wikipedia will fade as the go-to resource that humans use. AIs will still use it, but Rozado has also previously shown that current LLMs can seemingly take into account the bias inherent in the source material, the same way historians do when reading ancient history. The bias seems to come in during the fine-tuning. It is therefore possible that someone like Elon Musk could successfully make an unbiased or right-biased AI (Grok), which will become very popular and won’t suffer from this Wikipedia editor political bias. Based on this take, the key information battle to come is over who gets to decide what bias goes into the LLMs. Will they be 2+2=4 or 2+2=5 AIs? We will find out in the next few years.