Last year I convinced Charles Murray to share some of his data underlying his great book Human Accomplishment. He put it on OSF, where it remains available. So far so good.

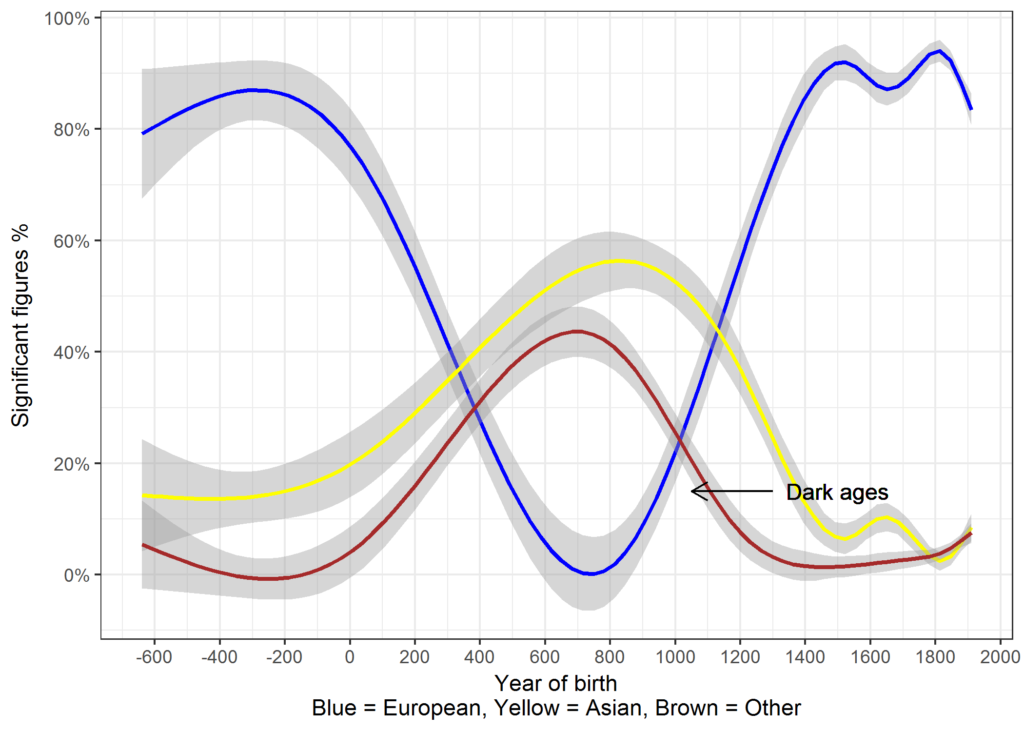

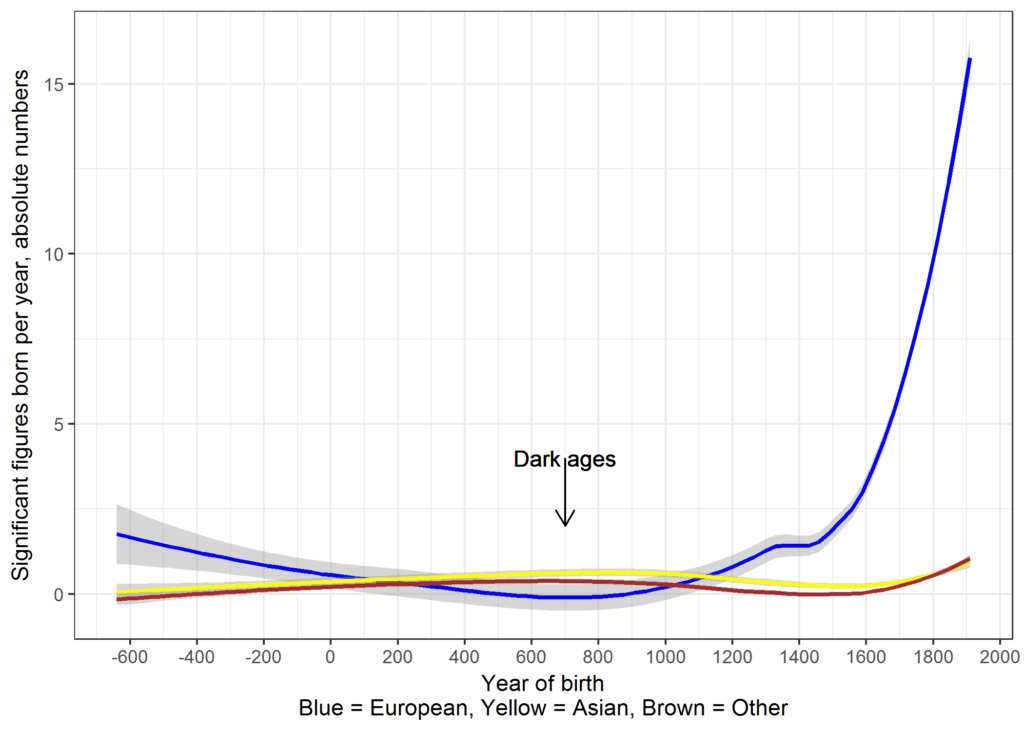

At some later point in 2016, I finally had a closer look at the dataset and made some rudimentary plots based on the data.

I posted these on Twitter (original post, follow-up). The first one was found by some SJWs who caused a minor Twitterstorm. There was also a Reddit thread full of mostly useless and lazy criticism of the figure (and of myself and of Charles). I’m pretty used to this stuff by now.

In general, I’m motivated by people making strong claims based on their religious or political ideology (e.g. I spent years debating creationists and now spend more time debating climate contrarians), so when they complain about the HA dataset, it motivates me to try to cross-validate it (or invalidate it, whatever the outcome may be). The HA dataset is limited in that it only goes up to 1950. What about since 1950? That’s 67 years; surely we have some idea of who made good contributions in the 1950-1980 period. There is a recency bias in sources, such that recent events and persons get more space, so we cannot get too close to the present without problems, unless we try to adjust for this bias. Whatever the case may be, there is an easy-ish way to get more data: Wikipedia. In fact, someone else already built a website for assessing eminence using Wikipedia data: Pantheon. Apparently, a paper was also published on this, which I only just found.

Pantheon 1.0, a manually verified dataset of globally famous biographies

We present the Pantheon 1.0 dataset: a manually verified dataset of individuals that have transcended linguistic, temporal, and geographic boundaries. The Pantheon 1.0 dataset includes the 11,341 biographies present in more than 25 languages in Wikipedia and is enriched with: (i) manually verified demographic information (place and date of birth, gender) (ii) a taxonomy of occupations classifying each biography at three levels of aggregation and (iii) two measures of global popularity including the number of languages in which a biography is present in Wikipedia (L), and the Historical Popularity Index (HPI) a metric that combines information on L, time since birth, and page-views (2008–2013). We compare the Pantheon 1.0 dataset to data from the 2003 book, Human Accomplishments, and also to external measures of accomplishment in individual games and sports: Tennis, Swimming, Car Racing, and Chess. In all of these cases we find that measures of popularity (L and HPI) correlate highly with individual accomplishment, suggesting that measures of global popularity proxy the historical impact of individuals.

The dataset is public, but there is a big problem. The persons in the dataset are not given a standardized ID, so it is hard to merge datasets due to the variation in names. In general, when it comes to persons, some sources use ‘last name, first name’ (LNFN; HA does this), others use ‘first name last name’ (FNLN; Wikipedia does this). It is not always easy to convert from FNLN to LNFN because there are names like Abū Kāmil Shujāʿ ibn Aslam (also known as Auoquamel, al-ḥāsib al-miṣrī among others), but one can convert easily from LNFN to FNLN. Some sources include middle names, some include them only as initials. Some include the area or city where the person was from (Amphicrates of Athens). Some use epithets (Seneca the Younger vs. Seneca the Elder).
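The easy direction (LNFN to FNLN) can be sketched in a few lines. This is only a minimal illustration, assuming the name contains at most one meaningful comma; the function name `lnfn_to_fnln` is made up for this example, and names without a comma are passed through untouched.

```python
def lnfn_to_fnln(name: str) -> str:
    """Convert 'Last, First' to 'First Last'.

    Names without a comma (e.g. 'Amphicrates of Athens') are returned
    unchanged apart from trimming surrounding whitespace.
    """
    if "," not in name:
        return name.strip()
    # Split on the first comma only, so anything after it stays together.
    last, first = name.split(",", 1)
    # Skip empty parts so a trailing comma does not leave stray spaces.
    return " ".join(part for part in (first.strip(), last.strip()) if part)


print(lnfn_to_fnln("Abel, Niels"))
```

Middle names, initials and epithets are not normalized here, so they would still need separate handling before matching.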

Fortunately, there is actually a standardized ID for prominent persons: the International Standard Name Identifier (ISNI). (Actually, the standardization sickness applies and there are a few different ones of these, but this is the most standard one!) Unfortunately, neither Pantheon nor the HA dataset supplies these IDs. But in the case of Pantheon, since their source is Wikipedia, we should be able to just look up the name on Wikipedia. But ‘should’ does not always mean ‘can’. Even if one could at the time of creation, someone might have made a disambiguation page afterwards or changed the redirect. I looked over some names looking for trouble and found a case. There’s a person just called Sabrina. Looking up that page results in a disambiguation page. The entry refers to this one, which one can see based on the year/country of birth. However, that requires an extra matching step, and more coding work. There are 714 single-word persons in the dataset. A quick estimate is that ~30% of these will cause problems; examples: M.I.A., Hide, Jewel, Pink, Sandra. Most of these problems seem to be due to artists’ stage names that use common words or first names.
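Flagging the single-word names for manual review is simple enough. A minimal sketch, assuming the names are available as plain strings; `is_single_word` is a hypothetical helper, and the list below only reuses the examples mentioned above plus one ordinary two-word name.

```python
def is_single_word(name: str) -> bool:
    """True if the name has no internal whitespace (e.g. a stage name)."""
    return len(name.split()) == 1


# Example names taken from the text, plus one multi-word name for contrast.
names = ["M.I.A.", "Hide", "Jewel", "Pink", "Sandra", "Sabrina", "Niels Abel"]

# These are the candidates most likely to hit a disambiguation page.
flagged = [n for n in names if is_single_word(n)]
print(flagged)
```

This only finds the risky names; deciding which Wikipedia page each one refers to still needs the birth year/country check.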

The HA dataset is more problematic because we first have to convert from LNFN to FNLN, find the ISNI for that person, then find the Wikipedia page. There is an API/minimal website for searching for ISNIs for persons. Let’s try to find the ISNI for “Abel, Niels” from the HA dataset. The result is here. So, there was only 1 match, which is good. If we search Wikipedia normally, the correct result is #1. So by finding all the unique names in both datasets and searching for them, it should be possible to link them up using the ISNIs.

Often there will be more than 1 result, in which case we need decision rules the computer can use. My initial proposal is this:

- If there are 0 results, throw an error (we need to figure out what to do in these cases).

- If there is 1 result, use that.

- If there are >1 results, extract their life spans, and see if there is a perfect match. If not, are there any good matches (e.g. differs by <5 years in both birth and death year)? If not, throw an error.
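The rules above could be sketched roughly as follows. Everything here is illustrative: the `Candidate` record, the `NoMatchError` class and the function name are made up, the ISNI strings in the usage example are placeholders rather than real identifiers, and picking the closest of several good matches is my own assumption about how to break ties.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Candidate:
    isni: str                # identifier returned by the search (placeholder here)
    born: Optional[int]      # birth year, if the result lists one
    died: Optional[int]      # death year, if the result lists one


class NoMatchError(LookupError):
    """Raised when the rules cannot settle on a single candidate."""


def choose_candidate(candidates: Sequence[Candidate], born: int, died: int,
                     tolerance: int = 5) -> Candidate:
    # Rule 1: no results -> error, to be handled by hand later.
    if not candidates:
        raise NoMatchError("no results")
    # Rule 2: exactly one result -> use it.
    if len(candidates) == 1:
        return candidates[0]
    # Rule 3: several results -> compare life spans (only where both years are known).
    dated = [c for c in candidates if c.born is not None and c.died is not None]
    perfect = [c for c in dated if c.born == born and c.died == died]
    if perfect:
        return perfect[0]
    good = [c for c in dated
            if abs(c.born - born) < tolerance and abs(c.died - died) < tolerance]
    if good:
        # Assumption: among several good matches, take the closest one.
        return min(good, key=lambda c: abs(c.born - born) + abs(c.died - died))
    raise NoMatchError("several results, none with a close life span")


# Usage with placeholder identifiers; Niels Abel lived 1802-1829.
results = [Candidate("placeholder-a", 1802, 1829), Candidate("placeholder-b", 1902, 1980)]
print(choose_candidate(results, born=1802, died=1829).isni)
```

Raising an error instead of guessing keeps the uncertain cases visible, so they can be collected and checked manually.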

I could not immediately locate a website that gets us from ISNI to Wikipedia URL, but I found out that Wikipedia has 190k persons with ISNIs, so it seems likely that Wikipedia has good ISNI coverage for the persons in the HA and Pantheon datasets.

Trying to link without ISNI

Without relying on ISNI, I converted the HA dataset to FNLN format and looked up the exact matches in the science index (n=1,442). Of these, I could match up 338, or about 23%. Not good, but okay for a first attempt. One could probably match up some more using a fuzzy string matching approach, but it’s not a good solution for matching all of them.

Pantheon supplies a few different measures:

- L: Number of Language Editions, i.e. the number of Wikipedias that features this person.

- HPI: The Historical Popularity Index. Complex metric defined in terms of multiple factors.

- Lstar: Another complex measure.

- PV: page views.

- PVe: page views English Wikipedia.

- PVne: page views non-English Wikipedia.

- sPV: standard deviation of page views.

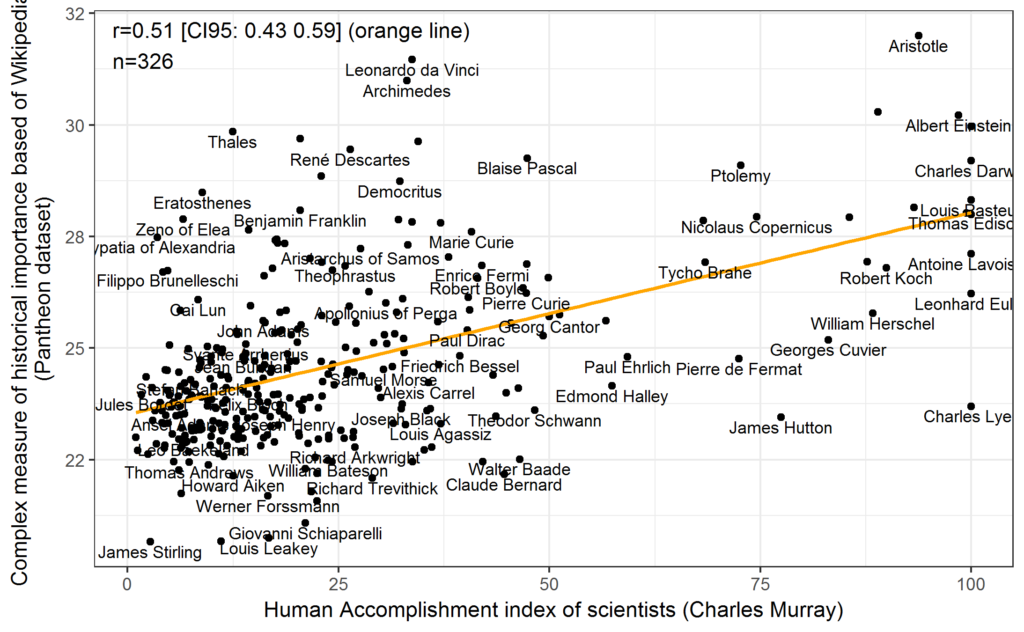

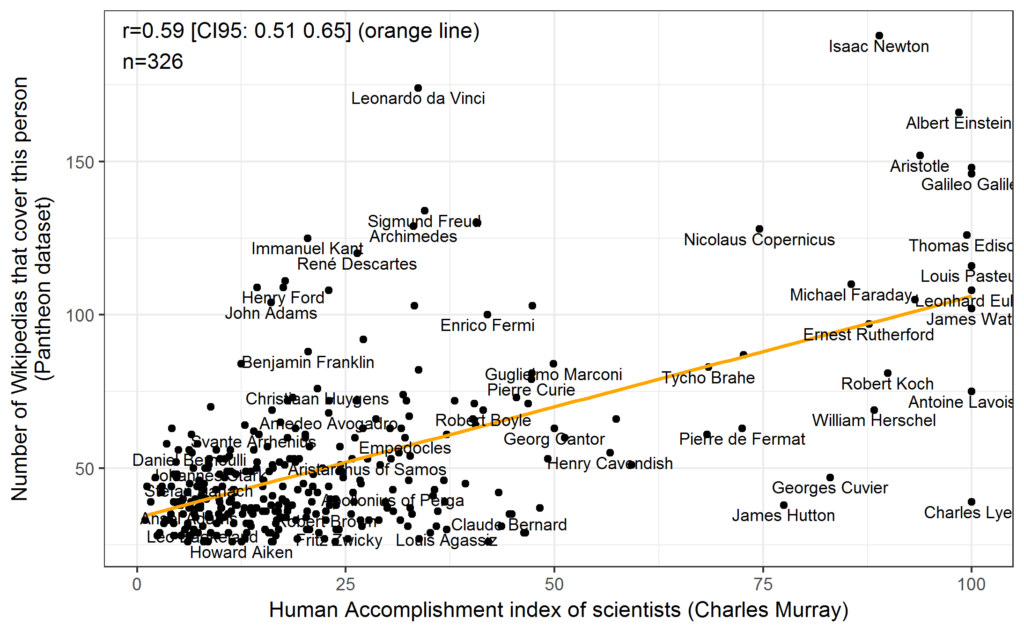

How do they relate to HA’s index?

| | Index | Rank | L | Lstar | PV | PVe | PVne | sPV | HPI |
|---|---|---|---|---|---|---|---|---|---|
| Index | 1.00 | -0.45 | 0.59 | -0.12 | 0.47 | 0.45 | 0.48 | 0.29 | 0.51 |
| Rank | -0.45 | 1.00 | -0.73 | -0.11 | -0.45 | -0.43 | -0.44 | -0.17 | -0.93 |
| L | 0.59 | -0.73 | 1.00 | 0.08 | 0.83 | 0.81 | 0.83 | 0.47 | 0.86 |
| Lstar | -0.12 | -0.11 | 0.08 | 1.00 | -0.03 | -0.08 | 0.00 | -0.03 | 0.09 |
| PV | 0.47 | -0.45 | 0.83 | -0.03 | 1.00 | 0.98 | 0.99 | 0.53 | 0.66 |
| PVe | 0.45 | -0.43 | 0.81 | -0.08 | 0.98 | 1.00 | 0.94 | 0.53 | 0.62 |
| PVne | 0.48 | -0.44 | 0.83 | 0.00 | 0.99 | 0.94 | 1.00 | 0.52 | 0.67 |
| sPV | 0.29 | -0.17 | 0.47 | -0.03 | 0.53 | 0.53 | 0.52 | 1.00 | 0.25 |
| HPI | 0.51 | -0.93 | 0.86 | 0.09 | 0.66 | 0.62 | 0.67 | 0.25 | 1.00 |

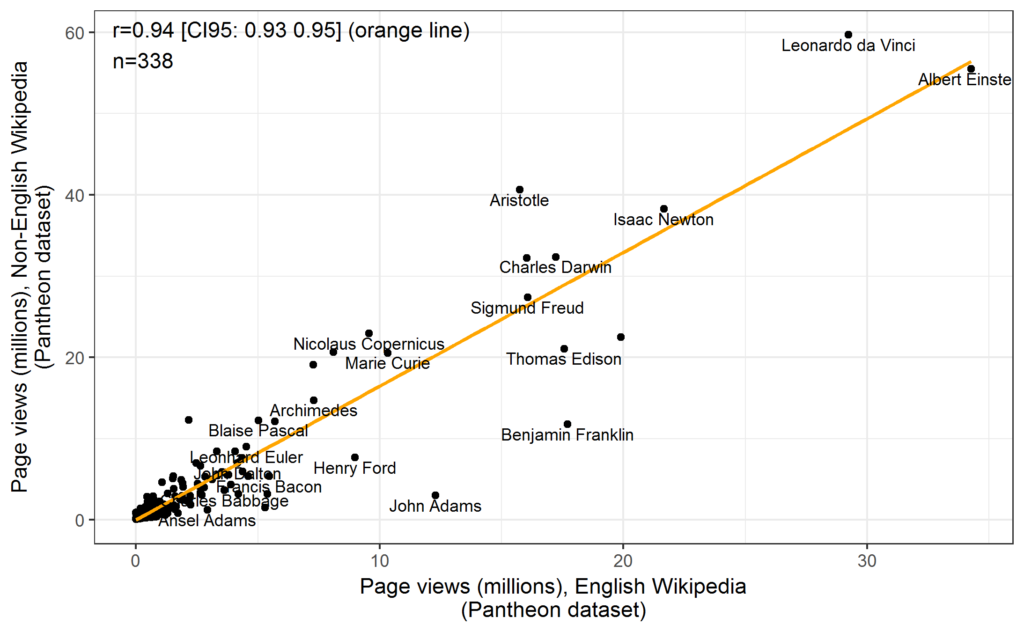

Lstar does not correlate with much of anything. sPV is a measure of dispersion, not of prominence. Interestingly, PVe and PVne correlate at nearly 1.00 (r = .94), meaning that English and non-English readers read about the same persons (but perhaps we should log-transform these values since they are very non-normal).
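To show what the log-transform would involve, here is a small sketch using only the standard library. The numbers are invented toy values, not Pantheon page views, and the function names are mine. It assumes all counts are strictly positive; zero counts would need something like log(x + 1) instead.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Plain Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def log_pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation after a log10 transform (inputs must be > 0)."""
    return pearson([math.log10(v) for v in x], [math.log10(v) for v in y])


# Toy, heavily skewed counts (made up for illustration only).
views_english = [10, 100, 1000]
views_other = [100, 10_000, 1_000_000]

print(pearson(views_english, views_other))
print(log_pearson(views_english, views_other))
```

With skewed counts like these, a few huge values dominate the raw correlation, which is why the transformed version can give a different picture.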

Apparently, non-English readers read more about Aristotle and da Vinci than English readers do (both of whom were non-English themselves). The outliers in the other direction seem to be mostly due to Americans: I see 2 founding fathers and 2 popular inventors. The unnamed one close to Edison is Tesla, another inventor from the US. But the language effect is smaller than I imagined.

Rank is just a rank order based on the entire set of data (which includes all kinds of persons, such as Jesus and Madonna), so it is not an interval scale, and definitely not after we subset the Pantheon dataset as we did. HA’s Index correlates from .45 to .59 with the main metrics of interest. Not bad at all. Scatterplots:

Work to be done:

- Make pipelines for getting the ISNIs for both datasets. Then merge datasets.

- Tinker with the measures to see if one can remove some of the English bias.

- Scrape relevant information from the Wikipedia pages about the persons, and study that.

- Acquire the geographical information used in HA, but which was not part of the released dataset. Map the results.

- Write up and submit to somewhere suitable, probably Mankind Quarterly.

Added

Can’t we just use Wikipedia search to get a person page from the ISNI? Sometimes we can:

- https://en.wikipedia.org/w/index.php?title=Special:Search&profile=default&fulltext=1&search=%220000+0001+2125+2846%22&searchToken=8h4ywr2bg5nsve3wj6f8yd89y

- https://en.wikipedia.org/wiki/Special:Search?search=0000+0001+2125+2846+&sourceid=Mozilla-search&searchToken=dp7relojeb9vbk0em2nd273ns

But this approach requires getting the entire page every time, so it’s somewhat slow. A good API would be maybe 100 times faster (with parallel requests), a standard one maybe 10 times. But it seems to work and it only has to be done once provided we save the results for reuse, so the speed is not a big problem (it will take some hours to get all the persons in the Pantheon dataset, n≈11k). Note that one must use quotes to avoid random numeric matches with other pages.
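A rough sketch of the two points above, quoting the ISNI in the search URL and saving results for reuse. The URL follows the pattern of the first link above (minus the session-specific `searchToken`); the function names, the in-memory `cache` dictionary and the `fetch` callable are assumptions for illustration, and the actual download and page parsing are left out.

```python
from typing import Callable, Dict
from urllib.parse import urlencode

SEARCH_BASE = "https://en.wikipedia.org/w/index.php?title=Special:Search&"


def isni_search_url(isni: str) -> str:
    """Build a full-text search URL for a 16-character ISNI, in quotes."""
    chars = "".join(isni.split())          # drop any spaces in the input
    if len(chars) != 16:
        raise ValueError(f"expected 16 characters, got {len(chars)}: {isni!r}")
    # ISNIs are written in four blocks of four; the quotes force an exact phrase match.
    grouped = " ".join(chars[i:i + 4] for i in range(0, 16, 4))
    return SEARCH_BASE + urlencode({"fulltext": "1", "search": f'"{grouped}"'})


def lookup_cached(isni: str, cache: Dict[str, str],
                  fetch: Callable[[str], str]) -> str:
    """Fetch the search page once per ISNI and reuse the stored result after that."""
    key = "".join(isni.split())
    if key not in cache:
        cache[key] = fetch(isni_search_url(key))
    return cache[key]


print(isni_search_url("0000 0001 2125 2846"))
```

In practice `fetch` would be whatever HTTP client one prefers, and the cache would be written to disk so that the lookups really only have to be done once.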