In Making Sense of Heritability (2005), Sesardić wrote:

On the less obvious side, a nasty campaign against H could have the unintended effect of strengthening H [hereditarianism] epistemically, and making the criticism of H look less convincing. Simply, if you happen to believe that H is true and if you also know that opponents of H will be strongly tempted to “play dirty,” that they will be eager to seize upon your smallest mistake, blow it out of all proportion, and label you with Dennett’s “good epithets,” with a number of personal attacks thrown in for good measure, then if you still want to advocate H, you will surely take extreme care to present your argument in the strongest possible form. In the inhospitable environment for your views, you will be aware that any major error is a liability that you can hardly afford, because it will more likely be regarded as a reflection of your sinister political intentions than as a sign of your fallibility. The last thing one wants in this situation is the disastrous combination of being politically denounced (say, as a “racist”) and being proved to be seriously wrong about science. Therefore, in the attempt to make themselves as little vulnerable as possible to attacks they can expect from their uncharitable and strident critics, those who defend H will tread very cautiously and try to build a very solid case before committing themselves publicly. As a result, the quality of their argument will tend to rise, if the subject matter allows it.22

It is different with those who attack H. They are regarded as being on the “right” side (in the moral sense), and the arguments they offer will typically get a fair hearing, sometimes probably even a hearing that is “too fair.” Many a potential critic will feel that, despite seeing some weaknesses in their arguments, he doesn’t really want to point them out publicly or make much of them because this way, he might reason, he would just play into the hands of “racists” and “right-wing ideologues” that he and most of his colleagues abhor. 23 Consequently, someone who opposes H can expect to be rewarded with being patted on the back for a good political attitude, while his possible cognitive errors will go unnoticed or unmentioned or at most mildly criticized.

Now, given that an advocate of H and an opponent of H find themselves in such different positions, who of the two will have more incentive to invest a lot of time and hard work to present the strongest possible defense of his views? The question answers itself. In the academic jungle, as elsewhere, it is the one who anticipates trouble who will spare no effort to be maximally prepared for the confrontation.

If I am right, the pressure of political correctness would thus tend to result, ironically, in politically incorrect theories becoming better developed, more carefully articulated, and more successful in coping with objections. On the other hand, I would predict that a theory with a lot of political support would typically have a number of scholars flocking to its defense with poorly thought out arguments and with speedily generated but fallacious “refutations” of the opposing view. 24 This would explain why, as Ronald Fisher said, “the best causes tend to attract to their support the worst arguments” (Fisher 1959: 31).

Example? Well, the best example I can think of is the state of the debate about heritability. Obviously, the hypothesis of high heritability of human psychological variation – and especially the between-group heritability of IQ differences – is one of the most politically sensitive topics in contemporary social science. The strong presence of political considerations in this controversy is undeniable, and there is no doubt about which way the political wind is blowing. When we turn to discussions in this context that are ostensibly about purely scientific issues two things are striking. First, as shown in previous chapters, critics of heritability very often rely on very general, methodological arguments in their attempts to show that heritability values cannot be determined, are intrinsically misleading, are low, are irrelevant, etc. Second, these global methodological arguments – although defended by some leading biologists, psychologists, and philosophers of science – are surprisingly weak and unconvincing. Yet they continue to be massively accepted, hailed as the best approach to the nature–nurture issue, and further transmitted, often with no detailed analysis or serious reflection.

Footnotes are:

22 This is not guaranteed, of course. For example, biblical literalists who think that the world was created 6,000 years ago can expect to be ridiculed as irrational, ignorant fanatics. So, if they go public, it is in their strong interest to use arguments that are not silly, but the position they have chosen to advocate leaves them with no good options. (I assume that it is not a good option to suggest, along the lines of Philip Gosse’s famous account, that the world was created recently, but with the false traces of its evolutionary history that never happened.)

23 A pressure in the opposite direction would not have much force. It is notorious that in the humanities and social science departments, conservative and other right-of-center views are seriously under-represented (cf. Ladd & Lipset 1975; Redding 2001).

24 I am speaking of tendencies here, of course. There would be good and bad arguments on both sides.

The Fisher (1959) reference given is actually about probability theory; in that context Fisher is not writing about genetics or biology and their relation to politics.

Did no one come up with this idea before? Seems unlikely. Robert Plomin and Thomas Bouchard came close in their 1987 chapters in the same book, Arthur Jensen: Consensus and Controversy (see also this earlier post). Bouchard:

One might fairly claim that this chapter does not constitute a critical appraisal of the work of Arthur Jensen on the genetics of human abilities, but rather a defense. If a reader arrives at that conclusion he or she has overlooked an important message. Since Jensen rekindled the flames of the heredity vs environment debate in 1969, human behavior genetics has undergone a virtual renaissance. Nevertheless, a tremendous amount of energy has been wasted. In my discussions of the work of Kamin, Taylor, Farber, etc., I have often been as critical of them as they have been of the hereditarian program. While I believe that their criticisms have failed and their conclusions are false, I also believe that their efforts were necessary. They were necessary because human behavior genetics has been an insufficiently self-critical discipline. It adopted the quantitative models of experimental plant and animal genetics without sufficient regard for the many problems involved in justifying the application of those models in human research. Furthermore, it failed to deal adequately with most of the issues that are raised and dealt with by meta-analytic techniques. Human behavior geneticists have, until recently, engaged in inadequate analyses. Their critics, on the other hand, have engaged in pseudo-analyses. Much of the answer to the problem of persuading our scientific colleagues that behavior is significantly influenced by genetic processes lies in a more critical treatment of our own data and procedures. The careful and systematic use of meta-analysis, in conjunction with our other tools, will go a long way toward accomplishing this goal. It is a set of tools and a set of attitudes that Galton would have been the first to apply in his own laboratory.

Plomin:

More behavioral genetic data on IQ have been collected since Jensen’s 1969 monograph than in the fifty years preceding it. As mentioned earlier, I would argue that much of this research was conducted because of Jensen’s monograph and the controversy and criticism it aroused.

…

A decade and a half ago Jensen clearly and forcefully asserted that IQ scores are substantially influenced by genetic differences among individuals. No telling criticism has been made of his assertion, and newer data consistently support it. No other finding in the behavioral sciences has been researched so extensively, subjected to so much scrutiny, and verified so consistently.

Chris Brand also has a chapter in this book; perhaps it has something relevant. I don’t recall it well.

To return to Sesardić, his contention is that non-scientific opposition to a scientific claim will result in so-called double standards: proponents of the claim face higher standards, so if reality supports the claim, higher quality evidence will be gathered and published. It is the reverse for critics of the claim: they will face less scrutiny, so their published arguments and evidence will tend to be poorer. Do we have some kind of objective way to test this claim? We do. We can measure scientific rigor by scientific field or subfield, and compare. Probably the most left-wing field of psychology is social psychology, and it has massive issues with the replication crisis. Intelligence and behavioral genetics research, on the other hand, have no such big problems. Being one of the least left-wing fields of psychology (nearly 50-50 balance of self-placed politics), intelligence research should thus have high rigor. A simple way to measure this is compiling data about statistical power by field. This is sometimes calculated as part of meta-analyses. Sean Last has compiled such values, reproduced below.

| Citation | Discipline | Mean / Median Power |
| --- | --- | --- |
| Button et al. (2013) | Neuroscience | 21% |
| | Brain Imaging | 8% |
| Smaldino and McElreath (2016) | Social and Behavioral Sciences | 24% |
| Szucs and Ioannidis (2017) | Cognitive Neuroscience | 14% |
| | Psychology | 23% |
| | Medical | 23% |
| Mallet et al (2017) | Breast Cancer | 16% |
| | Glaucoma | 11% |
| | Rheumatoid Arthritis | 19% |
| | Alzheimer’s | 9% |
| | Epilepsy | 24% |
| | MS | 24% |
| | Parkinson’s | 27% |
| Lortie-Forgues and Inglis (2019) | Education | 23% |
| Nuijten et al (2018) | Intelligence | 49% |
| | Intelligence – Group Differences | 57% |

The main issue with this is that the numbers concern either median or mean power, with some inconsistency across fields. The median is usually lower, so one could convert the values using their mean observed ratio.
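A minimal sketch of that conversion, assuming one has a handful of sources that report both mean and median power. The function names and all numbers below are illustrative placeholders of my own, not values from the cited papers.

```python
from statistics import mean


def median_to_mean_ratio(paired):
    """Average ratio of mean power to median power.

    `paired` is a list of (mean_power, median_power) tuples taken from
    sources that report both statistics.
    """
    ratios = [m / med for m, med in paired if med > 0]
    if not ratios:
        raise ValueError("need at least one source reporting both statistics")
    return mean(ratios)


def harmonize(values, ratio):
    """Express every entry as an approximate mean power.

    `values` maps a field name to (power, kind), where kind is
    "mean" or "median". Medians are scaled by `ratio` and capped at 1.
    """
    out = {}
    for field, (power, kind) in values.items():
        if kind == "mean":
            out[field] = power
        elif kind == "median":
            out[field] = min(1.0, power * ratio)
        else:
            raise ValueError(f"unknown kind: {kind!r}")
    return out


# Placeholder numbers for illustration only (NOT from the table above).
paired = [(0.30, 0.24), (0.40, 0.32)]
ratio = median_to_mean_ratio(paired)
harmonized = harmonize(
    {"field_a": (0.20, "median"), "field_b": (0.50, "mean")},
    ratio,
)
print(ratio, harmonized)
```

A single multiplicative ratio is a crude correction. With enough paired values one could instead fit the relationship and check whether it holds across the range of power.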

I should very much like someone to do a more detailed study of this. I imagine that one will do the following:

- Acquire a large dataset of scientific articles, including title, authors, abstract, keywords, full text, and references. This can be done either via Sci-Hub (difficult) or by mining open access journals (probably easy).

- Use algorithms to extract data of interest. Usually studies calculating power rely on so-called focal analyses, i.e., main or important statistical tests. These are hard to identify using simple algorithms, but such algorithms can extract all reported tests (those with standardized format, that is!). Check out the work by Nuijten et al linked above. A better idea is to get a dataset of manually extracted data, and then train a neural network to extract them as well. I think one can succeed in training such an algorithm that is at least as accurate as human raters. When this is done, one can use it on every paper one has data about. Furthermore, one should look into additional automated measures of scientific rigor or quality. This can be relatively simple stuff like counting table, figure, and reference density, the presence of robustness tests, or mentions of key terms such as “statistical power” and “publication bias”. It can also be more complicated, such as an algorithm that predicts whether a paper will likely replicate based on data from large replication studies. Such a prototype algorithm has been developed, and it reached an AUC of .77!

- Relate the measures of scientific rigor to indicators of political view of authors, or the conclusions of the paper, or the topic in general. Control for any obvious covariates such as year of publication.
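To make the "simple algorithm" part of the second step concrete, here is a rough sketch that pulls APA-formatted t-tests out of article text and computes a few of the crude rigor indicators mentioned above. The regular expression, the `KEY_TERMS` list, and the function names are my own assumptions for illustration; a real pipeline would need to cover many more test types and formats.

```python
import re

# Matches APA-style reports such as "t(48) = 2.31, p = .025".
# Other statistics (F, r, chi-square) would each need their own pattern.
T_TEST = re.compile(
    r"\bt\s*\(\s*(?P<df>\d+(?:\.\d+)?)\s*\)\s*=\s*(?P<t>-?\d*\.?\d+)"
    r"\s*,\s*p\s*(?P<cmp>[<>=])\s*(?P<p>\d*\.\d+)"
)

# Hypothetical list of terms whose presence is treated as a rigor signal.
KEY_TERMS = ("statistical power", "publication bias")


def extract_t_tests(text):
    """Return every APA-formatted t-test found in `text` as a dict."""
    return [
        {
            "df": float(m["df"]),
            "t": float(m["t"]),
            "cmp": m["cmp"],
            "p": float(m["p"]),
        }
        for m in T_TEST.finditer(text)
    ]


def rigor_indicators(text):
    """Compute simple, automatable indicators for one article's full text."""
    lowered = text.lower()
    indicators = {
        "n_t_tests": len(extract_t_tests(text)),
        # Count distinct table and figure numbers that are referenced.
        "n_tables": len(set(re.findall(r"\bTable\s+(\d+)", text))),
        "n_figures": len(set(re.findall(r"\bFigure\s+(\d+)", text))),
    }
    for term in KEY_TERMS:
        indicators["mentions_" + term.replace(" ", "_")] = term in lowered
    return indicators


example = (
    "We found an effect, t(48) = 2.31, p = .025. "
    "Statistical power was low (see Table 1 and Figure 2)."
)
print(extract_t_tests(example))
print(rigor_indicators(example))
```

This extracts every test it can parse rather than only the focal ones, which is exactly the limitation noted above. The per-article indicators could then be joined with author or topic metadata for the third step.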

Note on power in intelligence research

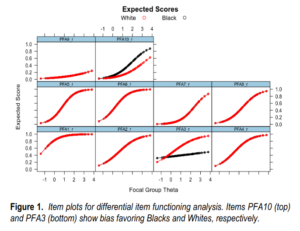

Nuijten et al (2018)’s table has some more subareas of intelligence research.

The findings here are not too surprising except that behavioral genetics has such a tiny power of 9.3%! To those who have been reading the behavioral genetics research over the years, this seems very implausible as findings have been replicating well since the 1960s (since the review by Erlenmeyer-Kimling and Jarvik (1963), cited by Jensen’s famous 1969 review). So what’s up? Over at Gelman’s blog, critic Zhou Fang did not like this post, writing:

Well, Kirkegaard’s links make that inference, but if you go to the source paper, “group differences” actually refers to methodology – specifically:

“group differences compare compare existing (non-manipulated) groups and typically use Cohen’s d or raw mean IQ differences as the key effect size.”

In other words, any study based on a t-test or ANOVA would typically give a high estimated effect size and hence high power. Examples of such studies include estimating IQ in the cases of people with schizophrenia, and gender differences amongst university students (without adjustment for covariates).

This is in stark contrast to “behaviour genetics” studies that attempt to link intelligence to genetic variations or estimate heritability, where Nuiljten estimates they have an average power of 9%.

One may speculate why this result was omitted from discussion.

In my reply, I said that I imagine Sean Last left it out because it was unclear which findings it referred to. As I wrote in my comment, the low power seen here probably results from the inclusion of useless candidate gene studies:

I did attempt to get a clarification on this some time ago (from Michèle). I speculate it is because they included the candidate gene studies in this, and these studies are super underpowered and generally completely useless. I think the regular behavioral genetics twin studies will have fairly recent power. E.g., tons of studies done using the TEDS sample, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3817931/, which has something like 10k twin pairs.

Zhou wants to portray this decision as very unscientific:

The more important point is that this rather establishes the original analysis has very little to do with your inference, and in fact on the face of it contradicts it, unless you “speculate” it away…

So I wrote to Michèle to ask, and her reply is here (posted with permission):

Hi Emil,

We included 5 meta-analyses that we labelled as behavior genetics.

Three of these are candidate gene studies:

Barnett, J. H., Scoriels, L., & Munafo, M. R. (2008). Meta-analysis of the cognitive effects of the catechol-O-methyltransferase gene val158/108Met polymorphism. Biological Psychiatry, 64(2), 137-144. doi:10.1016/j.biopsych.2008.01.005

Yang, L., Zhan, G.-d., Ding, J.-j., Wang, H.-j., Ma, D., Huang, G.-y., & Zhou, W.-h. (2013). Psychiatric Illness and Intellectual Disability in the Prader-Willi Syndrome with Different Molecular Defects – A Meta Analysis. Plos One, 8(8). doi:10.1371/journal.pone.0072640

Zhang, J.-P., Burdick, K. E., Lencz, T., & Malhotra, A. K. (2010). Meta-analysis of genetic variation in DTNBP1 and general cognitive ability. Biological Psychiatry, 68(12), 1126-1133. doi:10.1016/j.biopsych.2010.09.016

One is a candidate gene study involving twins:

Luciano, M., Lind, P. A., Deary, I. J., Payton, A., Posthuma, D., Butcher, L. M., . . . Plomin, R. (2008). Testing replication of a 5-SNP set for general cognitive ability in six population samples. European Journal of Human Genetics, 16(11), 1388-1395. doi:10.1038/ejhg.2008.100

The fifth one studies heritability with twins:

Beaujean, A. A. (2005). Heritability of cognitive abilities as measured by mental chronometric tasks: A meta-analysis. Intelligence, 33(2), 187-201. doi:10.1016/j.intell.2004.08.001

Hope this helps!

Best,

Michèle

So I was right, and correct in leaving it out.
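For intuition on why small candidate gene studies end up with so little power, here is a rough sketch of how power depends on sample size when the true effect is tiny. It uses the standard Fisher z approximation for a two-sided test of a correlation. The effect size and sample sizes are assumptions chosen for illustration, and this is not the model used in twin heritability analyses.

```python
from math import atanh, sqrt
from statistics import NormalDist


def correlation_power(r, n, alpha=0.05):
    """Approximate power of a two-sided test of H0: rho = 0.

    Uses the Fisher z transformation, under which atanh(sample r) is
    roughly normal with standard error 1 / sqrt(n - 3).
    """
    if n <= 3:
        raise ValueError("n must be greater than 3")
    z = NormalDist()
    crit = z.inv_cdf(1 - alpha / 2)   # two-sided critical value
    shift = atanh(r) * sqrt(n - 3)    # expected test statistic under r
    # Probability of landing in either rejection region.
    return z.cdf(shift - crit) + z.cdf(-shift - crit)


# Assumed true effect of r = .05; sample sizes are illustrative.
for n in (200, 1_000, 10_000):
    print(n, round(correlation_power(0.05, n), 3))
```

Under these assumptions, power stays low at a few hundred participants and only becomes high with samples in the thousands, which fits the pattern of underpowered candidate gene studies alongside large, well-powered twin samples.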