Yep, it’s another comment based on reading too much industrial and organizational psychology!

The Schmidt and Hunter approach to meta-analysis, AKA validity generalization theory (VG theory), AKA psychometric meta-analysis (PMA), differs from other approaches in that it explicitly considers data artifacts and biases and tries to correct for them (good introduction paper here, and the authors also made a great R package for doing these!). This may seem obvious to a working physical scientist. Surely, if we know about a bias in the data, we should correct for it and use the corrected data for downstream analyses. We are interested in reality, not in raw data. This attitude can be seen e.g. in climate science, where known sources of bias are adjusted for in the temperature data. For instance, population density (i.e., cities) introduces a small positive bias into temperature readings, insofar as these are supposed to measure the air temperature.
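In PMA, the textbook instance of such a correction is Spearman's correction for attenuation: the observed correlation is divided by the square root of the product of the two measures' reliabilities, giving an estimate of the correlation between the error-free constructs. Below is a minimal Python sketch of that formula alone; the function name and the example numbers are hypothetical, and real PMA software applies further corrections (e.g. for range restriction) on top of this.

```python
import math


def correct_for_attenuation(r_xy: float, r_xx: float, r_yy: float) -> float:
    """Spearman's correction for attenuation due to random measurement error.

    r_xy: observed correlation between measures X and Y
    r_xx, r_yy: reliabilities of X and Y (e.g. test-retest or Cronbach's alpha)
    Returns the estimated correlation between the error-free constructs.
    """
    if not (0 < r_xx <= 1 and 0 < r_yy <= 1):
        raise ValueError("reliabilities must be in (0, 1]")
    return r_xy / math.sqrt(r_xx * r_yy)


# Hypothetical numbers: an observed r of .30 with reliabilities of .80 and .70
r_true = correct_for_attenuation(0.30, 0.80, 0.70)
print(round(r_true, 3))
```

With reliabilities of .80 and .70, an observed r of .30 becomes roughly .40, which is why the reliability value one plugs in matters so much.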

Back to the world of PMA: to apply corrections to raw data, one usually needs some other data from the study. Specifically, to apply corrections for measurement error, one needs to know the reliability of the instruments. These corrections are concerned with random measurement error (or at least, almost random!), which is by definition not correlated with anything else, and thus only makes correlations weaker (but does not necessarily affect other regression estimates). The typical psychology study, however, does not report the kind of reliability estimate one wants, namely the test-retest reliability across a ‘short time’ (like 2 days). Instead, studies usually report internal reliability estimates, mostly just (Cronbach’s) alpha (and yes, there are others). This is alright, but often even this value is not reported, especially in older studies. Since scientists refuse to properly archive their data, or to share them (freely or at all) if they do archive them, we can’t easily (or at all) just acquire the data and do the missing calculations. So what do we do instead? Well, one option is to disregard the study entirely (casewise deletion). Another option is to use the study for part of our analysis, akin to pairwise deletion. However, the more sensible approach is usually to impute the data, i.e. fill in the missing values with a best guess based on the data we do have. In the PMA tradition, researchers always stress all these things above (well, not the part about not sharing data!). However, they then usually, strangely, go on to impute the mean reliability. This is a weird practice considering that mean imputation is known to have issues, as one can see by looking at related areas of science that also use data imputation (read any tutorial on imputation, like this or this). There are two main issues with mean imputation.
First, imputing the mean value reduces the variation in the variable, and this can affect other aspects of the analysis (standardized betas/correlations depend on the variation, and reducing it will bias the effect sizes). Generally, it will cause relations between variables to move closer to 0, so it is a downwards bias (the imputed means do not relate to anything).

Second, imputing the mean is biased if the data are not missing at random (I am deliberately avoiding the MCAR/MAR/MNAR jargon here!). In real life, data are generally not missing at random. Researchers didn’t flip a coin to decide whether to report a value or not; values are missing for some reason or other. In some cases, the reason is obvious: one cannot report a test-retest value if one did not test people twice. However, there’s no particular reason not to report internal reliability aside from poor memory or saving a bit of time/writing. That is, unless there is a reason. Maybe it was not reported because it was poor. Generally speaking, humans tend to under-report information that puts them in a negative light (self-serving bias). Generalized to science, this suggests that researchers will tend to under-report information about their work that is less than stellar. We of course already know this problem in various forms. One is publication bias, where people don’t publish all the work they did because the results didn’t come out as expected. But one can also have reporting bias within studies, where some methods or tests were tried but not reported because the results were unfavorable to the researchers’ pet theory, or simply worse than other method variants (the so-called garden of forking paths). Naturally, some skeptical people eventually started thinking about this, and they designed studies to look for evidence of this kind of under-reporting, finding plenty of it. Here are a few studies I have come across:

- Snyder, C., & Zhuo, R. (2018). Sniff Tests in Economics: Aggregate Distribution of Their Probability Values and Implications for Publication Bias (No. w25058). National Bureau of Economic Research.
- Brodeur, A., Cook, N., & Heyes, A. G. (2018). Methods matter: P-hacking and causal inference in economics.
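To make the two problems with mean imputation concrete, here is a small Python simulation under loudly hypothetical assumptions: 200 studies with true alphas around .80, and a made-up reporting rule in which alphas of .75 or higher are always reported while poorer ones are reported only 30% of the time. It compares the mean and spread of the true alphas with the reported and mean-imputed ones, then shows what the imputed value does to the attenuation correction for one study with a poor, unreported alpha.

```python
import random
import statistics

random.seed(1)

# Hypothetical setup: 200 studies whose true alphas are spread around .80
true_alphas = [min(0.98, max(0.40, random.gauss(0.80, 0.08))) for _ in range(200)]

# Assumed reporting rule (purely illustrative): studies with alpha >= .75
# always report it; studies with poorer alpha report it only 30% of the time.
reported = [a if a >= 0.75 or random.random() < 0.30 else None for a in true_alphas]

observed = [a for a in reported if a is not None]
mean_observed = statistics.mean(observed)

# Mean imputation: every missing alpha is replaced by the mean of the reported ones
imputed = [a if a is not None else mean_observed for a in reported]

print(f"true mean alpha:          {statistics.mean(true_alphas):.3f}")
print(f"mean of reported alphas:  {mean_observed:.3f}")
print(f"true SD of alpha:         {statistics.stdev(true_alphas):.3f}")
print(f"SD after mean imputation: {statistics.stdev(imputed):.3f}")

# Knock-on effect on the attenuation correction for one hypothetical study whose
# unreported alpha was actually .65 (other measure's reliability assumed .80)
r_obs = 0.30
print(f"corrected r with true alpha:    {r_obs / (0.65 * 0.80) ** 0.5:.3f}")
print(f"corrected r with imputed alpha: {r_obs / (mean_observed * 0.80) ** 0.5:.3f}")
```

Because the poor alphas are the ones that go missing, the mean of the reported alphas overestimates the typical reliability, and filling every gap with it shrinks the spread; the study with an unreported alpha of .65 also ends up under-corrected. The exact numbers depend on the seed and on the assumed reporting rule.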

To come back to the missing data problem in PMA, using mean imputation carries the risk that we are adjusting with the wrong values. Fortunately, it should be easy to test for this possibility by examining meta-analytic datasets and seeing whether missing-data status is related to other variables; if it is, those variables can be used to make the imputation more accurate. Alas, researchers in I/O psychology really don’t like sharing their detailed meta-analysis datasets. The best example of a dataset I can think of comes from the R package that implements PMA:

- Dahlke, J. A., & Wiernik, B. M. (2019). psychmeta: An R package for psychometric meta-analysis. Applied Psychological Measurement, 43(5), 415-416.

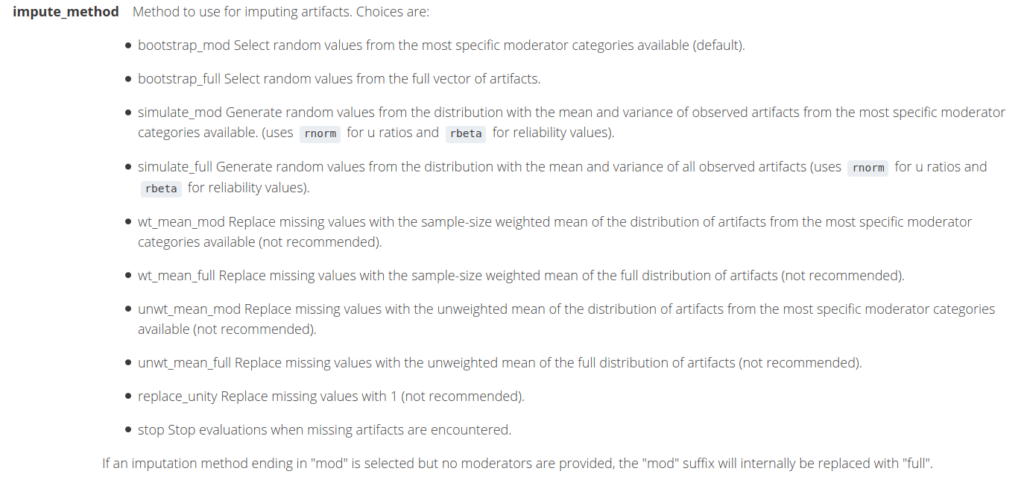
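The check suggested above, whether missing-data status relates to other study variables, can be sketched as a permutation test. This is a minimal Python sketch under stated assumptions: the function name is hypothetical, the covariate is publication year, and the twelve toy studies are made-up data, not taken from any real meta-analysis. It compares the mean covariate value between studies that did and did not report alpha, and asks how often randomly reshuffled missingness flags produce a difference at least as large.

```python
import random
import statistics


def missingness_permutation_test(covariate, is_missing, n_perm=5000, seed=0):
    """Does a study-level covariate differ between studies that did and
    did not report a reliability?

    Returns (observed mean difference missing - reported, two-sided p-value).
    """
    rng = random.Random(seed)

    def mean_diff(flags):
        miss = [c for c, f in zip(covariate, flags) if f]
        rep = [c for c, f in zip(covariate, flags) if not f]
        return statistics.mean(miss) - statistics.mean(rep)

    observed = mean_diff(is_missing)
    flags = list(is_missing)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(flags)  # break any link between missingness and the covariate
        if abs(mean_diff(flags)) >= abs(observed):
            extreme += 1
    # Add-one correction so the p-value is never exactly zero
    return observed, (extreme + 1) / (n_perm + 1)


# Hypothetical toy data: publication year and whether alpha went unreported
years = [1975, 1978, 1981, 1984, 1988, 1991, 1995, 1999, 2003, 2008, 2012, 2016]
missing = [True, True, True, False, True, False, False, True, False, False, False, False]
diff, p = missingness_permutation_test(years, missing)
print(f"mean year difference (missing - reported): {diff:.1f}, permutation p = {p:.3f}")
```

With a real dataset, one would repeat this for several covariates (sample size, publication year, the observed effect size). A clear relation is evidence against missingness being random and points to variables worth using in the imputation; a null result cannot rule out that missingness depends on the unreported alpha itself.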

While browsing this package again to write this post, I was happy to discover that it does feature proper imputation! The documentation for `control_psychmeta()`:

It also features a few real datasets one can try stuff on:

(I find these by looking at the package index and searching for “data”; this is easier to do inside RStudio.)

Anyway, I am happy that the field is moving away from this poor practice, but it does mean that some of the older work is somewhat questionable.