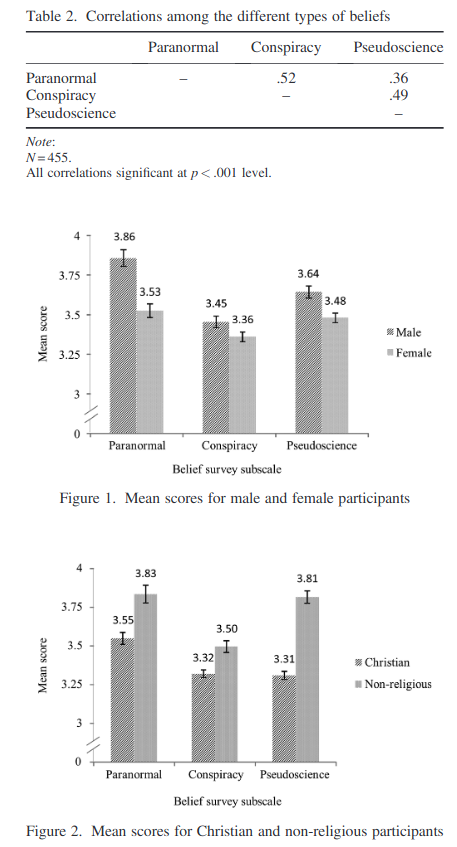

Rationality is great. It’s what separates humans from other species to a very large extent. There’s not one but two philosophical movements named after it (the rationalism of Descartes et al., and the rationalism of Sillycon Valley). One can easily find popular books by famous authors defending it, e.g. Steven Pinker’s Rationality: What It Is, Why It Seems Scarce, Why It Matters (2021), and Keith Stanovich’s The Rationality Quotient: Toward a Test of Rational Thinking (2016). The former is a review and a more philosophical defense of giving reason a lot of weight and becoming more rational. The latter is about an attempt at constructing a good test of it. I haven’t read the latter book yet, but the idea is simple enough. One takes a bunch of questions about base rate neglect, anchoring bias, outcome bias etc., the stuff that Wikipedia lists. Stanovich also published an article about this test (CART, Comprehensive Assessment of Rational Thinking), from which we can learn the content of the test:

Now, we might also ask: so who’s more rational, men or women? Surely, given that 1000s of studies have collected data on mixed-sex samples, someone must have looked into this question. After all, there are long running stereotypes of women being irrational. Here’s a Twitter poll:

(I would be interested in whether this stereotype is also endorsed by women. Anecdotally, a lot of women seem to realize this, but maybe they are not a majority. My followers are, of course, much more into these things, so we would expect their stereotypes to be particularly extreme.)

Trust the science, yes, but what does the science say? Not much apparently (look for yourself). I don’t see any meta-analyses of work on sex differences in rationality. In fact, studies that look at rationality tests rarely report the sex difference. For instance, this Dutch translation and short form of the above test from 2021 doesn’t report any sex difference (their sample is amusingly described as “[name of large institute for higher education], [name of European country]”; to be fair, this was a survey of engineering students, so there probably weren’t many women). Of course, this could be because it is not interesting (e.g. about zero). Or maybe because it is too interesting, indeed, inconvenient.

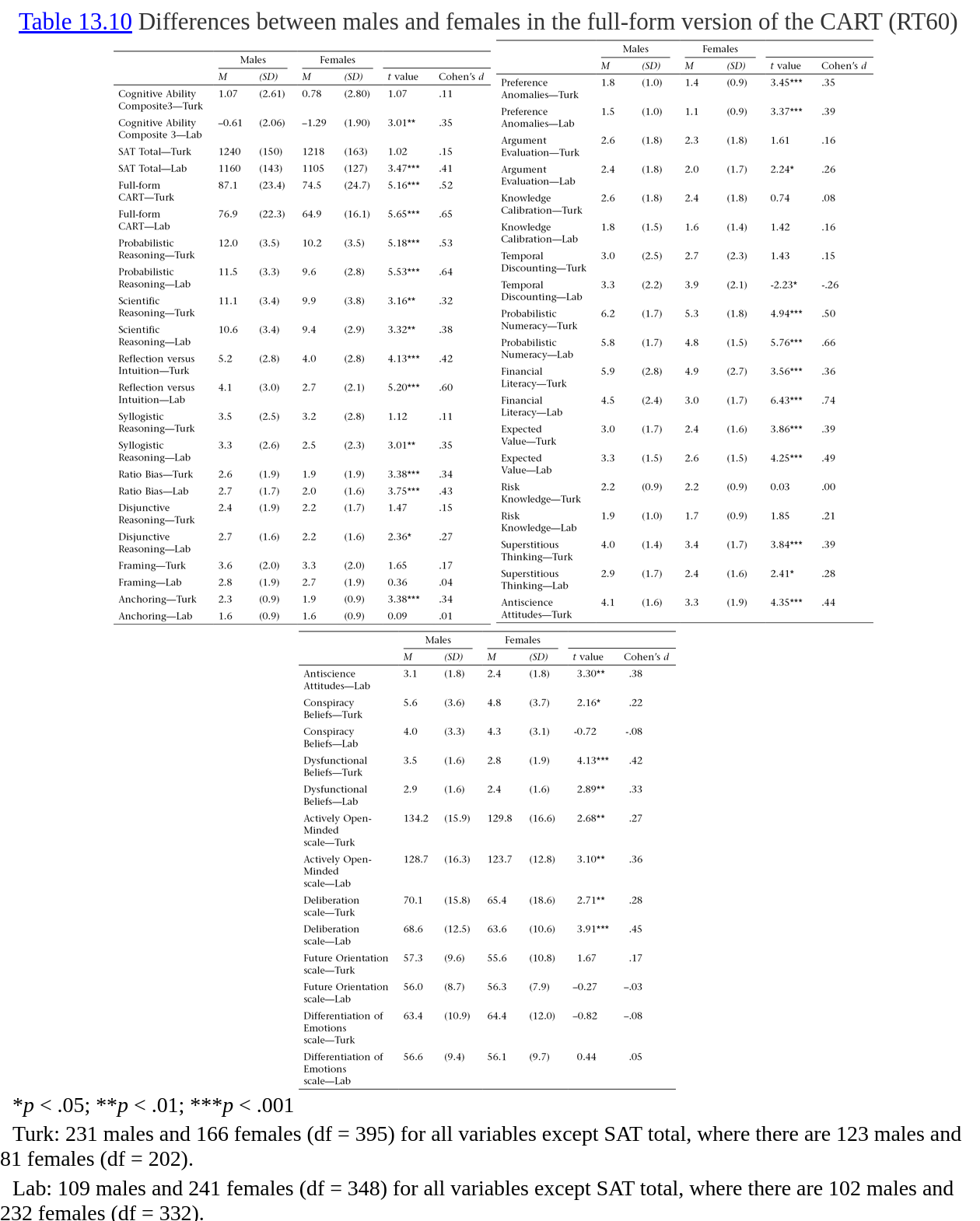

To his credit, Keith Stanovich does not shy away from the topic in his 2016 book. There is a section in chapter 13 about the sex differences. Here’s the table of differences:

So he has two samples: one of Amazon Mechanical Turk workers and one of students. The overall rationality score gaps are 0.52 d and 0.65 d. The advantages for men are distributed widely among the various kinds of questions, though the sample sizes are not impressively large, so these are somewhat imprecisely estimated. Stanovich notes:

Continuing down the table, it can be seen that the total score on the entire CART full form was higher for males than for females in both samples and the mean difference corresponded to a moderate effect size of 0.52 and 0.65, respectively. These were somewhat lower effect size sizes than were displayed in the study of the short form described in chapter 12 (0.82). Moving down the table, we see displayed the sex differences for each of the twenty subtests within each of the two samples. In thirty-eight of the forty comparisons the males outperformed the females, although this difference was not always statistically significant. There was one statistically significant comparison where females outperformed males: the Temporal Discounting subtest for the Lab sample (convergent with Dittrich & Leipold, 2014; Silverman, 2003a, 2003b). The differences favoring males were particularly sizable for certain subtests: the Probabilistic and Statistical Reasoning subtest, the Reflection versus Intuition subtest, the Practical Numeracy subtest, and the Financial Literacy and Economic Knowledge subtest. The bottom of the table shows the sex differences on the four thinking dispositions for each of the two samples. On two of the four thinking dispositions scales—the Actively Open-Minded Thinking scale and the Deliberative Thinking scale—males tended to outperform females.

So men did especially better on the more mathy parts of the rationality test. This is not so surprising considering that among adults men are better at math, especially more complicated math (women sometimes do better at arithmetic-like tasks).

Since Stanovich reports all the means and the correlation matrices, it is possible to investigate the measurement invariance of this difference, though it wasn’t done in the book. Task left for the reader. The topic of test bias is not discussed in the book as far as I can tell, except the usual disclaimers that the test was based on Western Eurocentric standards of rationality, you know, the kind that makes planes fly.

Sex differences in irrational beliefs

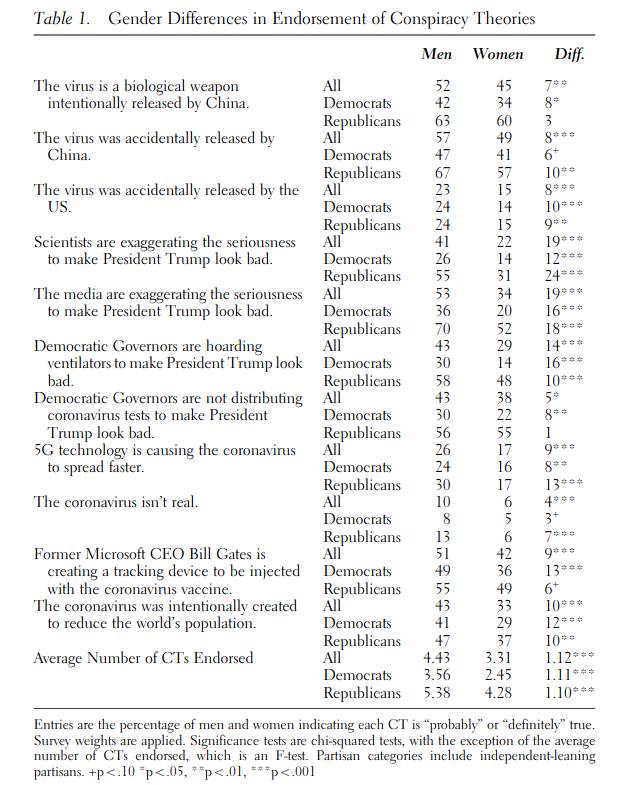

The rationality test above has questions with rather obvious true or false answers, but it also has questions about beliefs that Stanovich considers irrational. The idea here is simple enough. More rational people should be less likely to start believing weird things that don’t make, well, rational sense. So one can measure rationality indirectly by checking who believes irrational stuff. This indirect approach is susceptible to sampling bias, however. Clearly, one can find some topics where one sex is more apt to believe irrational things than others. Here’s an amusing study about COVID beliefs from 2020.

Basically, they show that men tended to believe everything counter-mainstream about COVID more, both the wacky stuff (“5G technology is causing the coronavirus to spread faster”) and the reasonable stuff (“The virus was accidentally released by China”). Still, many of their numbers are surprisingly, suspiciously high. One cannot attack the sample: it’s some 3000 representative Americans collected by a pollster (Lucid Theorem; never heard of it, but this guy looked at some of their survey data and found it reasonable).

Similarly, one can pick topics where women are more irrational. Here’s a Gallup survey from 2005 that often floats around on social media:

They only cover 5 beliefs, but 4 of them are in favor of men and involve patently irrational stuff like haunted houses (40% of women??!). The one belief men endorse more involves aliens, which is also by far the most scientifically plausible. Though the universe seems basically dead insofar as signs of intelligent life are concerned, there’s no possibility of knowing whether some aliens using advanced technology beyond our understanding previously visited Earth to have a look. Generally speaking, we can probably expect men to be more into sci-fi style irrational beliefs.

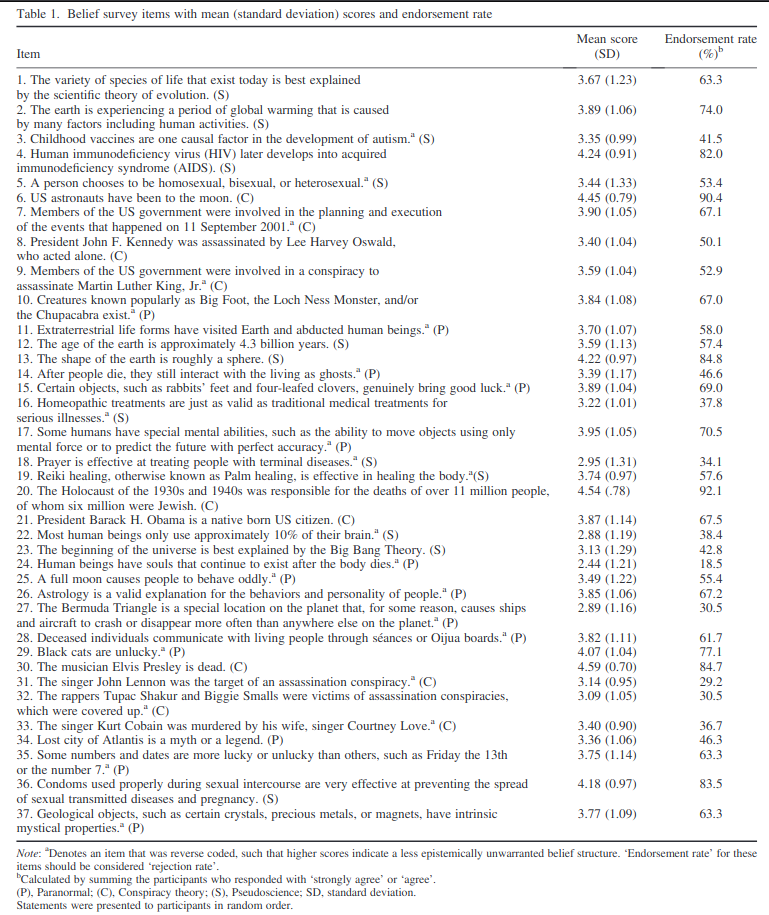

To properly estimate the sex difference in such beliefs, overall, it is important to sample widely in beliefs without trying to select ones that men or women are more apt to believe. This article does a reasonably good job:

- Lobato, E., Mendoza, J., Sims, V., & Chin, M. (2014). Examining the relationship between conspiracy theories, paranormal beliefs, and pseudoscience acceptance among a university population. Applied Cognitive Psychology, 28(5), 617-625.

Very little research has investigated whether believing in paranormal, conspiracy, and pseudoscientific claims are related, even though they share the property of having no epistemic warrant. The present study investigated the association between these categories of epistemically unwarranted beliefs. Results revealed moderate to strong positive correlations between the three categories of epistemically unwarranted beliefs, suggesting that believers in one type tended to also endorse other types. In addition, one individual difference measure, looking at differences in endorsing ontological confusions, was found to be predictive of both paranormal and conspiracy beliefs. Understanding the relationship between peoples’ beliefs in these types of claims has theoretical implications for research into why individuals believe empirically unsubstantiated claims.

Here’s how they measured it:

Ontological confusion was examined using an English version of the CORE scale developed by Lindeman and colleagues (α = .93; Lindeman & Aarnio, 2007; Lindeman et al., 2011). This was a 30-item scale examining different varieties of core ontological confusions (e.g., Natural, lifeless objects are living; artificial objects are animate). Participants were presented with a statement representing one of the types of core ontological confusions and were asked to determine whether the statement was literally true or not literally true. An example of statements in this scale is ‘Stars live in the sky’, which describes a physical entity (i.e., stars) as possessing a biological property (i.e., life). Eight filler items were included in the scale.

A novel 37-item questionnaire investigating acceptance of epistemically unwarranted beliefs was created for this study (see Table 1; hereafter, Belief survey). Many items used in this questionnaire were adopted from other scales, such as the Revised Paranormal Belief Scale (Tobacyk, 2004), and studies on conspiracy theory acceptance such as Goertzel (1994) and Abalakina-Paap et al. (1999). A few additional items not previously investigated in the existing literature were included. All items were a priori defined as paranormal, conspiracy, or pseudoscientific. Participants were instructed to read each statement and select on a five-point scale how much they agree or disagree with the claim (1 = strongly disagree and 5 = strongly agree). For analysis, scores were recoded such that a higher score reflected a greater rejection of the epistemically unwarranted belief.

Here’s their list of bad beliefs:

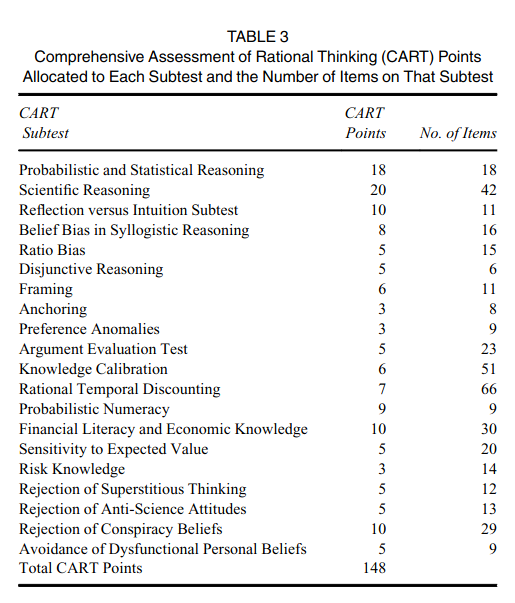

They grouped them into 3 categories: paranormal, conspiracy, and pseudoscience. And the results:

Naturally, the three groupings themselves correlate, showing us the existence of the assumed general factor of rationality. I guess we can call it big R. Being male correlated r = .25 with R. Since male/female is a binary indicator, they should have computed Cohen’s d instead. Cohen’s d is roughly twice the correlation when the two groups are the same size, and somewhat larger when the split is uneven, so d = 0.55 or so (this sample is psychology students). Not surprisingly, less religious people scored better as well, even on beliefs unrelated to religion. Reassuringly, this difference is about the same as the one the direct rationality test finds: roughly 0.50 d. So if this were on the IQ scale, men would score about 7.5 RQ points higher than women. This is a fairly large gap, about the same as that between American Hispanics and Whites.
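For anyone who wants to check the arithmetic, here is a minimal sketch of the conversion from a point-biserial correlation to Cohen’s d, and from d to IQ-style points. It uses the standard textbook approximation, not anything computed in the paper. The function names and the 30/70 group split are my own assumptions for illustration; the paper’s actual sex ratio is not given here.

```python
import math


def r_to_d(r: float, p: float = 0.5) -> float:
    """Convert a point-biserial correlation to Cohen's d.

    p is the proportion of the sample in one of the two groups.
    With p = 0.5 this reduces to the familiar d = 2r / sqrt(1 - r^2).
    """
    if not 0.0 < p < 1.0:
        raise ValueError("p must be strictly between 0 and 1")
    if not -1.0 < r < 1.0:
        raise ValueError("r must be strictly between -1 and 1")
    return r / math.sqrt(p * (1.0 - p) * (1.0 - r ** 2))


def d_to_scale_points(d: float, sd: float = 15.0) -> float:
    """Express a standardized gap on a scale with the given SD (IQ uses 15)."""
    return d * sd


# Equal group sizes: d is about twice r.
print(round(r_to_d(0.25), 2))          # 0.52
# Hypothetical 30/70 split (assumed, not from the paper): d is a bit larger.
print(round(r_to_d(0.25, p=0.3), 2))   # 0.56
# A gap of 0.50 d on an IQ-style scale.
print(d_to_scale_points(0.50))         # 7.5
```

The more lopsided the groups, the more a given r understates the standardized gap, which is why d is the more natural statistic for a binary predictor.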

Social consequences of more women in power

Everybody knows that rationality is important for social policies. Poor policy decisions lead to wasted money and potentially counterproductive policies (doing the opposite of what was intended). Indeed, some ruthless people have looked at this before. For instance, if one trusts economists to work out generally how economics works and to have more rational beliefs about economic policy, then we can use economists’ beliefs about economic policy as a benchmark to evaluate men and women. Bryan Caplan & coauthor did this in a 2010 paper. They find that being male is statistically associated with agreeing more with economists in 15 cases and agreeing less in 1 case, out of a total of 34. There are also some studies showing that the introduction of the female vote led to increased government spending, despite this being bad for economic growth (i.e. short-sighted).

In the last 100 years or so, men have decided to give women more say in how things are run. In the last few decades, this has also led to their entrance into the job market and, increasingly, into positions of power. This is true both for national governments and for various industries of high importance. In the military, this has been an amusing disaster. Noah Carl has written about how Wokeness in academia is associated especially strongly with women and ethnic groups with lower intelligence, the demographics with lower levels of rationality. The link between intelligence and rationality is actually very strong, though the two are probably distinct. Keith Stanovich has previously (2010) written a book on this topic, trying to make the case for rationality testing beyond intelligence testing. However, in his 2016 book, he found that the score on his own rationality test correlated about .70 with IQ. This is without adjusting for measurement error, so the true correlation might well be .85. Stuart Ritchie has an amazing book review about this. This still leaves some space for rationality. Everybody knows someone who is very bright but somehow fails at rationality. Why is that? I would think this would relate to the P factor of mental illness, but I am not familiar with any evidence about this. I would also wager that autism tends to relate positively to rationality, hence all the autists in the HBD movement.
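The jump from an observed .70 to a possible true correlation of .85 comes from the classical correction for attenuation, which divides the observed correlation by the square root of the product of the two tests’ reliabilities. Here is a minimal sketch. The reliabilities of 0.80 and 0.85 are assumed values chosen for illustration, not figures from Stanovich’s book, and the function name is mine.

```python
import math


def disattenuate(r_observed: float, rel_x: float, rel_y: float) -> float:
    """Spearman's correction for attenuation.

    r_observed: correlation between the two observed test scores.
    rel_x, rel_y: reliabilities of the two tests (each in (0, 1]).
    """
    if not (0.0 < rel_x <= 1.0 and 0.0 < rel_y <= 1.0):
        raise ValueError("reliabilities must be in (0, 1]")
    return r_observed / math.sqrt(rel_x * rel_y)


# Assumed reliabilities (illustrative only): 0.80 for the rationality test,
# 0.85 for the IQ measure.
print(round(disattenuate(0.70, 0.80, 0.85), 2))  # 0.85
```

With perfectly reliable tests the correction does nothing, and the less reliable the measures, the larger the upward adjustment.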