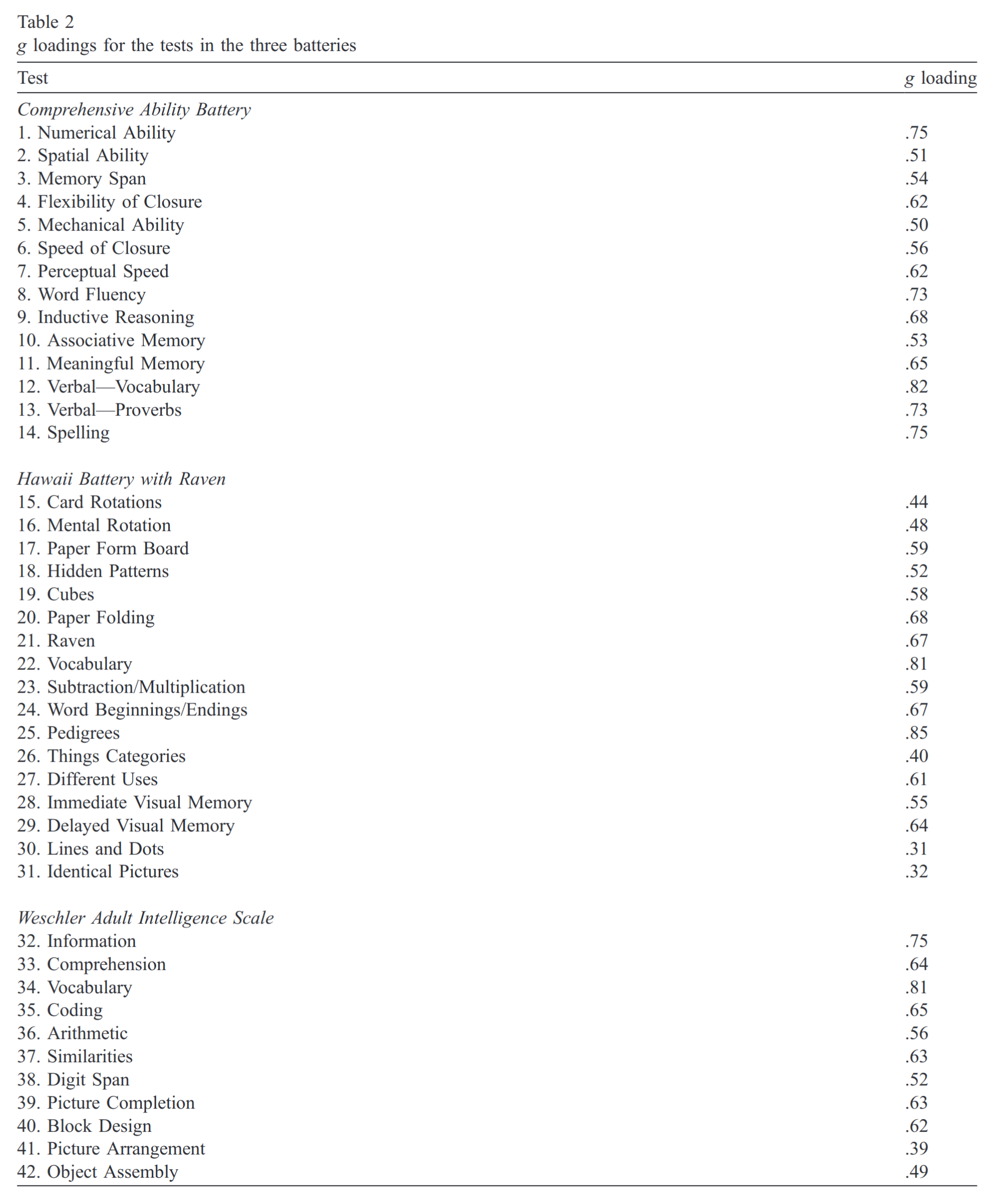

There’s 100s of different mental tests, all of which measure intelligence to some degree or another. However, there is quite the difference between the degree to which the tests measure intelligence. Intelligence is here operationally defined as the general factor of the matrix, which we label g. Ideally, to figure out which test has the highest g-loading, we need a dataset that gave every mental test to a large group of people at once. Since this doesn’t exist, we have to rely on studies where a large pool of tests were given. This approach is somewhat suboptimal because the composition of a test battery will somewhat influence the observed g factor. For instance, if I administered a battery where 10 of 12 tests are tests of verbal ability, then the g factor extracted from this battery will have a verbal ability bias. One can attempt to remove this using a more advanced method than exploratory factor analysis (EFA), where one introduces group factors, which some studies have done.

So what are the results from some studies where they gave large numbers of mental tests? I looked around and found a few:

- Johnson, W., Bouchard Jr, T. J., Krueger, R. F., McGue, M., & Gottesman, I. I. (2004). Just one g: Consistent results from three test batteries. Intelligence, 32(1), 95-107.

The concept of a general intelligence factor or g is controversial in psychology. Although the controversy swirls at many levels, one of the most important involves g’s identification and measurement in a group of individuals. If g is actually predictive of a range of intellectual performances, the factor identified in one battery of mental ability tests should be closely related to that identified in another dissimilar aggregation of abilities. We addressed the extent to which this prediction was true using three mental ability batteries administered to a heterogeneous sample of 436 adults. Though the particular tasks used in the batteries reflected varying conceptions of the range of human intellectual performance, the g factors identified by the batteries were completely correlated (correlations were .99, .99, and 1.00). This provides further evidence for the existence of a higher-level g factor and suggests that its measurement is not dependent on the use of specific mental ability tasks.

Their results:

- Johnson, W., te Nijenhuis, J., & Bouchard Jr, T. J. (2008). Still just 1 g: Consistent results from five test batteries. Intelligence, 36(1), 81-95.

In a recent paper, Johnson, Bouchard, Krueger, McGue, and Gottesman (2004) addressed a long-standing debate in psychology by demonstrating that the g factors derived from three test batteries administered to a single group of individuals were completely correlated. This finding provided evidence for the existence of a unitary higher-level general intelligence construct whose measurement is not dependent on the specific abilities assessed. In the current study we constructively replicated this finding utilizing five test batteries. The replication is important because there were substantial differences in both the sample and the batteries administered from those in the original study. The current sample consisted of 500 Dutch seamen of very similar age and somewhat truncated range of ability. The batteries they completed included many tests of perceptual ability and dexterity, and few verbally oriented tests. With the exception of the g correlations involving the Cattell Culture Fair Test, which consists of just four matrix reasoning tasks of very similar methodology, all of the g correlations were at least .95. The lowest g correlation was .77. We discuss the implications of this finding.

Results:

- Gignac, G. E. (2015). Raven’s is not a pure measure of general intelligence: Implications for g factor theory and the brief measurement of g. Intelligence, 52, 71-79.

It has been claimed that Raven’s Progressive Matrices is a pure indicator of general intelligence (g). Such a claim implies three observations: (1) Raven’s has a remarkably high association with g; (2) Raven’s does not share variance with a group-level factor; and (3) Raven’s is associated with virtually no test specificity. The existing factor analytic research relevant to Raven’s and g is very mixed, likely because of the variety of factor analytic techniques employed, as well as the small sample sizes upon which the analyses have been performed. Consequently, the purpose of this investigation was to estimate the association between Raven’s and g, Raven’s and a theoretically congruent group-level factor, and Raven’s test specificity within the context of a bifactor model. Across several large samples, it was observed that Raven’s (1) shared approximately 50% of its variance with g; (2) shared approximately 10% of its variance with a fluid intelligence group-level factor orthogonal to g; and (3) was associated with approximately 25% test specific reliable variance. Overall, the results are interpreted to suggest that Raven’s is not a particularly remarkable test with respect to g. Potential implications relevant to the commonly articulated central role of Raven’s in g factor theory, as well as the Flynn effect, are discussed. Finally, researchers are discouraged to include only Raven’s in an investigation, if a valid estimate of g is sought. Instead, as just one example, a four-subtest combination from the Wechsler scales with a g validity coefficient of .93 and 14 min administration time is suggested.

First set of results:

Second set of results (note this overlaps with Johnson above):

Third set of results (note this overlaps with Johnson above):

Fourth set of results:

One could go on, digging up more matrices, and calculating g-loadings across many batteries, so that one can average them. That exercise is left for the future. Here I will merely note some results of interest regarding the highest g-loadings:

- Vocabulary:

- In the comprehensive ability battery, vocabulary has a g-loading of .82 the highest in the battery.

- In the Hawaii battery with raven, vocabulary has a g-loading of .81, the 2nd highest in the battery (pedigrees .85).

- In the WAIS, vocabulary has a g-loading of .81, the highest in the battery.

- In the test battery of the Royal Dutch navy, “verbal” has a g-loading of .67, only slightly below the highest of .70 (“computation part 2”).

- In the general aptitude test battery, vocabulary has a g-loading of .65, second only to arithmetic which has .66.

- In the Marshalek study, vocabulary has a loading of .65, below the best test which was arithmetic concepts, which has .85.

- Arithmetic:

- In the comprehensive ability battery, “numerical ability” has a g-loading of .77, below the .82 from vocabulary.

- In the Hawaii battery with raven, subtraction/multiple has a g-loading of .59, below .81 from vocabulary.

- In the WAIS, arithmetic has a g-loading of .56, below vocabulary of .81.

- In the test battery of the Royal Dutch navy, the highest g-loading is .70 “computation part 2”, and “computation part 1” .69.

- In the general aptitude test battery, arithmetic has the highest g-loading, .66.

- In the Marshalek study, the best test is arithmetic concepts, which has .85.

- In the Gustafsson study, mathematics has a g-loading of .79. the highest in the battery together with number series (also .79).

Overall, it seems vocabulary is the single best way to measure intelligence in terms of g-loading. The chief other benefits of vocabulary tests is that they are fast to administer, and not stressful for the subjects. The chief disadvantage is that they are prone to bias, both with regards to age and non-native speakers.

Since research samples are increasingly composed of non-native speakers, it may be wise to use a non-verbal test. For this purpose, arithmetic and number series seem to have the highest g-loadings. In fact, looking at the above studies, they also has the advantage of having very weak group factor loadings. In the Gustafsson study, number series has no group factor loading whereas mathematics only has .15. In the Marshalek study, arithmetic operations has group factor loading of -.02. In the MISTRA, numerical ability has no group factor loading. In the second part of MISTRA, arithmetic has a group factor loading of .18. Insofar as these 4 bi-factor studies are concerned, then, arithmetic and number series seem to be both relatively good measures of g, and relatively pure, as in not measuring a secondary construct much. The other advantage of these tests is then that they are relatively universally applicable, requiring no advanced mathematical training or particular native language. For the purposes of designing a relatively universal and highly valid g test, then one should aim to construct computer adaptive versions of these tests.

Why is vocabulary so highly g-loaded?

Jensen, as usual, supplied us with an answer and even a conclusion (Jensen 1980):

Vocabulary. Word knowledge figures prominently in standard tests. The scores on the vocabulary subtest are usually the most highly correlated with total IQ of any of the other subtests. This fact would seem to contradict Spearman’s important generalization that intelligence is revealed most strongly by tasks calling for the eduction of relations and correlates. Does not the vocabulary test merely show what the subject has learned prior to taking the test? How does this involve reasoning or eduction?

In fact, vocabulary tests are among the best measures of intelligence, because the acquisition of word meanings is highly dependent on the eduction of meaning from the contexts in which the words are encountered. Vocabulary for the most part is not acquired by rote memorization or through formal instruction. The meaning of a word most usually is acquired by encountering the word in some context that permits at least some partial inference as to its meaning. By hearing or reading the word in a number of different contexts, one acquires, through the mental processes of generalization and discrimination and eduction, the essence of the word’s meaning, and one is then able to recall the word precisely when it is appropriate in a new context. Thus the acquisition of vocabulary is not as much a matter of learning and memory as it is of generalization, discrimination, eduction, and inference. Children of high intelligence acquire vocabulary at a faster rate than children of low intelligence, and as adults they have a much larger than average vocabulary, not primarily because they have spent more time in study or have been more exposed to words, but because they are capable of educing more meaning from single encounters with words and are capable of discriminating subtle differences in meaning between similar words. Words also fill conceptual needs, and for a new word to be easily learned the need must precede one’s encounter with the word. It is remarkable how quickly one forgets the definition of a word he does not need. I do not mean “ need” in a practical sense, as something one must use, say, in one’s occupation; I mean a conceptual need, as when one discovers a word for something he has experienced but at the time did not know there was a word for it. Then when the appropriate word is encountered, it “ sticks” and becomes a part of one’s vocabulary. Without the cognitive “need,” the word may be just as likely to be encountered, but the word and its context do not elicit the mental processes that will make it “ stick.”

During childhood and throughout life nearly everyone is bombarded by more different words than ever become a part of the person’s vocabulary. Yet some persons acquire much larger vocabularies than others. This is true even among siblings in the same family, who share very similar experiences and are exposed to the same parental vocabulary.

Vocabulary tests are made up of words that range widely in difficulty (percentage passing); this is achieved by selecting words that differ in frequency of usage in the language, from relatively common to relatively rare words. (The frequency of occurrence of each of 30,000 different words per 1 million words of printed material—books, magazines, and newspapers—has been tabulated by Thorndike and Lorge, 1944.) Technical, scientific, and specialized words associated with particular occupations or localities are avoided. Also, words with an extremely wide scatter of “ passes” are usually eliminated, because high scatter is one indication of unequal exposure to a word among persons in the population because of marked cultural, educational, occupational, or regional differences in the probability of encountering a particular word. Scatter shows up in item analysis as a lower than average correlation between a given word and the total score on the vocabulary test as a whole. To understand the meaning of scatter, imagine that we had a perfect count of the total number of words in the vocabulary of every person in the population. We could also determine what percentage of all persons know the meaning of each word known by anyone in the population. The best vocabulary test limited to, say, one hundred items would be that selection of words the knowledge of which would best predict the total vocabulary of each person. A word with wide scatter would be one that is almost as likely to be known by persons with a small total vocabulary as by persons with a large total vocabulary, even though the word may be known by less than 50 percent of the total population. Such a wide-scatter word, with about equal probability of being known by persons of every vocabulary size, would be a poor predictor of total vocabulary. It is such words that test constructors, by statistical analyses, try to detect and eliminate.

It is instructive to study the errors made on the words that are failed in a vocabulary test. When there are multiple-choice alternatives for the definition of each word, from which the subject must discriminate the correct answer among the several distractors, we see that failed items do not show a random choice among the distractors. The systematic and realiable differences in choice of distractors indicate that most subjects have been exposed to the word in some context, but have inferred the wrong meaning. Also, the fact that changing the distractors in a vocabulary item can markedly change the percentage passing further indicates that the vocabulary test does not discriminate simply between those persons who have and those who have not been exposed to the words in context. For example, the vocabulary test item erudite has a higher percentage of errors if the word polite is included among the distractors; the same is true for mercenary when the words stingy and charity are among the distractors; and stoical – sad, droll – eerie, fecund – odor, fatuous – large.

Another interesting point about vocabulary tests is that persons recognize many more of the words than they actually know the meaning of. In individual testing they often express dismay at not being able to say what a word means, when they know they have previously heard it or read it any number of times. The crucial variable in vocabulary size is not exposure per se, but conceptual need and inference of meaning from context, which are forms of eduction. Hence, vocabulary is a good index of intelligence.

Picture vocabulary tests are often used with children and nonreaders. The most popular is the Peabody Picture Vocabulary Test. It consists of 150 large cards, each containing four pictures. With the presentation of each card, the tester says one word (a common noun, adjective, or verb) that is best represented by one of the four pictures, and the subject merely has to point to the appropriate picture. Several other standard picture vocabulary tests are highly similar. All are said to measure recognition vocabulary, as contrasted to expressive vocabulary, which requires the subject to state definitions in his or her own words. The distinction between recognition and expressive vocabulary is more formal than psychological, as the correlation between the two is close to perfect when corrected for errors of measurement.