Abstract

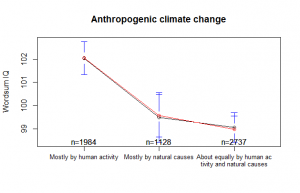

Using data from the ANES 2012 survey, I investigate the relationship between wordsum IQ estimates (unweighted sums, factor analytic and item response theory scores) and opinions about nuclear energy and global warming, as well as related beliefs. Proponents of nuclear energy were found to have somewhat higher IQs (103) than those who think we should have fewer (100) or the same as now (99). Surprisingly, IQ did not have much effect on belief in global warming (100.5 vs. 99.5), but it did show effects for the related beliefs.

Introduction

In my previous post (Kirkegaard, 2015), I reviewed data about education and beliefs about nuclear energy/power. In this post, I report some results for intelligence as measured by wordsum. The wordsum is a 10-word vocabulary test widely given in US surveys. It correlates .71 with a full-scale IQ test (Wolfle, 1980), so it is a decent proxy at the group level, although not that good at the individual level. It is short and innocuous enough that it can be given as part of larger surveys, and its psychometric properties are strong enough to make it useful to researchers. Win-win.

The data

The data come from the ANES 2012 survey (ANES, 2012), a large survey given to about 6000 US citizens. The datafile has 2240 variables of various kinds.

Method and results

The analysis was done in R.

The datafile does not come with a summed wordsum score, so one must compute it oneself, which involves recoding the 10 variables. In doing so, I noticed that one of the words (#5, labeled “g”) has a very strong distractor: 43% pick option 5 (the correct one), while 49% pick option 2. This must be either a very popular confusion (which would make it not a confusion at all, because if a word is commonly used to mean x, then that is what it means, in addition to any other meanings), an ambiguous word, or an item with two reasonable answers. Clearly, something is strange. For this reason, I downloaded the questionnaire itself. However, the word options have been redacted!

I don’t know whether this is for copyright reasons or test-integrity reasons. The first would be very silly, since the test is merely 10 lists of 1+6 words, hardly copyrightable. The second is more open to discussion. How many people actually check the questionnaire so that they can get the word quiz right? It can’t be that many, but perhaps a few. Anyway, if the alternative is to change the words every year to avoid possible cheating, redaction is the better option: changing the words would make between-year results less easily comparable, even if care is taken in choosing similar words. Still, because of the odd distractor pattern, I decided to analyze the test both with and without the odd item.
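A distractor problem like the one with item 5 can be spotted directly from the raw response codes. The following is a minimal Python sketch, not the author's R code. It assumes each item's responses are stored as option numbers with missing data as None; `option_shares` and `strong_distractors` are hypothetical helper names, and the toy data only mimic the 43%/49% split reported above.

```python
from collections import Counter


def option_shares(responses):
    """Share of valid responses choosing each option (None = missing, skipped)."""
    valid = [r for r in responses if r is not None]
    if not valid:
        return {}
    counts = Counter(valid)
    return {option: n / len(valid) for option, n in counts.items()}


def strong_distractors(responses, key):
    """Wrong options chosen at least as often as the keyed (correct) option."""
    shares = option_shares(responses)
    key_share = shares.get(key, 0.0)
    return sorted(o for o, s in shares.items() if o != key and s >= key_share)


# Toy data (not ANES): 43 pick the key (5), 49 pick option 2, 8 pick option 1.
toy = [5] * 43 + [2] * 49 + [1] * 8 + [None] * 5
print(strong_distractors(toy, key=5))  # [2]
```

Any option that draws at least as many respondents as the key is a candidate for the kind of ambiguity discussed above.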

First, I recoded the data. For the vocabulary items, any response other than the correct one was coded as 0, and the correct one as 1. For the policy and science questions, I recoded the missing/soft refusal and (hard?) refusal codes as NA (missing data).
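The recoding rules above can be sketched in Python as follows. This is illustrative only: the answer key `KEYS` is hypothetical (the real options are redacted), and treating negative codes as missing/refusal is an assumption about how the datafile encodes them that should be checked against the codebook.

```python
def score_item(response, key):
    """Vocabulary item: 1 if the keyed option was chosen, otherwise 0.
    Any other response, including refusals, counts as incorrect."""
    return 1 if response == key else 0


def recode_opinion(code):
    """Policy/science item: map missing and refusal codes to None (NA).
    ASSUMPTION: negative codes mark missing/soft refusal/refusal."""
    return None if code < 0 else code


# HYPOTHETICAL answer key for the 10 items (the real options are redacted).
KEYS = [3, 1, 4, 2, 5, 2, 6, 1, 3, 4]


def score_respondent(responses, keys=KEYS):
    """0/1 scores for one respondent's 10 vocabulary responses."""
    return [score_item(r, k) for r, k in zip(responses, keys)]
```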

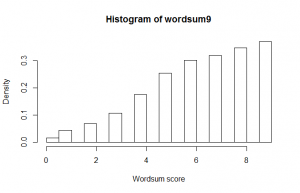

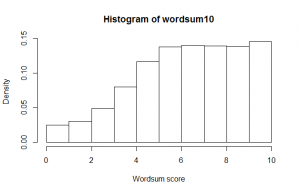

Second, for each case, I summed the number of correct responses. Histograms:

The test shows a very strong ceiling effect. They need to add more difficult words. They can do this without harming comparability with older datasets, since one can simply exclude the newer, harder words when doing longitudinal comparisons.
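Summing and checking the ceiling can be sketched in Python like this (hypothetical helper names; the author's analysis was in R). The 9-item version simply drops word #5, which is index 4 when counting from zero. The share of respondents at the maximum score is one simple way to put a number on the ceiling effect.

```python
def wordsum(item_scores):
    """Unit-weighted sum of 0/1 item scores for one respondent."""
    return sum(item_scores)


def drop_item(item_scores, index=4):
    """Item scores without one item; index 4 is word #5 (zero-based)."""
    return item_scores[:index] + item_scores[index + 1:]


def ceiling_share(totals, max_score):
    """Fraction of respondents who got every item right."""
    return sum(1 for t in totals if t == max_score) / len(totals)


scores = [1, 1, 1, 1, 0, 1, 1, 1, 1, 1]  # toy respondent who missed only word #5
print(wordsum(scores), wordsum(drop_item(scores)))  # 9 9
```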

Third, I examined internal reliability using alpha() from the psych package. This revealed that the average inter-item correlation would increase if the odd item was dropped, though the effect was small: from .25 to .27.

Fourth, I ran both standard and item response theory (IRT) factor analysis on the 10- and 9-item datasets, extracting one factor each time. The odd item had the lowest loading under both methods (classical: median loading = .51, odd item = .37; IRT: median loading = .68, odd item = .44).
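The idea of flagging the lowest-loading item can be illustrated without the data. The sketch below does not reproduce the classical or IRT factor analyses used here (done in R). As a simpler stand-in, it computes loadings on the first principal component of an item correlation matrix (eigenvector scaled by the square root of its eigenvalue, found by power iteration). Component loadings are typically somewhat larger than common-factor loadings, so the numbers would not match those above; the matrices in the example are made up.

```python
import math


def first_component_loadings(R, iterations=500):
    """Loadings on the first principal component of correlation matrix R,
    via power iteration (stand-in for one-factor FA, not the same model)."""
    k = len(R)
    v = [1.0] * k
    for _ in range(iterations):
        w = [sum(R[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient of the converged unit vector gives its eigenvalue.
    Rv = [sum(R[i][j] * v[j] for j in range(k)) for i in range(k)]
    eigenvalue = sum(v[i] * Rv[i] for i in range(k))
    sign = 1.0 if sum(v) >= 0 else -1.0  # report loadings as mostly positive
    return [sign * x * math.sqrt(eigenvalue) for x in v]


# Made-up matrix: item 2 correlates weakly with the other two.
R = [[1.0, 0.6, 0.2],
     [0.6, 1.0, 0.2],
     [0.2, 0.2, 1.0]]
loadings = first_component_loadings(R)
weakest = loadings.index(min(loadings))  # index of the lowest-loading item
```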

Fifth, I correlated the scores from all of these methods with each other: classical FA on 10 and 9 items, IRT FA on 10 and 9 items, and unit-weighted sums of 10 and 9 items. The results are in the table below.

|  | fa.10 | fa.9 | fa.irt.10 | fa.irt.9 | sum10 | sum9 |
|---|---|---|---|---|---|---|
| fa.10 | 1.00 | 0.99 | 0.91 | 0.90 | 0.98 | 0.99 |
| fa.9 | 0.99 | 1.00 | 0.87 | 0.88 | 0.96 | 0.98 |
| fa.irt.10 | 0.91 | 0.87 | 1.00 | 0.98 | 0.94 | 0.91 |
| fa.irt.9 | 0.90 | 0.88 | 0.98 | 1.00 | 0.92 | 0.92 |
| sum10 | 0.98 | 0.96 | 0.94 | 0.92 | 1.00 | 0.98 |
| sum9 | 0.99 | 0.98 | 0.91 | 0.92 | 0.98 | 1.00 |

Surprisingly, the IRT and non-IRT scores did not correlate that strongly, only around .90 (range .87 to .94). The other pairs correlated near 1 (range .96 to .99). The scores with and without the odd item correlated strongly, though, so for the rest of the analyses I used the 10-item versions. Because of the rather ‘low’ correlations between the IRT and classical estimates, I retained both the IRT and classical FA scores for further analysis.
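The ranges quoted above can be checked directly against the table. A small Python sketch, with the upper-triangle values copied row by row from the table:

```python
from itertools import combinations
from statistics import mean

NAMES = ["fa.10", "fa.9", "fa.irt.10", "fa.irt.9", "sum10", "sum9"]
# Upper triangle of the table, read row by row (same order as combinations()).
UPPER = [0.99, 0.91, 0.90, 0.98, 0.99,   # fa.10 vs the rest
         0.87, 0.88, 0.96, 0.98,         # fa.9 vs the rest
         0.98, 0.94, 0.91,               # fa.irt.10 vs the rest
         0.92, 0.92,                     # fa.irt.9 vs the rest
         0.98]                           # sum10 vs sum9
R = dict(zip(combinations(NAMES, 2), UPPER))


def is_irt(name):
    return "irt" in name


mixed = [v for (a, b), v in R.items() if is_irt(a) != is_irt(b)]  # IRT vs non-IRT
same = [v for (a, b), v in R.items() if is_irt(a) == is_irt(b)]   # all other pairs
print(min(mixed), max(mixed), min(same), max(same))  # 0.87 0.94 0.96 0.99
```

The mean of the IRT vs. non-IRT correlations works out to about .91.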

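Sixth, I converted both sets of factor scores to the standard IQ format (mean = 100, SD = 15). Since the IRT FA output scores were not on a standard scale, I first standardized them using scale(), which rescales to mean 0 and SD 1 (it does not change the shape of the distribution).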
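The conversion is a linear rescaling: IQ = 100 + 15 * z, where z is the standardized score. A minimal Python sketch follows (hypothetical function name); like R's scale(), it divides by the sample SD (n - 1 denominator). Being linear, it cannot make a skewed distribution normal.

```python
from statistics import mean, stdev


def to_iq_scale(scores, iq_mean=100.0, iq_sd=15.0):
    """Standardize to z-scores (sample SD, as in R's scale()) and rescale to IQ units."""
    m = mean(scores)
    s = stdev(scores)  # n - 1 denominator
    return [iq_mean + iq_sd * (x - m) / s for x in scores]


iq = to_iq_scale([1, 2, 3, 4, 5])  # toy factor scores
```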

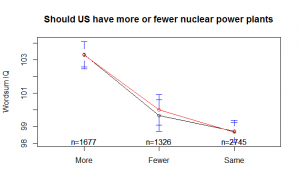

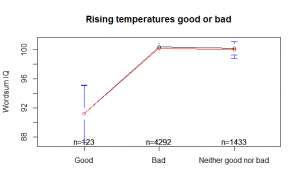

Seventh, I plotted the mean IQ of each answer category with 99% confidence intervals. Each plot shows both IQ estimates: classical FA scores in black, IRT scores in red.
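A mean with a 99% confidence interval per answer category can be sketched in Python as below. The post does not say how the intervals were computed, so this assumes a normal approximation (mean plus or minus 2.576 standard errors); a t-based interval would be slightly wider for small groups. Function names are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev


def mean_ci(values, level=0.99):
    """Mean with a normal-approximation CI: mean +/- z * SE (needs >= 2 values)."""
    m = mean(values)
    se = stdev(values) / math.sqrt(len(values))
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 2.576 for 99%
    return m, m - z * se, m + z * se


def group_means(iqs, answers, level=0.99):
    """Mean IQ and CI for each answer category; None (missing answer) is skipped."""
    groups = {}
    for iq, answer in zip(iqs, answers):
        if answer is not None:
            groups.setdefault(answer, []).append(iq)
    return {answer: mean_ci(vals, level) for answer, vals in groups.items()}
```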

Results and discussion

As expected, nuclear proponents (people who think we should have more) have higher IQs than those who think we should have fewer or the same. Note that this is a non-linear result: the group who thinks we should have fewer has a slightly higher IQ than those who favor the status quo, although this difference is pretty slight. If it is real, it is perhaps because, in line with cognitive miser theory (Renfrow and Howard, 2013), it is easier to favor the status quo than to have an opinion on which way society should change. The difference between proponents and opponents was about 3 IQ points. This is the same as I found using OKCupid data (Kirkegaard, 2015).

The result for the question of whether global warming is happening is interesting. There is almost no effect of intelligence on getting such an obvious question right. Presumably, this means that there is a strong effect of political ideology, at least in these (US) data.

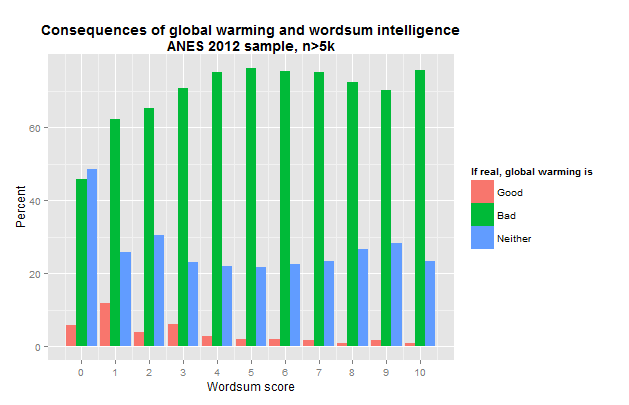

The next two questions were also given to people who said that global warming was not happening; for them, the questions were prefixed with “Assuming it’s happening”. Here we see a strong negative effect of intelligence on thinking that the consequences will be good, as well as weak effects on beliefs about the cause of global warming.

Non-linear results

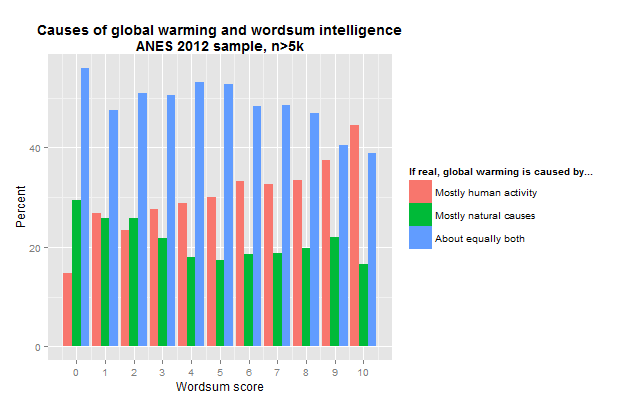

The above plots show only the mean IQ by each answer group to the questions. However, it is possible that there are non-linear effects. These cannot be seen in the above plots. Instead, we may plot the results something like this:

So we do see a non-linear effect: below approximately score 6, there is no effect of intelligence on being a proponent of nuclear power. But in aggregate the effect is strong, about 20 percentage points between the minimum and maximum scores. Perhaps the cognitive capacity to understand the situation does not begin to emerge before that point. There is little relationship between being an opponent and intelligence, perhaps because this belief is caused by factors that are not strongly related to intelligence, such as political leftism. Support for the status quo goes down with higher intelligence, and almost all of that loss goes to the proponent camp. This is what one would expect to see if people were being converted by a better understanding of the situation.

Note that the effect may be mediated by educational level, but I did not examine that.
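These non-linear plots rest on a simple cross-tabulation: for each wordsum score, the share of respondents giving each answer. Below is a Python sketch with hypothetical names and toy data. `min_max_gap` corresponds to the "percentage points between min and max" comparisons made here (as a fraction, so 0.20 means 20 points).

```python
from collections import Counter, defaultdict


def answer_shares_by_score(scores, answers):
    """For each wordsum score, the share giving each answer (None = missing, skipped)."""
    by_score = defaultdict(Counter)
    for score, answer in zip(scores, answers):
        if answer is not None:
            by_score[score][answer] += 1
    return {
        score: {a: n / sum(counts.values()) for a, n in counts.items()}
        for score, counts in sorted(by_score.items())
    }


def min_max_gap(shares, answer):
    """Share giving `answer` at the highest score minus the share at the lowest."""
    lo, hi = min(shares), max(shares)
    return shares[hi].get(answer, 0.0) - shares[lo].get(answer, 0.0)


# Toy data (not ANES): two respondents at score 0, four at score 10.
toy_scores = [0, 0, 10, 10, 10, 10]
toy_answers = ["same", "more", "more", "more", "fewer", "more"]
shares = answer_shares_by_score(toy_scores, toy_answers)
```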

There is a slight effect of intelligence on belief in global warming (about 10 percentage points between the minimum and maximum scores). This may reflect the relatively low ceiling of the test, perhaps around IQ 115; there may be a stronger effect further up.

There is a strong effect of intelligence on believing that humans are the main cause of global warming. The lower-intelligence groups strongly embrace the middle position (both are important), which is perhaps the position of someone who has not researched the issue very well.

Relatively few people think that global warming (given that it is real) is overall a good outcome. There is a non-linear-looking trend in thinking it is bad, but it changes again at the maximum score. Perhaps this is a statistical fluke, perhaps not.

References

ANES. (2012). The American National Election Studies (ANES; www.electionstudies.org). The ANES 2012 Time Series Study [dataset]. Stanford University and the University of Michigan [producers].

Kirkegaard, E. O. W. (2015). Attitude towards nuclear energy and correlates: educational attainment, gender, age, self-rated knowledge, experience, etc. Clear Language, Clear Mind (blog).

Renfrow, D. G., & Howard, J. A. (2013). Social psychology of gender and race. In Handbook of Social Psychology (pp. 491-531). Springer Netherlands.

Wolfle, L. M. (1980). The enduring effects of education on verbal skills. Sociology of Education, 104-114.

R code and data

The dataset and source code are available at the Open Science Framework repository.