Lots of published psychology studies don’t replicate well, in particular cute things like interactions and counter-intuitive or priming effects. In general, traditional behavioral genetics studies have replicated well. This is not surprising because basically the same method (ACE fitting) has been applied to lots of large datasets, and studies that use consistent methods on large datasets tend to reach the same conclusions. That’s because reality is pretty consistent and doesn’t suddenly change from one time to another. There is one thing in behavioral genetics that didn’t replicate well, namely candidate gene association studies. The reasons for this are the usual mix of fallacious p-value reasoning, lack of attention to effect sizes, publication bias and general statistical incompetence, all things that people like Meehl had been criticizing decades earlier. It’s hard to teach someone something when his livelihood depends on not understanding it, scientists included. Behavioral geneticists have since realized the error of their ways and now their results replicate well.

Behavioral geneticists also sometimes fall to temptation and promote cute things like interactions. There are two such prominent hypotheses that I can think of. The first is the proposed interaction effect of variation in the 5-HTT gene and life stress on depression. This study has some of the usual signs of trouble: published in Science, interaction effect, >7000 citations, suitability to left-wing ideology. It doesn’t have a small sample, though: n≈1000. The discovery p value was p=.02, and the main effect of the gene variation was p=.06. The p values also differed across related outcomes, so the finding was not particularly convincing to begin with, but it has >7000 citations, so obviously there is something about it that scientists like. And then the usual stuff happened: large failed replications, meta-analyses with newly proposed moderator effects (effect found for men, not for women), and citation bias. Not exactly confidence inspiring.

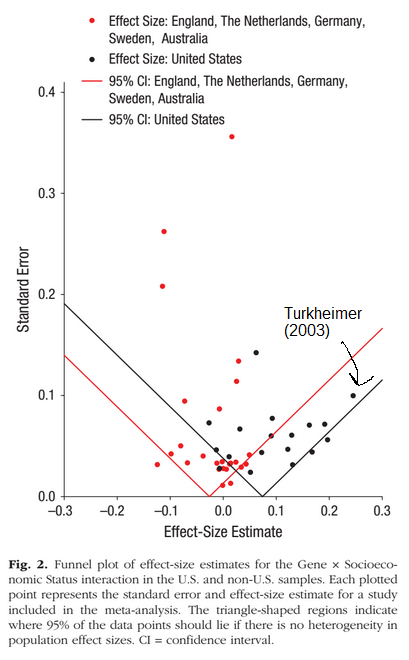

The second hypothesis is the supposed interaction between parental S and heritability of cognitive ability. As above, the first study was published about 10 years ago, has a large number of citations (~1000) and the finding suits left-wing ideology. The sample size was pretty small for a modern behavioral genetic study at ~320 pairs. You can guess what happened: large follow-up studies found inconsistent results and finally someone did a meta-analysis which found no reliable overall effect. What it did find was an effect in a subsample of US studies and none in non-US samples. Unsurprisingly, the original study was a strong outlier.

In fact, we can go further. The meta-analysis dataset is public, so we can take a look at it and look for trouble. The authors used a fancy model that could take the hierarchical data structure into account (multiple outcomes per sample). I used a simpler method where I averaged the results within samples first and then meta-analyzed as usual (same approach as used in my study here). This gave me an overall effect size of .029 [CI95: -.015, .073], so pretty negligible. The authors’ fancier method gave the same number. But then they put a positive spin on it and analyze the data for US and non-US samples separately, which produces findings of .074 and -.027 using their method (.074 and -.041 using mine). Of these, the Turkheimer study had the largest effect size, .19 using my method. So the finding from that study is about 2.6 times larger than the overall US estimate.
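For readers who want to see what "average within samples, then meta-analyze" looks like in practice, here is a minimal Python sketch. It is not the code behind the numbers above: the function names, the choice of a DerSimonian-Laird random-effects model, the rule for combining variances within a sample, and the toy rows are all assumptions made for illustration.

```python
from collections import defaultdict
from math import sqrt


def aggregate_within_samples(rows):
    """Collapse multiple outcomes per sample into one effect per sample.

    rows: iterable of (sample_id, effect, variance) tuples.
    Assumption: variances are simply averaged, which roughly corresponds to
    treating outcomes from the same sample as perfectly correlated.
    """
    grouped = defaultdict(list)
    for sample_id, effect, variance in rows:
        grouped[sample_id].append((effect, variance))
    aggregated = {}
    for sample_id, values in grouped.items():
        effects = [e for e, _ in values]
        variances = [v for _, v in values]
        aggregated[sample_id] = (
            sum(effects) / len(effects),
            sum(variances) / len(variances),
        )
    return aggregated


def random_effects(effects, variances, z_crit=1.96):
    """DerSimonian-Laird random-effects pooled estimate with a 95% CI."""
    k = len(effects)
    w = [1.0 / v for v in variances]
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    # Cochran's Q and the method-of-moments between-study variance (tau^2).
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    w_star = [1.0 / (v + tau2) for v in variances]
    estimate = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = sqrt(1.0 / sum(w_star))
    return estimate, (estimate - z_crit * se, estimate + z_crit * se), tau2


if __name__ == "__main__":
    # Made-up rows purely to show the data shape; NOT the meta-analysis data.
    toy_rows = [
        ("sample_A", 0.10, 0.010),
        ("sample_A", 0.20, 0.010),
        ("sample_B", 0.00, 0.040),
        ("sample_C", -0.05, 0.020),
    ]
    per_sample = aggregate_within_samples(toy_rows)
    pooled, ci, tau2 = random_effects(
        [e for e, _ in per_sample.values()],
        [v for _, v in per_sample.values()],
    )
    print(per_sample)
    print(pooled, ci, tau2)
```

Averaging first throws away some information compared to a proper multilevel model, but it keeps each sample from being counted several times, which is the main thing the hierarchical model is protecting against.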

What about publication bias? There aren’t enough studies to be very certain (k=17 after aggregation), but if one does run the correlation between standard error/sample size and effect size it’s negative: -.31/-.23, with large confidence intervals. So, an unsatisfactory maybe.
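To give a feel for why the confidence intervals are large with so few studies, here is a small Python sketch of this kind of check: a Pearson correlation plus an approximate 95% interval from the Fisher z-transformation. The helper names and the use of the Fisher approximation are my assumptions for illustration, not necessarily how the intervals above were computed.

```python
from math import atanh, sqrt, tanh


def pearson_r(x, y):
    """Plain Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def fisher_ci(r, n, z_crit=1.96):
    """Approximate CI for a correlation via the Fisher z-transformation.

    Assumption: the usual large-sample standard error 1/sqrt(n - 3),
    which is only a rough guide with very few studies.
    """
    z = atanh(r)
    se = 1.0 / sqrt(n - 3)
    return tanh(z - z_crit * se), tanh(z + z_crit * se)


if __name__ == "__main__":
    # Using the values reported in the text: r = -.31 with k = 17 studies.
    low, high = fisher_ci(-0.31, 17)
    print(round(low, 2), round(high, 2))
```

With k=17 the interval around -.31 comfortably includes zero, which is why the result is only a maybe.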

So did Turkheimer (2003) replicate? Well, if by replicate we mean that a later meta-analysis of an ad hoc subset of all studies produced an estimate with p<alpha that was ~2.6 times smaller than the original study’s, then yes. Really, the word replicate is a dichotomous way of thinking about outcomes of studies. Another way to put it is that the original study produced a finding much larger than what other studies find and that we’re not even sure there is an overall effect that isn’t so close to zero we don’t care, even for the US. Possibly there is no finding here at all, possibly there are moderator effects and we will have to do 20 more large studies to find out. Business as usual. Sigh.

With regard to discussion of race differences, all this is fairly pointless because these shared environment effects go away with age and heritability does not seem to differ by SIRE (self-identified race/ethnicity) anyway. At least, based on those studies that did report heritabilities by SIRE.

Update 27th May, 2017

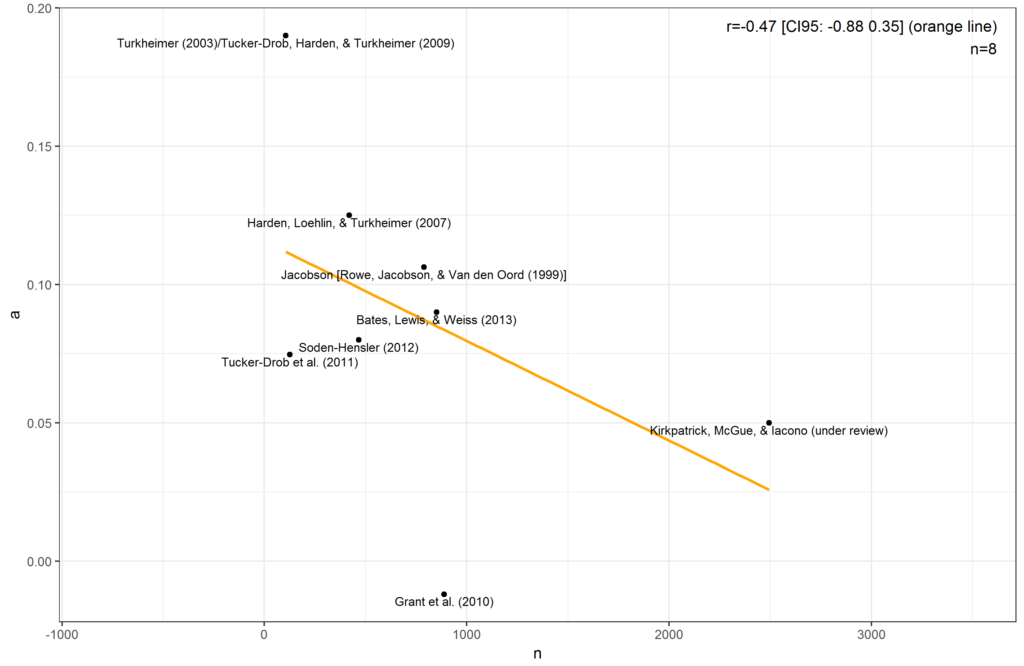

Some keep claiming that we should accept the interaction effect for the US subsample. Well, let’s do a quick test for publication bias in that too. Here are the scatterplots for the total sample and the US-only subsample.

The patterns are negative, but not conclusively so due to the small number of studies. Still, it means that we should be more skeptical. The effect size is likely overestimated.

(This post was written in response to Daphne’s odd behavior of telling me to read a meta-analysis I already covered in my own post and even included a central figure from!)