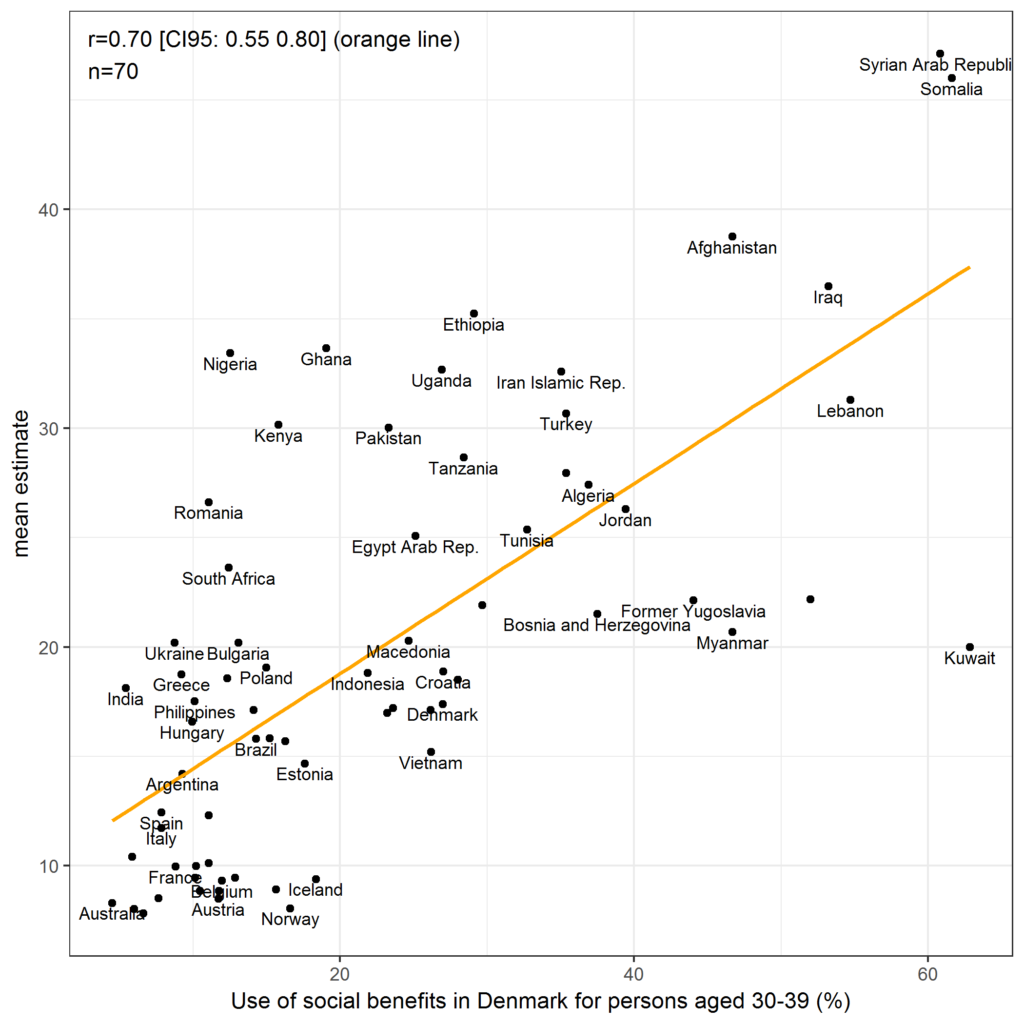

Humans have stereotypes, that is, they hold beliefs about groups of people. When examined, these beliefs tend to reflect reality quite well, sometimes very well (for a review, see Jussim’s book). Below is a figure from our own study (Kirkegaard and Bjerrekær, 2016). We recruited a nationally representative sample of 500 Danes and filtered out those who failed the control questions. We asked everybody to estimate what percentage of persons from each of 70 national groups were receiving welfare benefits in Denmark, and we compared their estimates with the true values from the Danish statistics agency. The plot shows the average estimate and the true value for each group.

As can be seen, the accuracy was overwhelming, at r = .70. Correlations this large are rarely seen in social science.
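For readers who want to see what such an accuracy correlation is, here is a minimal sketch of computing a Pearson r between paired average estimates and true values. The helper name `pearson_r` and the five-group numbers are hypothetical and made up for illustration; they are not the study's data.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences of numbers."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products of deviations from the means (unnormalized covariance)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(ss_x * ss_y)


# Hypothetical illustration only: true vs. average estimated welfare rates (%)
# for five made-up groups, not values from the study.
true_rates = [5.0, 10.0, 20.0, 30.0, 40.0]
mean_estimates = [12.0, 15.0, 20.0, 28.0, 30.0]
print(round(pearson_r(mean_estimates, true_rates), 2))
```

Note that r measures only how well the estimates track the ranking and spacing of the true values; it says nothing about whether the estimates are too high, too low, or too compressed overall.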

Recently there has been talk of how prediction algorithms are ‘racist’ and ‘sexist’ because they take race and sex into account when making predictions. That is to say, these social categories have non-zero incremental validity: ignoring them means deliberately making worse predictions than one could, which is prima facie irrational. The situation is not simple even from an ethical perspective. Most (US) black crime is targeted against other blacks, so by, e.g., granting blacks higher rates of probation, one risks releasing people who then commit more crimes against other blacks. Which is best for the black community at large?
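Incremental validity can be made concrete by comparing how much outcome variance (R²) a model explains with and without an extra predictor. The sketch below is an illustration under stated assumptions: a linear model fit by ordinary least squares with NumPy, and simulated data in which the outcome truly depends on a generic binary category `g`. The names `x`, `g`, and `r_squared` are hypothetical and do not refer to any real dataset or to the algorithms discussed above.

```python
import numpy as np


def r_squared(X, y):
    """In-sample R^2 of an OLS fit of y on X (an intercept column is added)."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ beta
    return 1.0 - resid.var() / y.var()


rng = np.random.default_rng(0)
n = 10_000
x = rng.normal(size=n)                       # predictor already in the model
g = rng.integers(0, 2, size=n)               # hypothetical binary category
y = 1.0 * x + 0.5 * g + rng.normal(size=n)   # simulated outcome depends on both

r2_without = r_squared(x, y)
r2_with = r_squared(np.column_stack([x, g]), y)
print(f"R^2 without g: {r2_without:.3f}, with g: {r2_with:.3f}")
print(f"incremental validity (delta R^2): {r2_with - r2_without:.3f}")
```

Because adding a column to an OLS model can never lower in-sample R², a real analysis would check the gain on held-out data; here the gain is genuine only because the simulation builds `g` into the outcome.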

The fact that non-human algorithms also find that these social groups have non-zero validity is hard to square with certain models of stereotypes. Some people believe that stereotypes are massively affected by allegedly biased representation of social groups in, e.g., TV shows. The theory is that the stereotypes on TV cause people to adopt stereotypes, which then cause social inequality in various ways, usually through discrimination. This view is hard to square with the fact that AIs and statistical algorithms also find these cues useful despite not watching TV shows, or even having any understanding of what they are doing. This finding fits better with the accuracy model of stereotypes, according to which humans are able to identify useful statistical cues from their own experiences and from the culture more broadly. Culture, on this view, reflects reality rather than creates it. Of course, there may also be some very inaccurate stereotypes, but in general they tend to have nontrivial accuracy because they are caused by real differences. We may note that many studies of stereotypes in culture find that these portrayals are too moderate for reality. For instance, Dalliard (2014) notes:

> A better test of racial bias in television — and also more pertinent to Kaplan’s concerns about children’s television viewing — is the portrayal of race in fictional crime shows. Unlike news programs, such shows are not constrained by verisimilitude, which means that gross racial biases in the portrayal of crime are possible. However, studies have consistently found that compared to real-life crime statistics, blacks are underrepresented among criminal offenders in crime dramas, while whites are greatly overrepresented (Potter et al., 1995; Eschholz, 2002; Eschholz et al., 2004; Deutsch & Cavender, 2008; Case, 2013). For example, 75 percent of the violent offenders and suspects in the 2000-01 season of Law & Order were white, whereas in the late 1990s only 13 percent of real-life violent crime suspects were white in New York City where the show was set. For black offenders and suspects, the proportions were 14 percent in the fictional world of Law & Order versus 51 percent in real life. (Eschholz et al., 2004, Table 1.)

I note that stereotype underestimation of real differences is 1) commonly found, including in our study above, where stereotypes varied 38% less than the real differences (i.e. were not extreme enough), 2) predicted by the accuracy model, because if stereotypes are imperfect reflections of reality they should lie closer to the mean than reality does, and 3) not predicted by the model in which stereotypes cause inequality, because, by the same logic, an imperfectly transmitted cause (the stereotypes) should show larger dispersion than its effect (the real differences).
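Point 2) can be illustrated with a small simulation under one specific assumption: that a belief about a group is the best guess of its true value given a noisy impression of it, i.e. the impression shrunk toward the overall mean (regression toward the mean). Under that assumption the beliefs stay correlated with reality but are less dispersed, with an SD ratio of about sqrt(λ), where λ = σ²/(σ² + τ²) is the reliability of the impressions. The parameter values below are arbitrary and are not fitted to our study.

```python
import numpy as np

rng = np.random.default_rng(1)
n_groups = 100_000                 # many simulated groups so the ratios are stable
mu, sigma, tau = 20.0, 10.0, 10.0  # assumed mean, true SD, and impression-noise SD

true_vals = rng.normal(mu, sigma, n_groups)
impressions = true_vals + rng.normal(0.0, tau, n_groups)  # noisy impressions of reality

# Best guess of the true value given a noisy impression (Gaussian case):
# shrink the impression toward the mean by the reliability factor lam.
lam = sigma**2 / (sigma**2 + tau**2)
beliefs = mu + lam * (impressions - mu)

sd_ratio = beliefs.std() / true_vals.std()
r = np.corrcoef(beliefs, true_vals)[0, 1]
print(f"SD ratio (beliefs / reality): {sd_ratio:.2f}, expected about {np.sqrt(lam):.2f}")
print(f"correlation with reality: {r:.2f}")
```

With these arbitrary settings the beliefs vary roughly 30% less than reality while still correlating strongly with it; noisier impressions (larger `tau`) give more compression.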

Note, though, that one possible reply from those holding the usual position, that stereotypes are inaccurate causes of social inequality, is that computers pick up these cues because the cues are accurate, but only because human stereotypes caused the inequality in the first place. This hypothesis is harder to test, but one can note that on this model it is hard to explain the consistency of stereotypes across societies. Why do stereotypes happen to be very similar (e.g. for sex) more or less everywhere, while at the same time we find more or less the same sex differences everywhere too? If these were caused by contingent cultural causes, why are they so… universal? Universality suggests a single universal cause, genetics, rather than many independent causes that happen to align.