- Dahlke, J. A., & Sackett, P. R. (2017). The Relationship Between Cognitive-ability Saturation and Subgroup Mean Differences Across Predictors of Job Performance. Journal of Applied Psychology.

Abstract

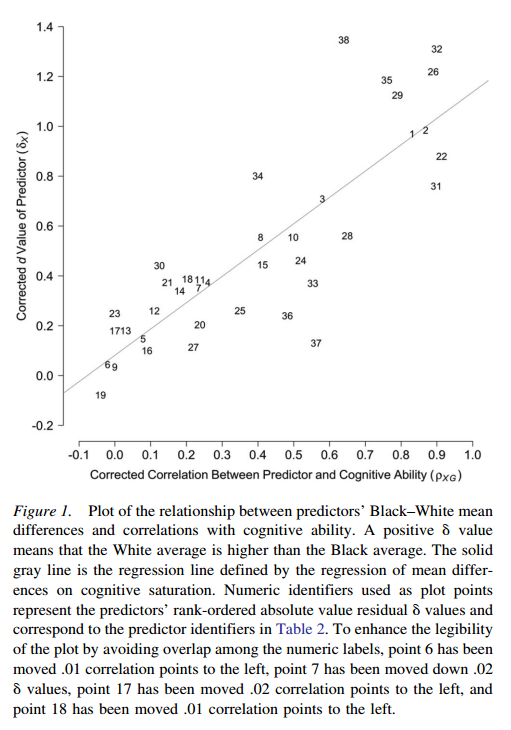

The authors quantify the conventional wisdom that predictors’ correlations with cognitive ability are positively related to subgroup mean differences. Using meta-analytic and large-N data from diverse predictors, they found that cognitive saturation correlates .84 with predictors’ artifact-corrected Black–White d values and .95 with predictors’ artifact-corrected Hispanic–White d values. The authors also investigate the extent to which d values are associated with the use of assessor-based scoring and with predictor domains in which differential investment is likely to occur. As a practical application of these findings, they present a procedure to forecast mean differences on a new predictor based on its cognitive saturation and other attributes. They also present a Bayesian framework that allows one to integrate regression-based forecasts with observed d values to achieve more precise estimates of mean differences. The proposed forecasting techniques based on the relationship between mean differences and cognitive saturation can help to mitigate the difficulties inherent in computing precise local estimates of mean differences.
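The abstract mentions a Bayesian framework for combining a regression-based forecast of d with a locally observed d. The paper's exact framework is not reproduced here. Below is a minimal sketch of generic normal-normal precision weighting, which is my assumption about the general form rather than the authors' stated method. It assumes the forecast comes with a prediction variance, and that the observed d's sampling variance follows the usual large-sample approximation. Function names and the numbers in the usage lines are hypothetical.

```python
def d_sampling_variance(d: float, n1: int, n2: int) -> float:
    """Large-sample sampling variance of a standardized mean difference d."""
    return (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))


def combine_forecast_and_observed(forecast_d, forecast_var, observed_d, observed_var):
    """Treat the regression forecast as a normal prior and the observed d as
    normally distributed data; return the posterior mean and variance."""
    if forecast_var <= 0 or observed_var <= 0:
        raise ValueError("variances must be positive")
    prior_precision = 1.0 / forecast_var
    data_precision = 1.0 / observed_var
    post_var = 1.0 / (prior_precision + data_precision)
    # Precision-weighted average of the forecast and the observed value
    post_mean = post_var * (prior_precision * forecast_d + data_precision * observed_d)
    return post_mean, post_var


if __name__ == "__main__":
    # Hypothetical inputs: forecast d = 0.50 (variance 0.01), local d = 0.80 from 50 + 50 people.
    obs_var = d_sampling_variance(0.80, 50, 50)
    mean, var = combine_forecast_and_observed(0.50, 0.01, 0.80, obs_var)
    print(f"posterior d = {mean:.3f}, SD = {var ** 0.5:.3f}")
```

With a small local sample, the observed d has a large sampling variance, so the posterior is pulled toward the forecast. With a large local sample, the observed d dominates.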

If you find it a little circumspect with regard to Spearman’s Hypothesis (SH), you are right. SH is in fact mentioned only a few times:

Our focus on correlating subgroup mean differences with cognitive saturation makes the present study a generalization of Spearman’s hypothesis. Spearman’s hypothesis proposes that standardized Black–White mean differences on tests of specific cognitive abilities are primarily due to the tests’ correlations with g (i.e., a general ability factor; Jensen, 1985; Rushton & Jensen, 2005). We broaden the scope of this perspective beyond specific cognitive abilities to include predictor measures commonly of interest in personnel selection (e.g., interviews, work simulations, and personality inventories). By testing the extent to which predictors’ cognitive saturations are associated with mean differences, we address Spearman’s hypothesis in a way that broadens its relevance to the literature on pre-employment assessments.

With pre-employment assessments spanning a wide range of content domains, we recognize that subgroup mean differences on predictor variables may be influenced by factors other than cognitive saturation, such as biased evaluations by assessors. Some predictors are scored objectively, whereas others (e.g., interviews, assessment centers) require rater judgment. Bias against minority group members could result in a larger d value than would be expected from a predictor’s cognitive saturation. However, an alternative perspective suggests that ratings made using a shifting-standards model (i.e., members of different groups are rated in terms of their standing within their respective groups) could result in a smaller d value than expected (Biernat, 2012), as could ratings made by assessors who implicitly or explicitly favor minority candidates. Thus, there are valid reasons to expect assessors to either exacerbate or attenuate subgroup mean differences and our study may help to clarify which effect is more prevalent.
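Spearman’s hypothesis, and the generalization quoted above, boils down to correlating two vectors across predictors. One vector holds each predictor’s cognitive saturation (or g loading), and the other holds its d value. Below is a minimal sketch of that correlation. The input lists in the example are hypothetical placeholders, not the paper’s data. The sketch also ignores the sample-size weighting and artifact corrections a real meta-analysis would apply.

```python
import math
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation between two equal-length vectors."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two vectors of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Cross-product and sums of squares around the means
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical values for five predictors: cognitive saturation and Black-White d.
    saturation = [0.10, 0.35, 0.50, 0.70, 0.95]
    d_values = [0.05, 0.30, 0.45, 0.60, 0.95]
    print(f"r(saturation, d) = {pearson_r(saturation, d_values):.2f}")
```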

Briefly stated, the authors expand on the usual SH literature by looking at non-test predictors of job performance and checking whether the subgroup (i.e., race) gaps on these alternative, not-quite-general-intelligence measures track their cognitive saturation. The predictors include:

The meta-analytic literature on d values indicates that predictors vary substantially in mean differences, ranging from small or no differences on measures of personality traits such as agreeableness and conscientiousness (Foldes, Duehr, & Ones, 2008) to rather large differences on measures of general cognitive ability (Bobko, Roth, & Potosky, 1999; Roth, Bevier, Bobko, Switzer, & Tyler, 2001). Predictors toward the midpoint of these extremes include assessment centers (Dean, Roth, & Bobko, 2008), situational judgment tests (Whetzel, McDaniel, & Nguyen, 2008), and unstructured interviews (Huffcutt & Roth, 1998), each of which clearly draws on cognitive abilities to some extent, but also taps other individual differences such as personality traits. In the data we examine here, we explore whether predictors’ correlations with cognitive ability can explain variance in predictors’ subgroup mean differences.

They employ the usual Schmidt and Hunter meta-analytic procedures: corrections for measurement error and for restricted variance (‘range restriction’). They dug around in a bunch of large-sample studies and meta-analyses to find the required correlations and applied the corrections. There were only sufficient data for Black–White and Hispanic–White comparisons. The scatterplots speak for themselves.
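For readers unfamiliar with these corrections, the sketch below shows the textbook Hunter and Schmidt formulas. It covers disattenuating a correlation, correcting a d value for unreliability, and Thorndike’s Case II correction for direct range restriction. I have not checked which exact variants Dahlke & Sackett applied, for example direct versus indirect range restriction. In practice the order in which corrections are applied matters, and this sketch does not model that. The function names are my own.

```python
import math


def correct_r_for_unreliability(r_obs: float, rxx: float, ryy: float) -> float:
    """Disattenuate an observed correlation for measurement error in both variables."""
    if not (0 < rxx <= 1 and 0 < ryy <= 1):
        raise ValueError("reliabilities must be in (0, 1]")
    return r_obs / math.sqrt(rxx * ryy)


def correct_d_for_unreliability(d_obs: float, rxx: float) -> float:
    """Correct a standardized mean difference for unreliability in the measure.

    Error variance inflates the observed SD, so dividing by sqrt(rxx) rescales
    d to the true-score SD."""
    if not 0 < rxx <= 1:
        raise ValueError("reliability must be in (0, 1]")
    return d_obs / math.sqrt(rxx)


def correct_r_for_direct_range_restriction(r_obs: float, u: float) -> float:
    """Thorndike Case II correction; u = unrestricted SD / restricted SD (u >= 1)."""
    if u < 1:
        raise ValueError("u must be >= 1")
    return (u * r_obs) / math.sqrt(1 + (u ** 2 - 1) * r_obs ** 2)
```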

They then ran some regressions with a few controls, which changed very little. So the rather obvious general thesis holds: the more a selection criterion correlates with IQ, the larger the race gaps it produces. This finding is not a tautology, because it is theoretically possible that some odd interaction exists such that the more g-loaded alternative criteria just happened to have opposite race gaps. There was no reason to expect this (in fact, the opposite), so the present findings may be regarded as fairly unsurprising.
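The post doesn’t list which controls were used. The abstract does mention assessor-based scoring, so the sketch below regresses d on cognitive saturation plus a hypothetical 0/1 dummy for assessor-scored predictors. It then uses the fitted coefficients to forecast d for a new predictor, which is the forecasting idea from the abstract. This is plain OLS via NumPy and ignores any meta-analytic weighting the authors may have used. The function names are illustrative.

```python
import numpy as np


def fit_d_model(saturation, d_values, assessor_scored):
    """OLS of d on cognitive saturation and a 0/1 assessor-scoring dummy.

    Returns [intercept, saturation slope, assessor-scoring shift]."""
    s = np.asarray(saturation, dtype=float)
    y = np.asarray(d_values, dtype=float)
    a = np.asarray(assessor_scored, dtype=float)
    X = np.column_stack([np.ones_like(s), s, a])  # design matrix with intercept
    coefs, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coefs


def forecast_d(coefs, saturation, assessor_scored):
    """Predicted d for a new predictor from the fitted coefficients."""
    return float(coefs[0] + coefs[1] * saturation + coefs[2] * float(assessor_scored))
```

Given real (saturation, d, scoring-type) triples, a positive coefficient on the dummy would point to assessors exacerbating gaps, and a negative one to attenuation. The quoted excerpt above frames the question in exactly those two ways.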

See also an earlier study that manipulated the g saturation of test composites and found that doing so moved both the race gaps and the validity in step with it. Why resist the obvious?

- McDaniel, M. A., & Kepes, S. (2014). An Evaluation of Spearman’s Hypothesis by Manipulating g Saturation. International Journal of Selection and Assessment, 22(4), 333–342. https://doi.org/10.1111/ijsa.12081

Spearman’s Hypothesis holds that the magnitude of mean White–Black differences on cognitive tests covaries with the extent to which a test is saturated with g. This paper evaluates Spearman’s Hypothesis by manipulating the g saturation of cognitive composites. Using a sample of 16,384 people from the General Aptitude Test Battery database, we show that one can decrease mean racial differences in a g test by altering the g saturation of the measure. Consistent with Spearman’s Hypothesis, the g saturation of a test is positively and strongly related to the magnitude of White–Black mean racial differences in test scores. We demonstrate that the reduction in mean racial differences accomplished by reducing the g saturation in a measure is obtained at the cost of lower validity and increased prediction errors. We recommend that g tests varying in mean racial differences be examined to determine if the Spearman’s Hypothesis is a viable explanation for the results.
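To see the mechanics behind manipulating g saturation, here is a sketch using the standard formulas for a weighted composite of standardized components. It assumes equal within-group SDs, a known within-group intercorrelation matrix R, and component correlations with g supplied as inputs. This is my illustration, not McDaniel & Kepes’s actual procedure on the GATB data. The function name and example values are hypothetical.

```python
import numpy as np


def composite_d_and_g_saturation(weights, d_values, g_loadings, R):
    """d and correlation with g for a weighted sum of standardized components.

    Assumes R is the (within-group) intercorrelation matrix of the components,
    d_values are component d's, and g_loadings are each component's
    correlation with g."""
    w = np.asarray(weights, dtype=float)
    R = np.asarray(R, dtype=float)
    composite_sd = np.sqrt(w @ R @ w)  # SD of the weighted sum
    d_comp = float(w @ np.asarray(d_values, dtype=float) / composite_sd)
    r_comp_g = float(w @ np.asarray(g_loadings, dtype=float) / composite_sd)
    return d_comp, r_comp_g


if __name__ == "__main__":
    # Hypothetical: two uncorrelated components, one highly g-loaded with a large d.
    R = np.eye(2)
    for w in ([1, 0], [1, 1], [0, 1]):
        d, r = composite_d_and_g_saturation(w, [1.0, 0.0], [0.8, 0.2], R)
        print(f"weights={w}: d={d:.2f}, r with g={r:.2f}")
```

Shifting weight toward the less g-loaded component lowers both the composite’s g saturation and its d, which is the direction the abstract describes. Whether this also costs validity depends on the components’ correlations with the criterion, which the sketch omits.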

Both papers remind me that I want to get hold of that GATB dataset.

PS. I don’t know why Dahlke & Sackett do not cite any of the recent literature on SH. There have been more than 10 years of large meta-analyses since the Rushton and Jensen (2005) review article. Probably some academic quarrel…