There is a famous meta-analysis showing that motivation affects IQ scores to a substantial degree:

- Duckworth, A. L., Quinn, P. D., Lynam, D. R., Loeber, R., & Stouthamer-Loeber, M. (2011). Role of test motivation in intelligence testing. Proceedings of the National Academy of Sciences, 108(19), 7716-7720.

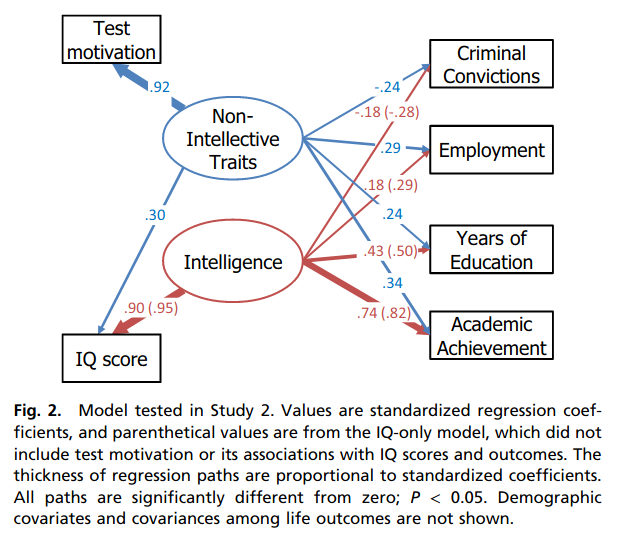

Intelligence tests are widely assumed to measure maximal intellectual performance, and predictive associations between intelligence quotient (IQ) scores and later-life outcomes are typically interpreted as unbiased estimates of the effect of intellectual ability on academic, professional, and social life outcomes. The current investigation critically examines these assumptions and finds evidence against both. First, we examined whether motivation is less than maximal on intelligence tests administered in the context of low-stakes research situations. Specifically, we completed a meta-analysis of random-assignment experiments testing the effects of material incentives on intelligence-test performance on a collective 2,008 participants. Incentives increased IQ scores by an average of 0.64 SD, with larger effects for individuals with lower baseline IQ scores. Second, we tested whether individual differences in motivation during IQ testing can spuriously inflate the predictive validity of intelligence for life outcomes. Trained observers rated test motivation among 251 adolescent boys completing intelligence tests using a 15-min “thin-slice” video sample. IQ score predicted life outcomes, including academic performance in adolescence and criminal convictions, employment, and years of education in early adulthood. After adjusting for the influence of test motivation, however, the predictive validity of intelligence for life outcomes was significantly diminished, particularly for nonacademic outcomes. Collectively, our findings suggest that, under low-stakes research conditions, some individuals try harder than others, and, in this context, test motivation can act as a third-variable confound that inflates estimates of the predictive validity of intelligence for life outcomes.

I.e., IQ scores are caused both by trait variance (intelligence, g) and by motivation. That is to say, there is some trait impurity in the measure because it in part measures the wrong trait, motivation. Insofar as people differ on this trait, and insofar as differences on this trait relate to variables of interest, it will bias estimates of the relationship between intelligence and those variables when IQ scores are used as the measure of intelligence.
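The confounding argument can be illustrated with a small simulation. This is only a sketch under made-up assumptions, not the model used by Duckworth et al.: g and motivation are drawn as independent standard normal variables, and the weights (0.5 for motivation in the test score, 0.3 each for g and motivation in the outcome) are hypothetical numbers chosen for illustration. It compares how strongly an outcome correlates with a motivation-free score versus a score contaminated by motivation.

```python
import random


def pearson(x, y):
    """Plain Pearson correlation, written out to avoid extra dependencies."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def simulate(n=20000, motivation_weight=0.5, seed=1):
    """Return (r for motivation-free score, r for contaminated score).

    All weights are hypothetical and exist only to illustrate the logic.
    """
    rng = random.Random(seed)
    g = [rng.gauss(0, 1) for _ in range(n)]            # intelligence
    motivation = [rng.gauss(0, 1) for _ in range(n)]   # assumed independent of g

    # A score reflecting only g plus measurement error.
    pure = [gi + rng.gauss(0, 0.5) for gi in g]
    # The same score, additionally pushed up or down by test motivation.
    observed = [p + motivation_weight * m for p, m in zip(pure, motivation)]

    # A life outcome that depends on both g and motivation, plus noise.
    outcome = [0.3 * gi + 0.3 * m + rng.gauss(0, 1)
               for gi, m in zip(g, motivation)]

    return pearson(pure, outcome), pearson(observed, outcome)


if __name__ == "__main__":
    pure_r, observed_r = simulate()
    print(f"motivation-free score vs outcome: r = {pure_r:.2f}")
    print(f"contaminated score vs outcome:    r = {observed_r:.2f}")
```

Under these assumed weights the contaminated score correlates more strongly with the outcome than the motivation-free score does, which is the inflation the abstract describes. The direction depends on the assumptions: if motivation had no relation to the outcome, the contamination would only add noise and weaken the correlation instead.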

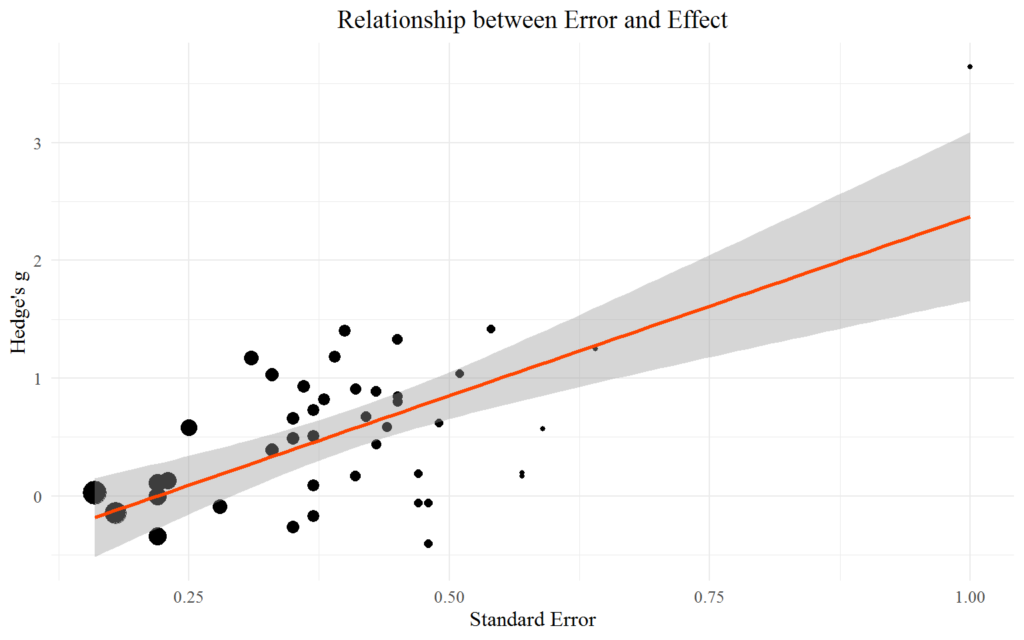

There are some serious problems with this meta-analysis, which you can read about here in detail, but two points stand out: 1) Stephen Breuning contributed studies to it, and he is a known fraud; his effect sizes are about 2 d (!!!) and thus very likely fraudulent. Strangely, this has been known for a long time (e.g. 1988), yet his studies were still included without any note about the issue. 2) Even without his likely fraudulent studies, we get this pattern:

If you have learned anything from the last years of replication failures, it is that this pattern is not what you want.

Problems with that work aside, there is some newer work too:

- Gignac, G. E. (2018). A moderate financial incentive can increase effort, but not intelligence test performance in adult volunteers. British Journal of Psychology, 109(3), 500-516.

A positive correlation between self‐reported test‐taking motivation and intelligence test performance has been reported. Additionally, some financial incentive experimental evidence suggests that intelligence test performance can be improved, based on the provision of financial incentives. However, only a small percentage of the experimental research has been conducted with adults. Furthermore, virtually none of the intelligence experimental research has measured the impact of financial incentives on test‐taking motivation. Consequently, we conducted an experiment with 99 adult volunteers who completed a battery of intelligence tests under two conditions: no financial incentive and financial incentive (counterbalanced). We also measured self‐reported test‐taking importance and effort at time 1 and time 2. The financial incentive was observed to impact test‐taking effort statistically significantly. By contrast, no statistically significant effects were observed for the intelligence test performance scores. Finally, the intelligence test scores were found to correlate positively with both test‐taking importance (rc = .28) and effort (rc = .37), although only effort correlated uniquely with intelligence (partial rc = .26). In conjunction with other empirical research, it is concluded that a financial incentive can increase test‐taking effort. However, the potential effects on intelligence test performance in adult volunteers seem limited.

So, limited sample size, but we need more of this. I told the author as much when he presented the work at ISIR 2017. Hopefully, he can find some collaborators to run a larger replication. There is a replication of sorts here, also small and with some dubious p values…

- Gignac, G. E., Bartulovich, A., & Salleo, E. (2019). Maximum effort may not be required for valid intelligence test score interpretations. Intelligence, 75, 73-84.

Intelligence tests are assumed to require maximal effort on the part of the examinee. However, the degree to which undergraduate first-year psychology volunteers, a commonly used source of participants in low-stakes research, may be motivated to complete a battery of intelligence tests has not yet been tested. Furthermore, the assumption implies that the association between test-taking motivation and intelligence test performance is linear – an assumption untested, to date. Consequently, we administered a battery of five intelligence subtests to a sample of 219 undergraduate volunteers within the first 30 min of a low-stakes research setting. We also administered a reading comprehension test near the end of the testing session (55 min). Self-reported test-taking motivation was measured on three occasions: at the beginning (as a trait), immediately after the battery of five intelligence tests (state 1), and immediately after the reading comprehension test (state 2). Six percent of the sample was considered potentially insufficiently motivated to complete the intelligence testing, and 13% insufficiently motivated to complete the later administered reading comprehension test. Furthermore, test-taking motivation correlated positively with general intelligence test performance (r ≈ 0.20). However, the effect was non-linear such that the positive association resided entirely between the low to moderate levels of test-taking motivation. While simultaneously acknowledging the exploratory nature of this investigation, it was concluded that a moderate level of test-taking effort may be all that is necessary to produce intelligence test scores that are valid. However, in low-stakes research settings, cognitive ability testing that exceeds 25 to 30 min may be inadvisable, as test-taking motivational levels decrease to a degree that may be concerning for a non-negligible portion of the sample.

Important for survey research or student ‘volunteers’. Make briefer tests!

- Silm, G., Pedaste, M., & Täht, K. (2020). The relationship between performance and test-taking effort when measured with self-report or time-based instruments: A meta-analytic review. Educational Research Review, 100335.

Test-taking motivation (TTM) has been found to have a profound effect on low-stakes test results. From the components of TTM test-taking effort has been shown to be the strongest predictor of test performance. This article presents an overview of methods and instruments used to measure TTM and effect sizes between test-taking effort and performance found with these instruments. Altogether 104 articles were included in the qualitative synthesis based on literature search in EBSCO Discovery database. Effect sizes for the relationship between test-taking effort and performance were available in 28 studies. The average correlation between self-reported effort and performance was r = .33 and the average correlation between Response Time Effort and performance was r = .72, indicating that these two types of measures could be distinctly different. Educational level was a significant moderator of the effect sizes: the average correlation between test-taking effort and performance was stronger for university students than for school students. An overview of interventions aimed to enhance TTM and their effect is given.

So this is a meta-analytic replication of sorts, though only sort of, because this one is not about controlled studies.