And I am not talking about COVID vaccines!

I am talking about this far more intriguing paper:

- Miller, N. Z., & Goldman, G. S. (2011). Infant mortality rates regressed against number of vaccine doses routinely given: Is there a biochemical or synergistic toxicity? Human & Experimental Toxicology, 30(9), 1420-1428.

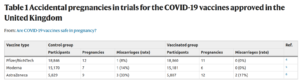

The infant mortality rate (IMR) is one of the most important indicators of the socio-economic well-being and public health conditions of a country. The US childhood immunization schedule specifies 26 vaccine doses for infants aged less than 1 year—the most in the world—yet 33 nations have lower IMRs. Using linear regression, the immunization schedules of these 34 nations were examined and a correlation coefficient of r = 0.70 (p < 0.0001) was found between IMRs and the number of vaccine doses routinely given to infants. Nations were also grouped into five different vaccine dose ranges: 12–14, 15–17, 18–20, 21–23, and 24–26. The mean IMRs of all nations within each group were then calculated. Linear regression analysis of unweighted mean IMRs showed a high statistically significant correlation between increasing number of vaccine doses and increasing infant mortality rates, with r = 0.992 (p = 0.0009). Using the Tukey-Kramer test, statistically significant differences in mean IMRs were found between nations giving 12–14 vaccine doses and those giving 21–23, and 24–26 doses. A closer inspection of correlations between vaccine doses, biochemical or synergistic toxicity, and IMRs is essential.

Whoa, that sounds bad! The authors show this plot:

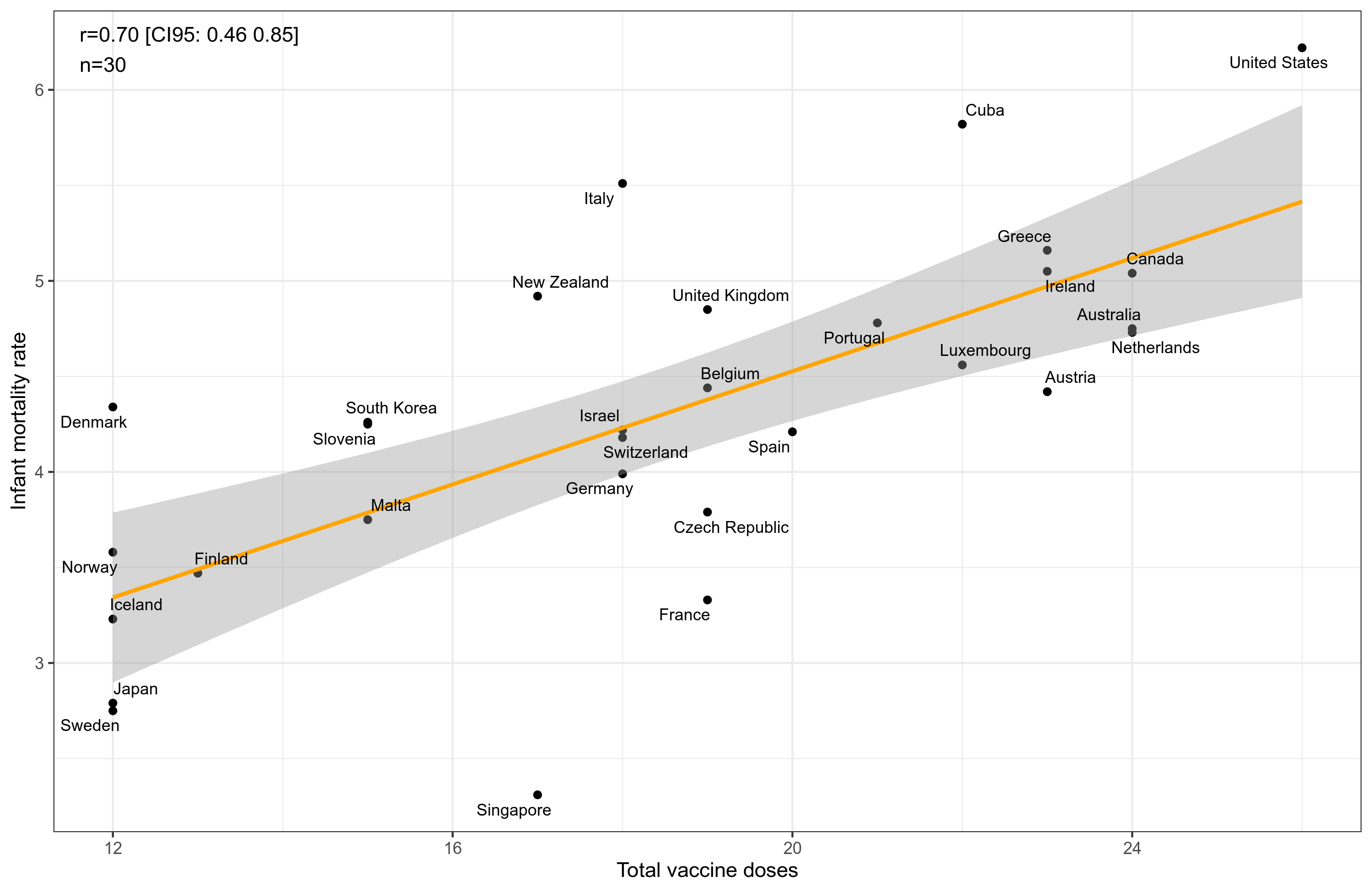

And it looks pretty bad indeed. But how annoying that they did not add country labels, so we could see who is dying a lot. They do provide their data in two tables, which for incomprehensible reasons are published as pictures, so we have to use OCR to extract the data. We first replicate their figure with their own data (the R code and data are here):

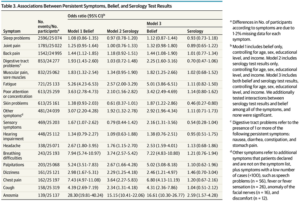

So far so good. Can we replicate this result using modern data? The infant mortality data are easy to look up; Wikipedia has them. What about the vaccine programs? Well, the authors refer to two sources. The first is now a Chinese scam site, and the second is too general (WHO/UNICEF. Immunization Summary: A Statistical Reference Containing Data Through 2008 (The 2010 Edition). www.childinfo.org.). OK, so we try searching, and we find this Europe/EU-wide website. Great! Now we can easily compare the data, right? No. They don’t provide counts, just bewildering tables like this one, one for each disease:

But, they also provide country-level overviews, like this one for Bulgaria:

Why not some kind of nicer overview? So I did the boring thing and went through each country, counting each dose of recommended/mandatory vaccine given before 13 months of age, including at birth. The sample is about the same size as before but covers a somewhat different set of countries, as the Anglos are missing and some others are included. Anyway, the results look like this:
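The counting rule described above can be sketched in a few lines of Python. This is only an illustration, not the code used for this post: the `ScheduledDose` type, the `count_infant_doses` function, and the example schedule are all hypothetical, and the schedule is made up rather than taken from any real country.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScheduledDose:
    """One entry in a (hypothetical) national schedule."""
    vaccine: str
    age_months: float  # 0 means "at birth"


def count_infant_doses(schedule, cutoff_months=13):
    """Count doses scheduled before the cutoff age, including doses at birth."""
    return sum(1 for dose in schedule if 0 <= dose.age_months < cutoff_months)


# Made-up schedule for illustration only; not any real country's program.
example_schedule = [
    ScheduledDose("vaccine A", 0),   # at birth, counted
    ScheduledDose("vaccine B", 2),
    ScheduledDose("vaccine B", 3),
    ScheduledDose("vaccine B", 4),
    ScheduledDose("vaccine C", 12),  # before 13 months, counted
    ScheduledDose("vaccine C", 13),  # at 13 months, not counted
    ScheduledDose("vaccine B", 16),  # booster after infancy, not counted
]

infant_doses = count_infant_doses(example_schedule)
print(infant_doses)
```

Whether a combination shot counts as one dose or as several is a separate decision that this sketch does not make; it simply counts schedule entries.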

ZERO, zilch! The confidence intervals don’t even overlap between studies, despite the small samples. One cannot attribute this to too little variation in the newer data. The standard deviations for total doses are 4.14 and 3.86 across datasets.
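To make the non-overlap claim concrete, here is a rough sketch of the usual Fisher z-transform confidence interval for a correlation. The original study's r = 0.70 and n = 34 come from the paper's abstract. The values for the new data (r = 0 and n = 30) are assumptions chosen only to stand in for "about zero" and "about the same size"; they are not the exact numbers from my analysis.

```python
import math


def fisher_ci(r, n, z_crit=1.959964):
    """Approximate 95% confidence interval for a Pearson r (Fisher z-transform)."""
    z = math.atanh(r)              # transform r to the z scale
    se = 1.0 / math.sqrt(n - 3)    # standard error on the z scale
    lower = math.tanh(z - z_crit * se)
    upper = math.tanh(z + z_crit * se)
    return lower, upper


# Values reported in the Miller & Goldman abstract.
original_ci = fisher_ci(0.70, 34)

# ASSUMPTION: illustrative stand-ins for the newer data, not the real estimates.
replication_ci = fisher_ci(0.0, 30)

# True when the two intervals share no values.
intervals_disjoint = replication_ci[1] < original_ci[0]
print(original_ci, replication_ci, intervals_disjoint)
```

Under these assumptions the original interval runs from roughly 0.47 to 0.84, while an interval centered on zero with n = 30 tops out around 0.36, so the two do not touch.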

What is going on? Are the data even consistent over time? Well…

Alright, so Germany has gone off the rails on its vaccine program, while the Netherlands has reversed course and now thinks fewer is the way to go. Aside from these two cases, the association is pretty good, r = .82.

The infant mortality data are similarly consistent-inconsistent. The bulk of the data are quite consistent, r = .51, but Malta is really an outlier. Either Maltese infants started dying a lot more than before, or these data have some comparability issues. The authors used CIA data. I’ve heard they are bad, so I used the UN data instead.
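One simple way to see how much a single country can move a small-n correlation is to drop each point in turn and recompute r. The sketch below does that in plain Python. The labels and numbers are synthetic and invented purely for illustration (country "F" is a planted outlier); they are not the vaccine or mortality data discussed here, and the function names are my own.

```python
import math


def pearson_r(xs, ys):
    """Plain Pearson correlation for two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def leave_one_out_r(labels, xs, ys):
    """Return {label: r computed with that one observation removed}."""
    result = {}
    for i, label in enumerate(labels):
        rest_x = xs[:i] + xs[i + 1:]
        rest_y = ys[:i] + ys[i + 1:]
        result[label] = pearson_r(rest_x, rest_y)
    return result


# SYNTHETIC data for illustration only; "F" is a deliberately planted outlier.
labels = ["A", "B", "C", "D", "E", "F"]
old_values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
new_values = [1.1, 2.0, 2.9, 4.2, 5.1, 0.5]

full_r = pearson_r(old_values, new_values)
loo = leave_one_out_r(labels, old_values, new_values)
most_influential = max(loo, key=lambda k: abs(loo[k] - full_r))
print(round(full_r, 2), most_influential)
```

With only a handful of points, one odd observation is enough to turn a near-perfect association into a weak one, which is the pattern the Germany, Netherlands, and Malta cases suggest.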

Take-away

I don’t really think vaccine programs for infants kill them, though I could be wrong, and maybe they cause a slight increase in mortality risk. I think the main take-away of this replication study is that these kinds of national, small-n studies are really sensitive to the data sources, and even to the year of the data. In this case, on inspection of various plots, we can tell that a few countries behave strangely in the data, and this explains the difference, maybe. I try to think of results like these whenever I see some plausible-looking but small-n country-level study. The large majority of studies are never replicated (no one even makes sure the code works and produces the claimed results), so we don’t know what percentage of country-level studies would replicate as well as this one did. Isn’t that just a bit crazy? Also, this study has been cited 94 times, so maybe someone has already looked more closely at these kinds of data; I don’t know. Or maybe 94 other studies are taking the findings seriously and trying to build houses of sand on them.