While looking for peer-review related studies, I came across a meta-analysis of gender bias in grant applications. That sounds good.

Bornmann, L., Mutz, R., & Daniel, H. D. (2007). Gender differences in grant peer review: A meta-analysis. Journal of Informetrics, 1(3), 226-238.

Abstract

Narrative reviews of peer review research have concluded that there is negligible evidence of gender bias in the awarding of grants based on peer review. Here, we report the findings of a meta-analysis of 21 studies providing, to the contrary, evidence of robust gender differences in grant award procedures. Even though the estimates of the gender effect vary substantially from study to study, the model estimation shows that all in all, among grant applicants men have statistically significant greater odds of receiving grants than women by about 7%.

Sounds intriguing? Knowing that publication bias, especially on the part of the authors, would be a problem with these kinds of studies (there is strong political motivation to publish studies finding bias against females), I immediately searched for keywords related to publication bias… but didn’t find any. Then I skimmed the article. Very odd. Who does a meta-analysis on stuff like this, or most stuff anyway, without checking for publication bias?

TL;DR funnel plot. Briefly, publication bias happens when authors tend to send in papers that found results they liked rather than studies that failed to find results they liked. It can also result from biased reviewing. Since most social scientists believe in the magic of p<.05, this means scholars tend to publish studies meeting that arbitrary demand and not those that didn’t. Furthermore, when scholars do a lot of sciencing, gathering a lot of data, they are also more likely to submit due to having a larger amount of time invested in the project. These two together mean that there is an interaction between effect size and direction and the N. People who spent lots of time doing a huge study will generally be very likely to publish it even tho the results weren’t as expected. But people who run small studies will to a higher degree not bother writing up a small paper with negative results. This means that among published studies there will be a negative correlation between sample size and the preferred outcome, in this case bias against females.
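To make the mechanism concrete, here is a minimal simulation sketch. Everything in it is an assumption for illustration: the true effect is set to zero, the sample sizes and the 1.645 cutoff are arbitrary, large studies are always published (a simplification of "very likely"), and small studies are published only when they show the liked result.

```python
import random
from math import sqrt

def simulate(n_studies=2000, seed=0):
    """Toy model of publication bias; all settings are illustrative assumptions."""
    rng = random.Random(seed)
    published = []
    for _ in range(n_studies):
        n = rng.choice([50, 100, 500, 5000])   # hypothetical sample sizes
        se = 1 / sqrt(n)                       # standard error shrinks with N
        effect = rng.gauss(0.0, se)            # true effect is zero
        liked = effect > 1.645 * se            # "significant" in the liked direction
        # Large studies always get written up; small ones only if the result is liked.
        if n >= 5000 or liked:
            published.append((n, effect))
    return published

published = simulate()
small = [e for n, e in published if n < 5000]
large = [e for n, e in published if n >= 5000]
mean_small = sum(small) / len(small)
mean_large = sum(large) / len(large)
print(f"mean published effect, small studies: {mean_small:.3f}")
print(f"mean published effect, large studies: {mean_large:.3f}")
```

Even with no real effect, the published small studies show a clearly positive average while the large ones hover around zero, which is the negative relation between N and effect size that a funnel plot is meant to reveal.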

Back to the meta-analysis. Luckily, the authors provide the data needed to check for bias. Their Table 1 has sample size (“Number of submitted applications”) and two datapoints one can calculate an effect size from (“Proportion of women among all applicants”, “Proportion of women among approved applicants”). Simply subtract the proportion of women among approved applicants from the proportion of women among all applicants to get a female disfavor measure. (Well, actually it may just be that women write worse applications, so it does not imply bias at all.) Then correlate this with the sample size.
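The calculation just described can be sketched in a few lines of Python. The rows below are hypothetical placeholders, not the values from Table 1, and the function names are my own; swap in the real table to reproduce the analysis.

```python
from math import sqrt

def disfavor(prop_applicants, prop_approved):
    """Proportion of women among all applicants minus proportion among approved."""
    return prop_applicants - prop_approved

def pearson_r(xs, ys):
    """Plain Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)

# Hypothetical rows (NOT the Table 1 data):
# (number of submitted applications, prop. women among applicants, prop. women among approved)
studies = [
    (100, 0.30, 0.22),
    (500, 0.28, 0.24),
    (2000, 0.25, 0.23),
    (10000, 0.20, 0.20),
]

n_apps = [s[0] for s in studies]
effects = [disfavor(s[1], s[2]) for s in studies]
r = pearson_r(n_apps, effects)
print(f"r(N, disfavor) = {r:.2f}")
```

A negative r means the smaller studies report more female disfavor than the larger ones, which is the pattern publication bias would produce.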

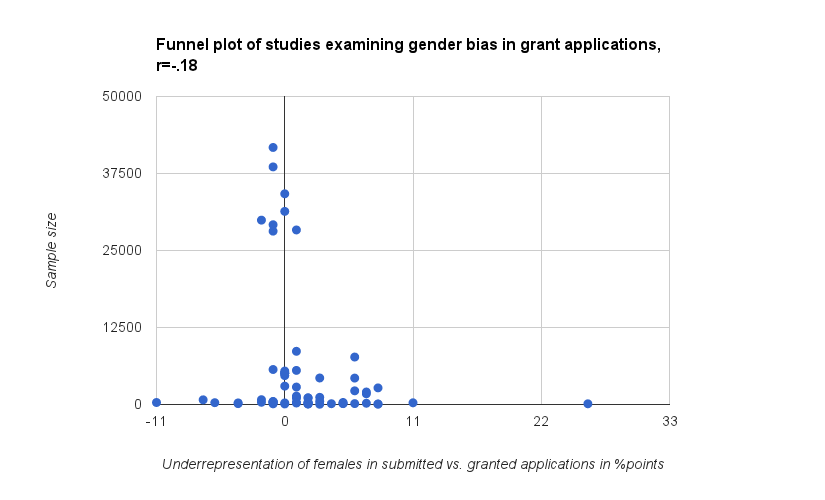

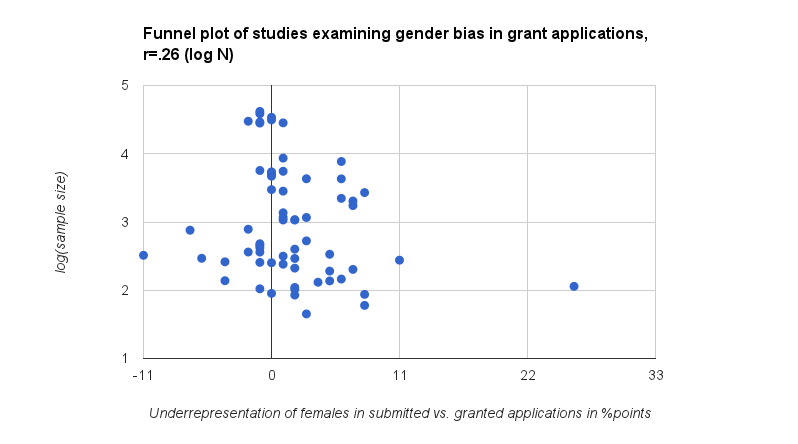

So the data is here. The funnel plot is below.

There were indeed signs of publication bias. The simple N x effect size correlation did not reach p<.05. However, there is some question as to which measure of sample size one should use. The relationship is clearly not linear, thus violating the assumptions of linear regression/Pearson correlations. This page lists a variety of other options, the best perhaps being standard error, which however is not given by the authors. Here’s a funnel plot for log transformed sample sizes. This one has p=.04, so barely below the magic line.
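For anyone who wants to redo the log-transformed version, here is a rough sketch. The numbers are again hypothetical stand-ins rather than the Table 1 values, and the helper names are mine. The t statistic is the standard conversion for a Pearson correlation; with 21 studies (19 degrees of freedom) the two-tailed .05 cutoff is about |t| > 2.09.

```python
from math import log10, sqrt

def pearson_r(xs, ys):
    """Plain Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)

def t_statistic(r, n):
    """t value for testing r against zero, with n - 2 degrees of freedom."""
    return r * sqrt((n - 2) / (1 - r ** 2))

# Hypothetical data (NOT the Table 1 values)
n_apps = [100, 500, 2000, 10000, 50000]
effects = [0.08, 0.05, 0.03, 0.02, 0.00]

log_n = [log10(n) for n in n_apps]           # compress the skewed sample sizes
r_raw = pearson_r(n_apps, effects)
r_log = pearson_r(log_n, effects)
t_log = t_statistic(r_log, len(n_apps))
print(f"raw N: r = {r_raw:.2f}; log10 N: r = {r_log:.2f}, t = {t_log:.2f}")
```

In this toy data the log transform pulls in the few huge studies so they no longer dominate the correlation. Whether it changes the conclusion for the real data depends on the actual table.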