Maybe you are thinking: wait, I thought IQ was more or less the same as intelligence? IQ tests do measure intelligence well, right?

Well, sometimes it is, sometimes it is not:

- IQ is just an arbitrary scale based on deviation scores from age-norms. It’s how many standard deviations out you are from the average of age-peers on that test rescaled to more human-friendly numbers (i.e., your z score times 15 + 100)

- In the past it was computed with a specific formula from chronological age and test-performance age (“mental age”), hence ‘intelligence quotient’: a quotient is a ratio. The name is now a misnomer.

- Intelligence is the trait

- g is the psychological construct intended to capture this trait

- This is what Jensen 1998 is all about
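To make the two scoring schemes above concrete, here is a minimal sketch. The function names and the norming sample are made up for illustration; real tests use large, age-stratified norm samples and more elaborate norming procedures.

```python
from statistics import mean, stdev


def deviation_iq(raw_score, norm_scores):
    """Modern deviation IQ: z score against age-peers, rescaled to mean 100, SD 15."""
    z = (raw_score - mean(norm_scores)) / stdev(norm_scores)
    return 100 + 15 * z


def ratio_iq(mental_age, chronological_age):
    """Historical ratio IQ: mental age divided by chronological age, times 100."""
    return 100 * mental_age / chronological_age


# Hypothetical raw scores of an age-matched norming sample (made-up numbers).
norm_sample = [20, 25, 30, 35, 40]

print(deviation_iq(30, norm_sample))  # a raw score at the norm mean maps to 100
print(ratio_iq(12, 10))  # a 10-year-old performing like a typical 12-year-old
```

Note that the deviation score depends entirely on the chosen norm sample, which is why the same raw score can correspond to different IQs under different norms.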

So to be philosophically clear, IQ ≠ g ≠ intelligence. Mixing them up is a category error. However, in many practical contexts, they can stand in for each other. When we say that IQ is important for life, we are talking somewhat loosely, in the same way we say that a car’s engine displacement in liters is important for fuel efficiency. It’s a quantification of an aspect; it’s not exactly a synecdoche, but in that direction.

Per the jingle-jangle fallacy, we also call some tests IQ tests, while others we call scholastic tests, cognitive tests, health literacy tests, and so on. As a matter of fact, all such tests mainly measure g, as is clearly revealed whenever studies are undertaken to compare them, so they are all intelligence tests, no matter what we call them. This naming obscurantism is probably mostly not on purpose. Cognitive scientists probably believe that their measures of n-back, inspection time, and so on really measure distinct brain processes, or whatever they label them. Sometimes the obscurantism is on purpose, as when SAT employees keep denying that the SAT is an intelligence test. This is because intelligence tests are more stigmatized than mere scholastic tests. The name SAT used to stand for Scholastic Aptitude Test (later Assessment), but now it literally stands for nothing (a pseudo-acronym). In the same way, one can get away with measuring intelligence in many surveys, as long as one calls the test something else, like a vocabulary test or a figure reasoning test.

The distinctions between IQ, g, and intelligence are important in certain contexts. Since IQ is literally just a score, it can be easily changed. For instance, if I give you a test along with its answer sheet, you will hopefully attain a perfect score and get an IQ result of 130-140, the ceiling of the test. Now, clearly, this does not mean you are actually very smart. Rather, we have done the measurement incorrectly. It is akin to measuring the temperature at the top of Mount Everest by putting a thermometer in your mouth while standing there. This will certainly give you a temperature measurement, it will be higher than anyone else has measured there, and it really was taken on the mountain top, but it is very wrong.

Measuring intelligence or g using deviation scores is not as nice as we would like. It’s merely an interval scale. The difference between 90 and 100 IQ is the same as that between 120 and 130. Not necessarily in relation to some outcome (say, school graduation, which will follow approximately a logistic function), but in relation to certain psychometric properties, and things like regression towards the mean. The IQ score is set at an average of 100 for the norming population (at that time). This is totally arbitrary, and might as well be -200, 1600, or any other number. The standard deviation of 15 is also arbitrary. These numbers do not have any relationship to reality, but are chosen for convenience. As a matter of fact, 100 was chosen to avoid the use of decimals when using the mental age equation. This arbitrariness of the scale, in particular the arbitrary zero point, means one cannot meaningfully use the mathematical operations of multiplication and division. These can only be used with ratio scales.

The closest analogy here is the Celsius scale (or Fahrenheit, but we are not that backwards on this blog). You can measure temperature on this scale using any standard thermometer. You also know that 0 Celsius is not really zero temperature, because one can go lower. The lack of a true zero on the Celsius scale means one cannot do multiplication or division with it either. 10 C is not 10 times as hot as 1 C, in the same way that 10 C is not -1 times as hot as -10 C. The operation is not meaningful. The same goes for IQ. Someone with 130 IQ is not 30% smarter than someone at 100 IQ, since percentages involve division. For temperature, fortunately, we do have a proper scale called Kelvin. This has a real 0. 0 Kelvin really is zero heat, which is also related to zero movement of particles (reductionism at its finest). 10 K is really 10 times as warm as 1 K. As a matter of fact, we didn’t always have nice temperature scales; we used to rely on stuff like quicksilver.

No one has figured out how to quantify intelligence using a proper ratio scale. This is a very important goal, and overlaps with research in ‘artificial’ and non-human intelligence. Jose Hernandez-Orallo wrote a book on this project (The Measure of All Minds: Evaluating Natural and Artificial Intelligence), which I haven’t read yet.
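The point about ratios can be checked with a few lines of arithmetic. This is only an illustrative sketch: the helper names are mine, and the alternative IQ metric (mean 500, SD 100) is an arbitrary choice made up for the example. On an interval scale, the ratio of two values changes when you switch to an equally valid scale, while differences measured in standard deviations do not.

```python
def celsius_to_kelvin(c):
    """Convert Celsius to Kelvin (shifts the zero point to absolute zero)."""
    return c + 273.15


def celsius_to_fahrenheit(c):
    """Convert Celsius to Fahrenheit (another scale with an arbitrary zero)."""
    return c * 9 / 5 + 32


def rescale_iq(iq, new_mean, new_sd):
    """Re-express an IQ (mean 100, SD 15) on another arbitrary mean/SD metric."""
    z = (iq - 100) / 15
    return new_mean + new_sd * z


warm_c, cold_c = 10.0, 1.0
# The "ratio" of the same two temperatures depends on the scale used.
print(warm_c / cold_c)
print(celsius_to_fahrenheit(warm_c) / celsius_to_fahrenheit(cold_c))
print(celsius_to_kelvin(warm_c) / celsius_to_kelvin(cold_c))

# Same story for IQ: the apparent "30% smarter" is an artifact of the metric.
print(130 / 100)
print(rescale_iq(130, 500, 100) / rescale_iq(100, 500, 100))

# The gap in standard deviation units is the same on both metrics.
print((130 - 100) / 15)
print((rescale_iq(130, 500, 100) - rescale_iq(100, 500, 100)) / 100)
```

Only the Kelvin ratio has a physical interpretation, because only Kelvin has a true zero. For IQ there is no analogous scale to convert to.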

Back to the point. What about those studies that show education increases IQ? The best meta-analysis of this is:

- Ritchie, S. J., & Tucker-Drob, E. M. (2018). How much does education improve intelligence? A meta-analysis. Psychological science, 29(8), 1358-1369.

Intelligence test scores and educational duration are positively correlated. This correlation could be interpreted in two ways: Students with greater propensity for intelligence go on to complete more education, or a longer education increases intelligence. We meta-analyzed three categories of quasi-experimental studies of educational effects on intelligence: those estimating education-intelligence associations after controlling for earlier intelligence, those using compulsory schooling policy changes as instrumental variables, and those using regression-discontinuity designs on school-entry age cutoffs. Across 142 effect sizes from 42 data sets involving over 600,000 participants, we found consistent evidence for beneficial effects of education on cognitive abilities of approximately 1 to 5 IQ points for an additional year of education. Moderator analyses indicated that the effects persisted across the life span and were present on all broad categories of cognitive ability studied. Education appears to be the most consistent, robust, and durable method yet to be identified for raising intelligence.

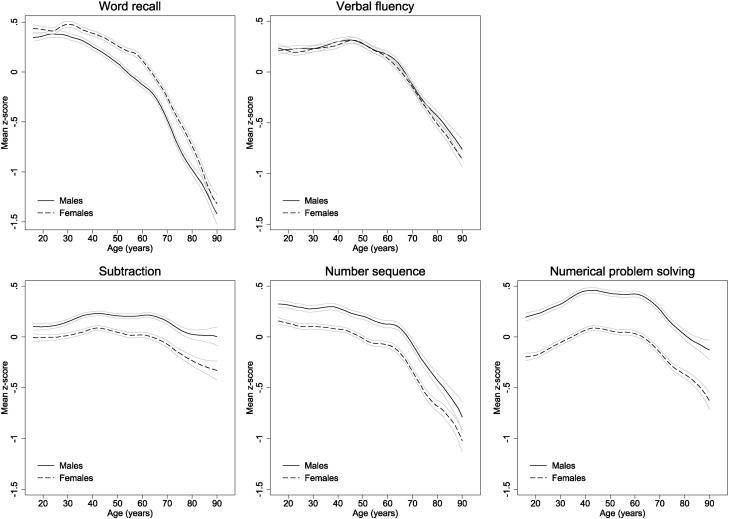

Both authors are solid researchers. I have no doubts about the reliability of the results. Yet, we cannot really believe the conclusions as stated. For instance, taken at face value, they imply that if we put a person in school for their entire adult life, say 50 years on top of regular schooling, their IQ would be boosted by 50 to 250 points (50 years times 1 to 5 points per year). Surely, there will be diminishing returns somewhere. We might also question their conclusion that this holds across any type of intelligence test. Does it really work for reaction time? Clearly, schooling has increased drastically over the last 50 years, both in terms of years and hours per year, yet we don’t find obvious increases on many basic mental tasks. For instance, here’s data on simple tests given to a large cross-section of the British population:

Subtraction is a pretty simple test. This one involves subtracting 7 from a number multiple times in a row. If education really boosted performance across the board, there would be more obvious upticks in these plots at younger ages. One could object that this mixes together cohort differences in education exposure with other cohort differences and with the effects of aging. This is true, but the effects claimed are really large, so we should see them despite other influences. Instead we mainly see cognitive decline with age, and only some gains for the numerical problem solving test. This is really just a summary of the Flynn effect debate. Why do some tests show increases, some show roughly no change, and some even decline?

It would be better if we had people who had taken multiple tests, and we used structural equation modeling to figure out how the pattern of IQ gains across tests should best be interpreted. There is in fact such a study:

- Ritchie, S. J., Bates, T. C., & Deary, I. J. (2015). Is education associated with improvements in general cognitive ability, or in specific skills?. Developmental psychology, 51(5), 573.

Previous research has indicated that education influences cognitive development, but it is unclear what, precisely, is being improved. Here, we tested whether education is associated with cognitive test score improvements via domain-general effects on general cognitive ability (g), or via domain-specific effects on particular cognitive skills. We conducted structural equation modeling on data from a large (n = 1,091), longitudinal sample, with a measure of intelligence at age 11 years and 10 tests covering a diverse range of cognitive abilities taken at age 70. Results indicated that the association of education with improved cognitive test scores is not mediated by g, but consists of direct effects on specific cognitive skills. These results suggest a decoupling of educational gains from increases in general intellectual capacity.

Wait, this is the same first author, and 3 years prior? Yep!

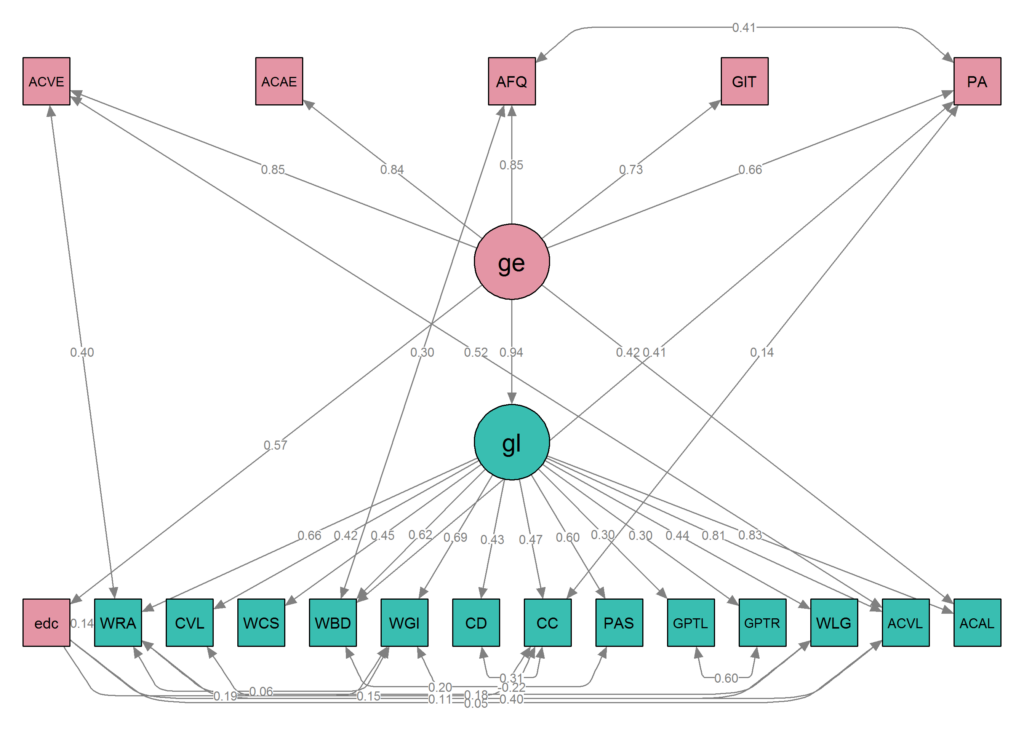

Here’s the model they came up with that fit their data best:

Figure 4. Path diagram of Model C, the best-fitting model, which had no path from education to g, but paths from education to seven cognitive subtests. Values are standardized path coefficients; only significant paths are shown. MHT = Moray House Test; Seq. = Sequencing; Sub. = Substitution; Assoc. = Associates.

In the model we see that intelligence measured at age 11 by the single test (top node) was highly related to intelligence measured in old age (.73), represented by the g factor measured by 10 tests (right), and also caused the years of education (left). Years of education, however, did not cause later life intelligence (no arrow to g), but did cause improvements on several tests, such as matrix reasoning and logical memory, and did not cause improvements on digit span backwards (no arrow from education). Their comparison of models showed that this model fit significantly better than the alternative model that posits an increase in intelligence, that is, g, itself (p = .001). So, it seems by these data that intelligence is not increased by education, but performance on some specific tests is. Insofar as these factor into an overall IQ score, there will be overall IQ gains. Thus, the conflict between the two Stuart Ritchie papers is not in the results, but in the theoretical implications and the talk about “raising intelligence”. It seems that later Stuart has forgotten the insights of earlier Stuart. Or maybe he now has different coauthors.

As far as I know, there is no published replication of this important study. There is an unpublished one. As in, really, very unpublished, as in a half-done R notebook that only experts can read. Basically, it replicates Stuart’s early results in that education increased some later life test scores, but not all of them, and not g itself when models are compared. The sample is the Vietnam Experience Study, so it’s about 4.4 times larger than the earlier study, and it used a shorter follow-up of 18 years (age 20 to 38), which should actually make effects easier to detect, as there is less time for fade-out. The correlation between age 20 and age 38 g is a whopping .945, which leaves not much variance to work with. Insofar as the study stands, this was the final plot:

Thus, in this final model, there’s a 0.94 correlation between age 20 and 38 intelligence (ge = g early life, gl = g later life). Early g causes education (edc in bottom left) at .57, and education causes some of the tests to increase, but does not cause later g. This is the same pattern as found by Ritchie’s study.

But we can go further. Instead of looking at whether education has increased IQ scores, we can look at life outcomes. After all, we are not interested in improving IQ scores per se. We are interested in whether further increases in educational spending will improve people’s life outcomes more generally. That’s the whole point of e.g. Head Start programs. Does more education do that? There’s such a study too:

- Clark, G., & Cummins, N. (2020). Does Education Matter? Tests from Extensions of Compulsory Schooling in England and Wales 1919-22, 1947, and 1972. Popsci write-up.

Schooling and social outcomes correlate strongly. But are these connections causal? Previous papers for England using compulsory schooling to identify causal effects have produced conflicting results. Some found significant effects of schooling on adult longevity and on earnings, others found no effects. Here we measure the consequence of extending compulsory schooling in England to ages 14, 15 and 16 in the years 1919-22, 1947 and 1972. From administrative data these increases in compulsory schooling added 0.43, 0.60 and 0.43 years of education to the affected cohorts. We estimate the effects of these increases in schooling for each cohort on measures of adult longevity, on dwelling values in 1999 (an index of lifetime incomes), and on the social characteristics of the places where the affected cohorts died. Since we have access to all the vital registration records, and a nearly complete sample of the 1999 electoral register, we find with high precision that all the schooling extensions failed to increase adult longevity (as had been found previously for the 1947 and 1972 extensions), dwelling values, or the social status of the communities people die in. Compulsory schooling ages 14-16 had no effect, at the cohort level, on social outcomes in England.

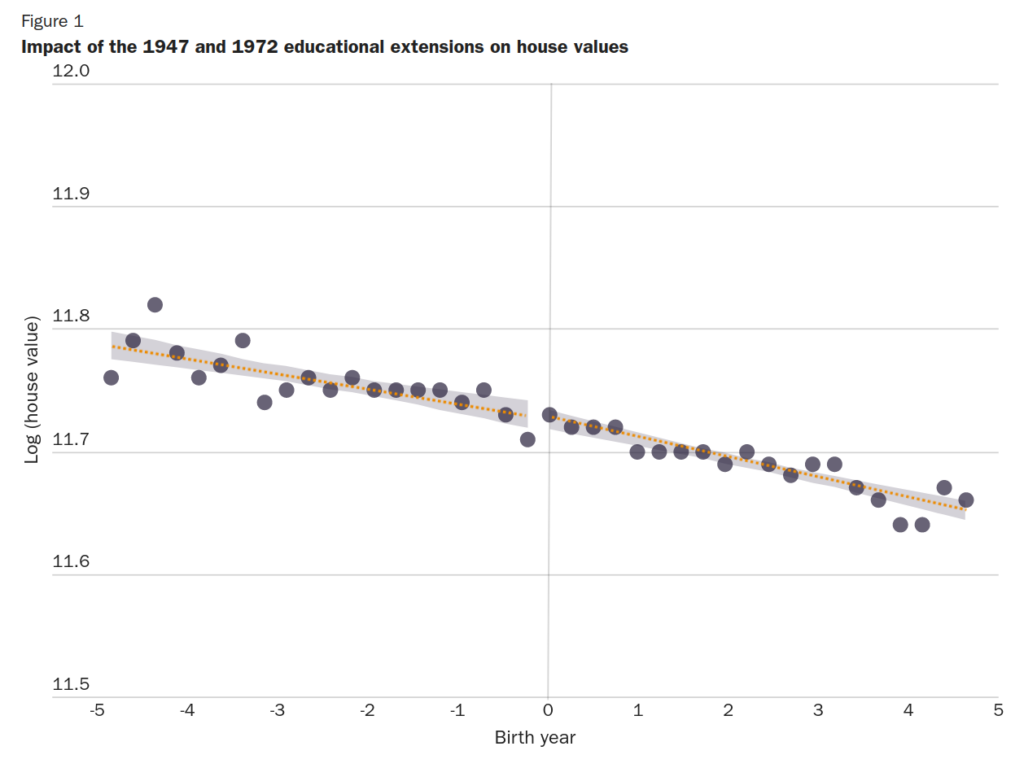

Like Ritchie and Tucker-Drob’s meta-analysis, it uses abrupt changes in schooling duration to check for causal effects (regression discontinuity design). Nothing is found, however. First, house values:

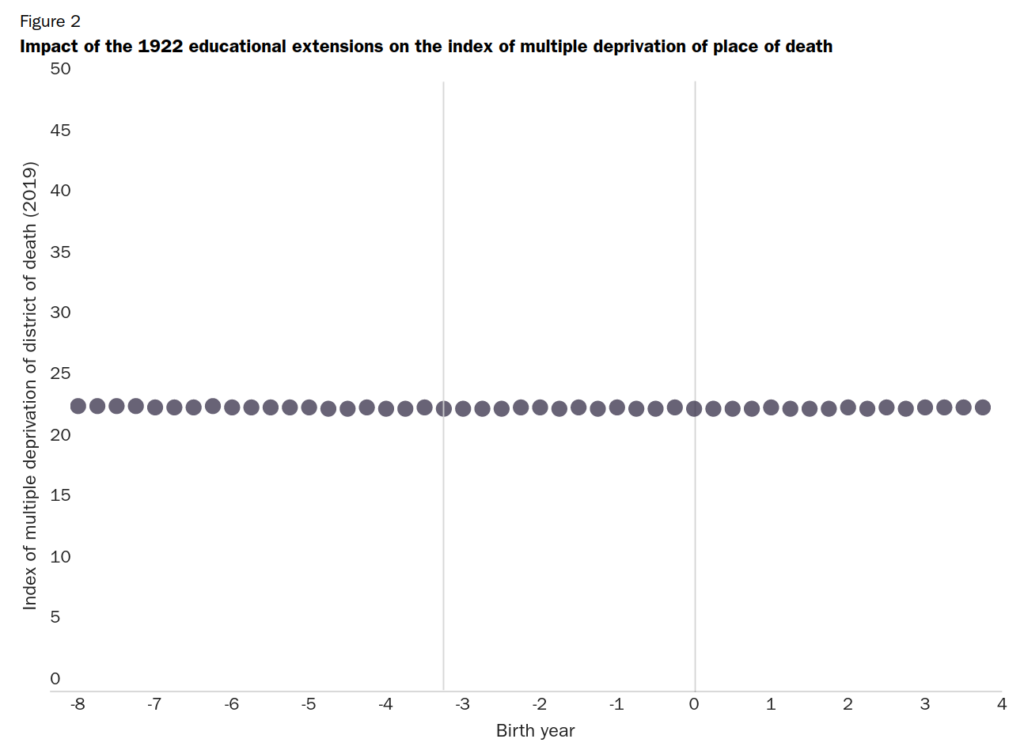

Or an index of deprivation at time of death:

If intelligence were raised by the observed IQ increase of 5 points per school year, this would have resulted in a gain of 0.43 * 5 = 2.15 IQ points for the 1922 reform, and that would be visible in such a large dataset of house prices. Using the more pessimistic-realistic value of 1 IQ point per year from the pre-post design, this would still be 0.43 IQ points, which should also be visible. Whatever non-cognitive benefits could be claimed (and these are frequently claimed) should also be visible here, but they are not. Lengthening education didn’t do anything, but it cost a lot of money and robbed children of more of their childhood (forced schooling = child slavery, quite literally, think about it).
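The same back-of-the-envelope calculation can be run for all three reforms. The sketch below uses only the years of schooling added as reported in the abstract, and the 1 and 5 points-per-year bounds from the meta-analysis. The function name is mine, and the strictly linear scaling is an assumption made for the sake of the argument.

```python
# Years of schooling added by each reform, as reported by Clark & Cummins.
years_added = {"1919-22": 0.43, "1947": 0.60, "1972": 0.43}

# Lower and upper bounds on IQ points per extra school year (meta-analysis range).
LOW_POINTS_PER_YEAR = 1
HIGH_POINTS_PER_YEAR = 5


def expected_iq_gain(years, points_per_year):
    """Cohort-level IQ gain expected if the per-year effect scales linearly."""
    return years * points_per_year


for reform, years in years_added.items():
    low = expected_iq_gain(years, LOW_POINTS_PER_YEAR)
    high = expected_iq_gain(years, HIGH_POINTS_PER_YEAR)
    print(f"{reform}: {low:.2f} to {high:.2f} IQ points expected")
```

Under these assumptions every reform should have shifted the affected cohort by somewhere between roughly half a point and three points.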

Conclusions

It is true: one can make IQ scores increase by increasing people’s exposure to education, at least to some extent. This is, however, limited to some kinds of tests. For instance, the recent Danish school reform lengthening instruction time (more hours per week) did not result in any increases in standardized test scores, and neither did the recent Swedish reform. Because of this lack of generality, the IQ increases cited do not represent an intelligence increase per se, but gains in some narrower abilities or departures from measurement invariance. They do not appear to transfer into real life outcomes when examined using very large British datasets from 3 school reforms that increased the duration of schooling by 0.43, 0.60 and 0.43 years.