Suppose you did some research that you want to publish. Say you investigated the difference between men and women on some number of traits that are relevant to your current thinking, say creativity, or morality, or intelligence, or self-control. After crunching the numbers, you discover that men are better off in one of these traits, say, self-control. How hard would it be to publish this result in a typical scientific journal compared to if the result had been the other way around? How hard would it be to convince the public of your findings? Well, one way to answer this kind of question is to make up fake study results (vignettes), then have people read them and rate their credibility. There are a number of studies that have done this kind of thing. Let’s look at them.

- Stewart‐Williams, S., Chang, C. Y. M., Wong, X. L., Blackburn, J. D., & Thomas, A. G. (2020). Reactions to male‐favouring versus female‐favouring sex differences: A pre‐registered experiment and Southeast Asian replication. British Journal of Psychology, 112(2), 389-411.

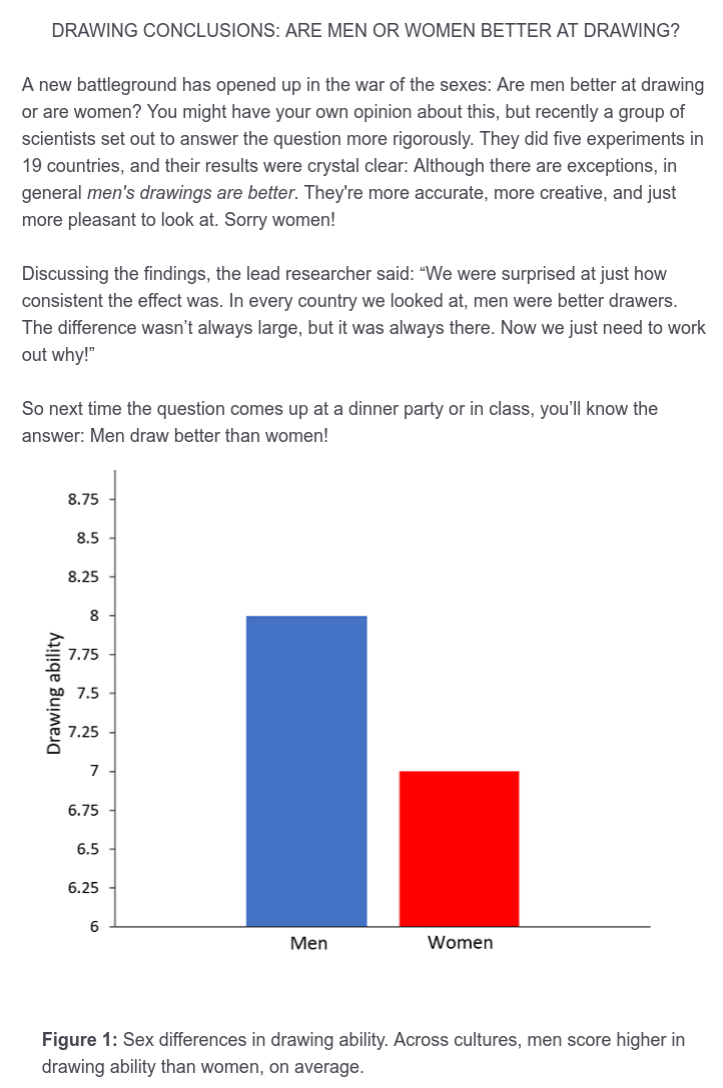

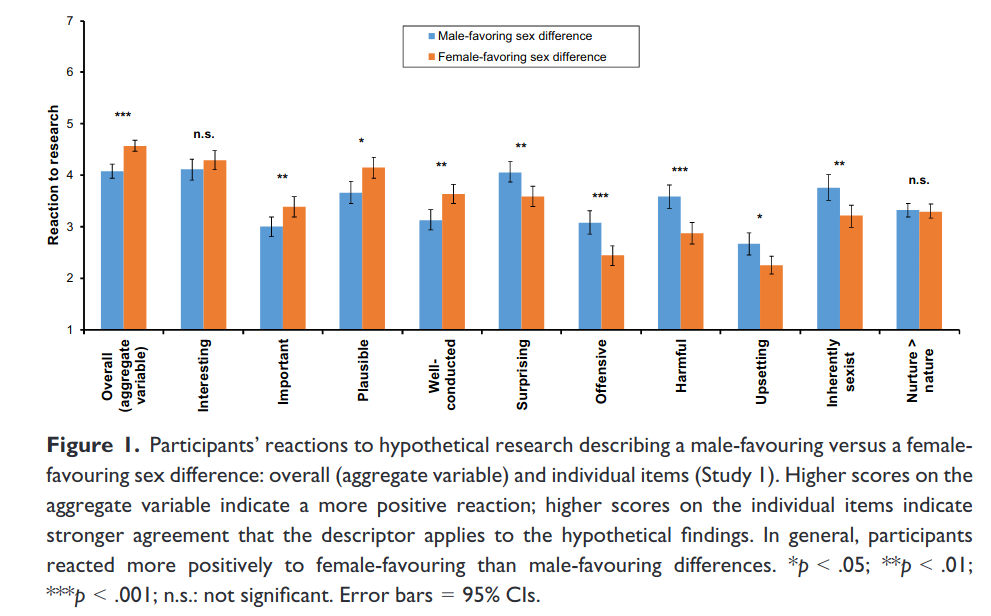

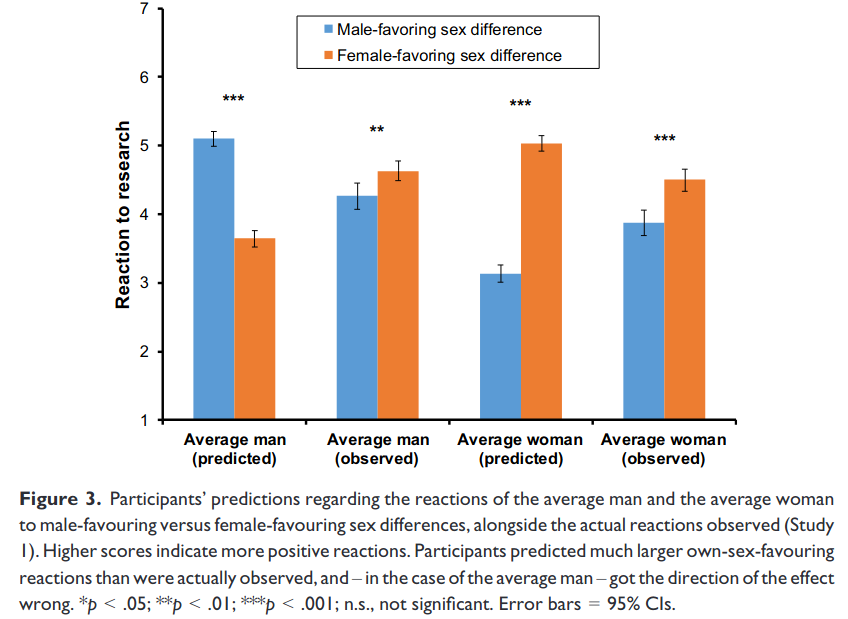

Two studies investigated (1) how people react to research describing a sex difference, depending on whether that difference favours males or females, and (2) how accurately people can predict how the average man and woman will react. In Study 1, Western participants (N = 492) viewed a fictional popular-science article describing either a male-favouring or a female-favouring sex difference (i.e., men/women draw better; women/men lie more). Both sexes reacted less positively to the male-favouring differences, judging the findings to be less important, less credible, and more offensive, harmful, and upsetting. Participants predicted that the average man and woman would react more positively to sex differences favouring their own sex. This was true of the average woman, although the level of own-sex favouritism was lower than participants predicted. It was not true, however, of the average man, who – like the average woman – reacted more positively to the female-favouring differences. Study 2 replicated these findings in a Southeast Asian sample (N = 336). Our results are consistent with the idea that both sexes are more protective of women than men, but that both exaggerate the level of same-sex favouritism within each sex – a misconception that could potentially harm relations between the sexes.

So there are two traits: drawing and lying. Here’s the fake study description for drawing, men-are-better version (I found it in their survey materials):

And here’s the version about lying:

The study was fairly large by traditional standards: “The final sample included 492 individuals: 256 men and 236 women. Of these, 313 came from Prolific.co and 179 came from Reddit”. Here are the results:

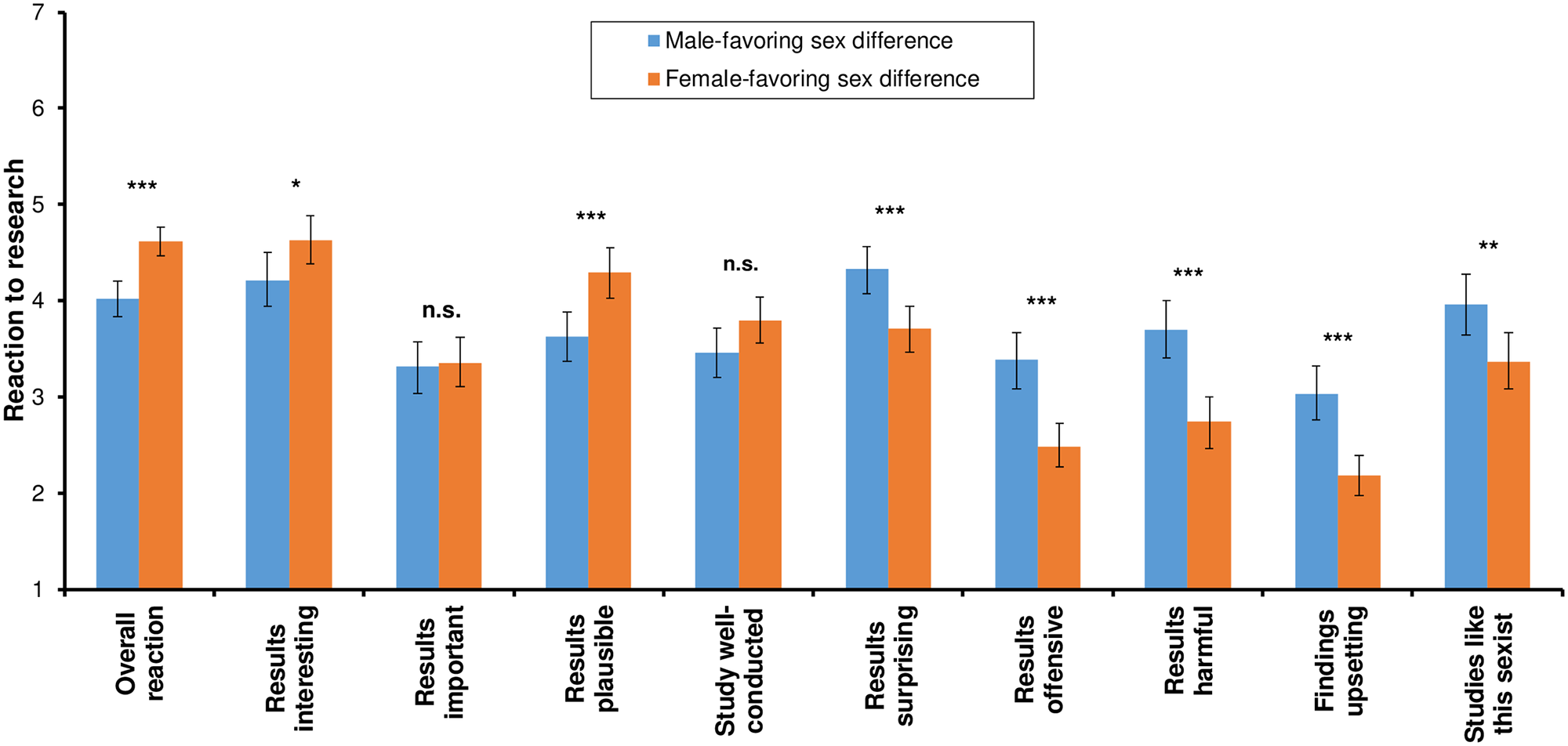

So when people were given a study about drawing ability, with a supposed sex difference and a fake figure too, they rated the research better overall, more important, more plausible, better conducted, less offensive, less harmful, less upsetting, less sexist when the results favored women over men.

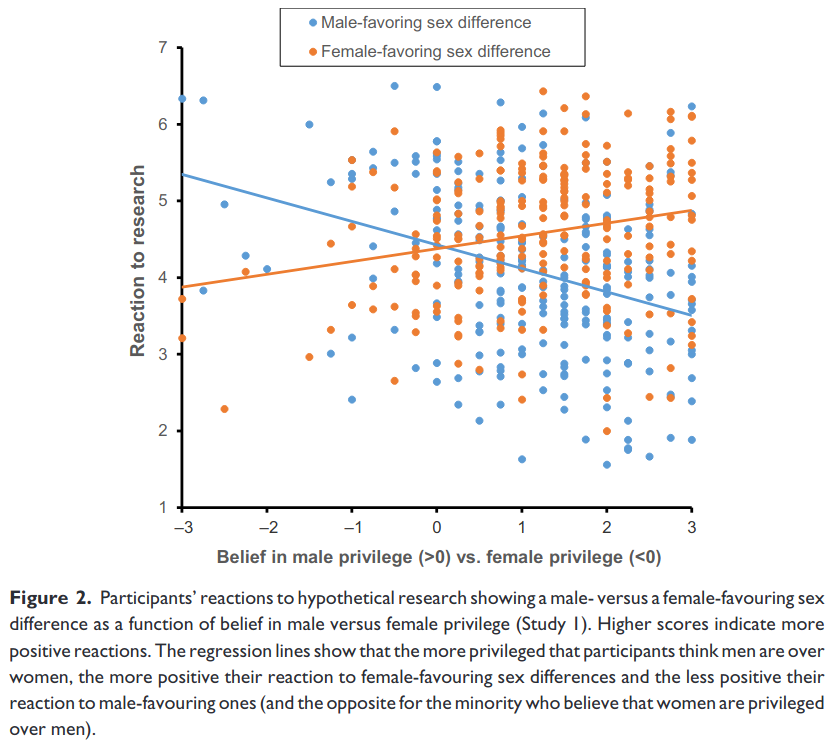

One can break the results down by whether people believed one sex was worse off in society, i.e., who is privileged:

As very few people believe women are privileged, the left side of the plot is mostly empty. Going further, they asked people to predict what the results would be for the other sex:

And they managed to find a case of stereotype inaccuracy! While men showed slight favoritism to women-favoring results, women predicted them to show a large pro-male preference. Men predicted women would favor women-are-better, which they did, but not as much as men thought.

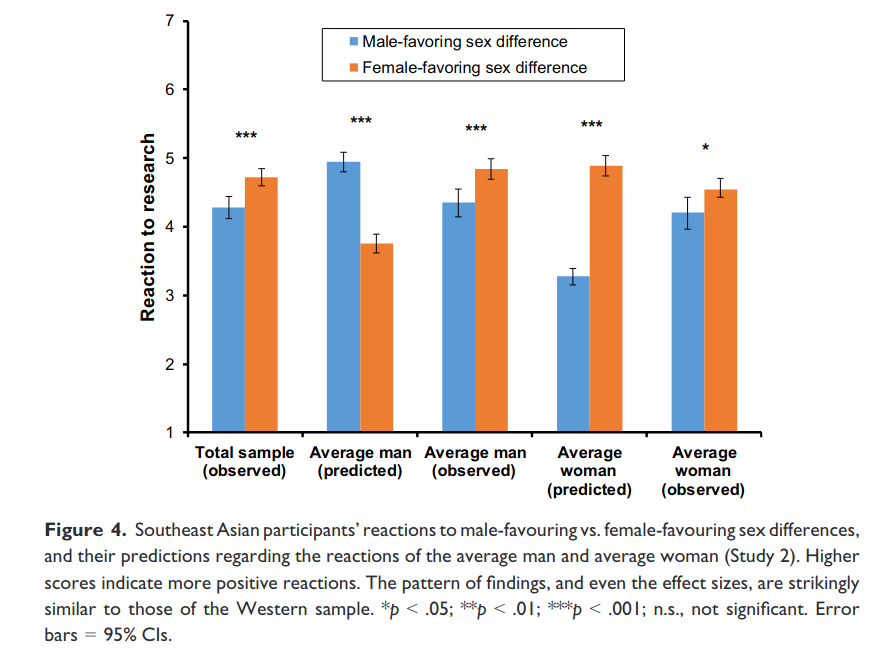

Is this just a Western thing? The authors thought that might be the case, so they recruited a Southeast Asian sample (N = 336 according to the abstract), mainly from: “Brunei (41.4%), Malaysia (20.8%), Indonesia (10.1%), or the Philippines (8.3%). Ethnically, most were Southeast Asians (69.9%), East Asians (31.8%), or South Asians (4.8%) living in Southeast Asia. Around 38.7% were Muslims, 18.5% were Christians, 12.5% were Buddhists, and 26.2% had no religion.”, and the results:

The results were basically the same. Pro-female preference for drawing ability was seen in Asians too.

The first replication

Their study got a lot of attention on Twitter but not in the news, and they wanted to check whether the finding held up, so they ran it again:

- Stewart-Williams, S., Wong, X. L., Chang, C. Y. M., & Thomas, A. G. (2022). People react more positively to female-than to male-favoring sex differences: A direct replication of a counterintuitive finding. PloS one, 17(3), e0266171.

We report a direct replication of our earlier study looking at how people react to research on sex differences depending on whether the research puts men or women in a better light. Three-hundred-and-three participants read a fictional popular-science article about fabricated research finding that women score higher on a desirable trait/lower on an undesirable one (female-favoring difference) or that men do (male-favoring difference). Consistent with our original study, both sexes reacted less positively to the male-favoring differences, with no difference between men and women in the strength of this effect. Also consistent with our original study, belief in male privilege and a left-leaning political orientation predicted less positive reactions to the male-favoring sex differences; neither variable, however, predicted reactions to the female-favoring sex differences (in the original study, male-privilege belief predicted positive reactions). As well as looking at how participants reacted to the research, we looked at their predictions about how the average man and woman would react. Consistent with our earlier results, participants of both sexes predicted that the average man and woman would exhibit considerable own-sex favoritism. In doing so, they exaggerated the magnitude of the average woman’s own-sex favoritism and predicted strong own-sex favoritism from the average man when in fact the average man exhibited modest other-sex favoritism. A greater awareness of people’s tendency to exaggerate own-sex bias could help to ameliorate conflict between the sexes.

The sample is about 300 Prolific subjects from the USA and UK. Results:

Not sure why the authors bothered to run a direct replication using the same recruitment service. As the results the first time were reasonably clear, and based on planned analyses, it is hard to argue that the findings were due to coincidence. One would have to claim there was something wrong with the methods, or the subjects. Thus, repeating the study with the same kind of subjects and the same methods does not remove much doubt about the findings.

The second replication

OK, we get it, but the researchers are back with a third paper, their second replication, with some variations:

- Stewart‐Williams, S., Wong, X. L., Chang, C. Y. M., & Thomas, A. G. (2022). Reactions to research on sex differences: Effect of sex favoured, researcher sex, and importance of sex‐difference domain. British Journal of Psychology.

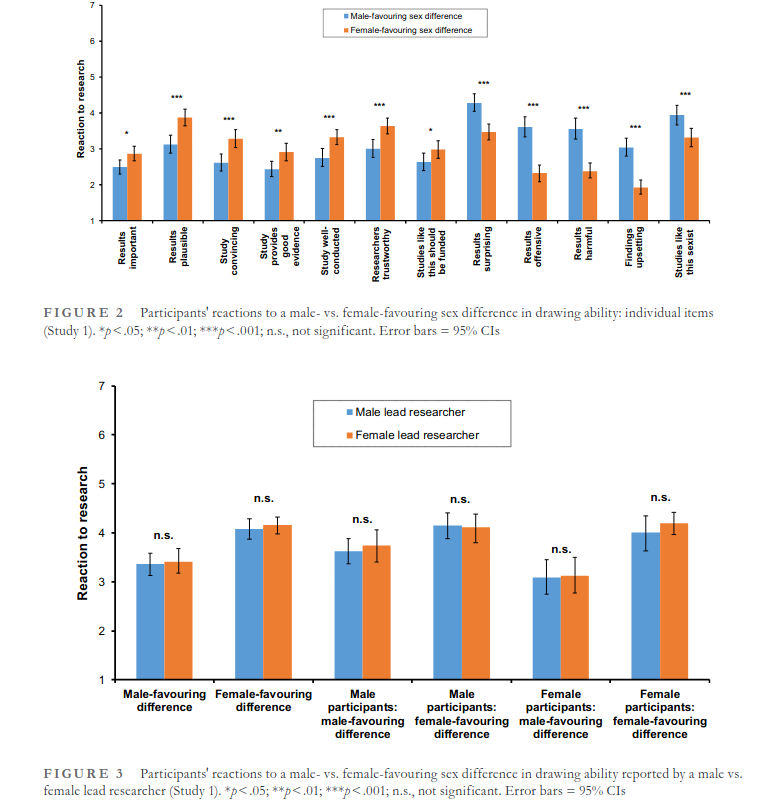

Two studies (total N = 778) looked at (1) how people react to research finding a sex difference depending on whether the research puts men or women in a better light and (2) how well people can predict the average man and average woman’s reactions. Participants read a fictional popular‐science article about fictional research finding either a male‐ or a female‐favouring sex difference. The research was credited to either a male or a female lead researcher. In both studies, both sexes reacted less positively to differences favouring males; in contrast to our earlier research, however, the effect was larger among female participants. Contrary to a widespread expectation, participants did not react less positively to research led by a female. Participants did react less positively, though, to research led by a male when the research reported a male‐favouring difference in a highly valued trait. Participants judged male‐favouring research to be lower in quality than female‐favouring research, apparently in large part because they saw the former as more harmful. In both studies, participants predicted that the average man and woman would exhibit substantial own‐sex favouritism, with both sexes predicting more own‐sex favouritism from the other sex than the other sex predicted from itself. In making these predictions, participants overestimated women’s own‐sex favouritism, and got the direction of the effect wrong for men. A greater understanding of the tendency to overestimate gender‐ingroup bias could help quell antagonisms between the sexes.

Sampling strategy is the same as before: some 350 Prolific subjects from USA and UK. Results were also about the same:

The interesting thing here is that they randomized the sex of the researcher who was mentioned in the article as having led the research team that did the study. This apparently didn’t affect the results. I would have expected men presenting men-better research to suffer a bit of extra stigma.

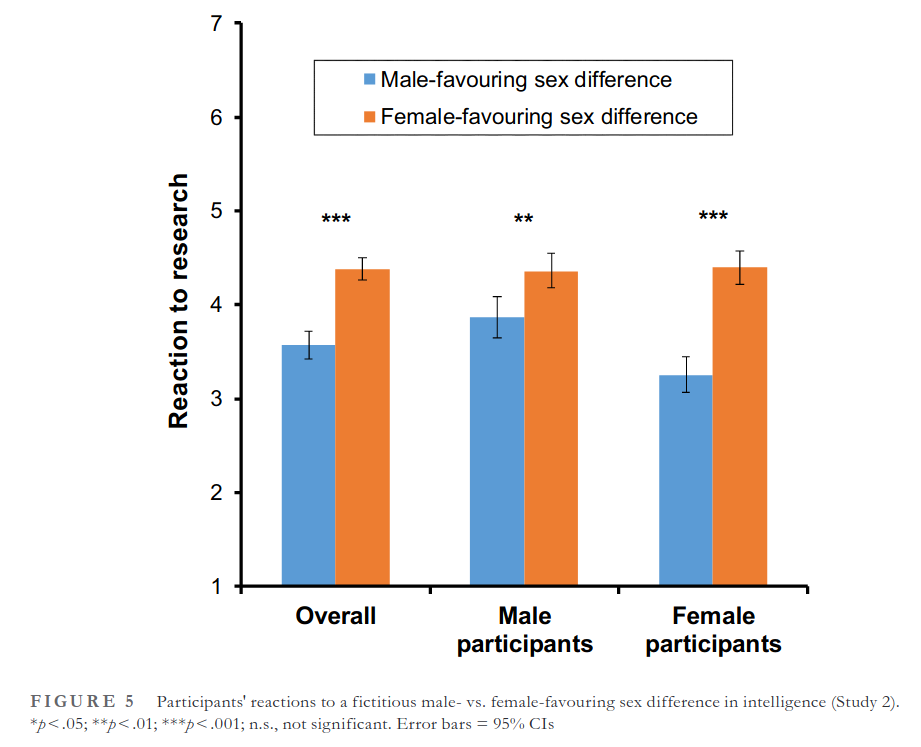

In their second study, they did the same thing, but this time with intelligence as the trait in question. The sample was also from Prolific. Results:

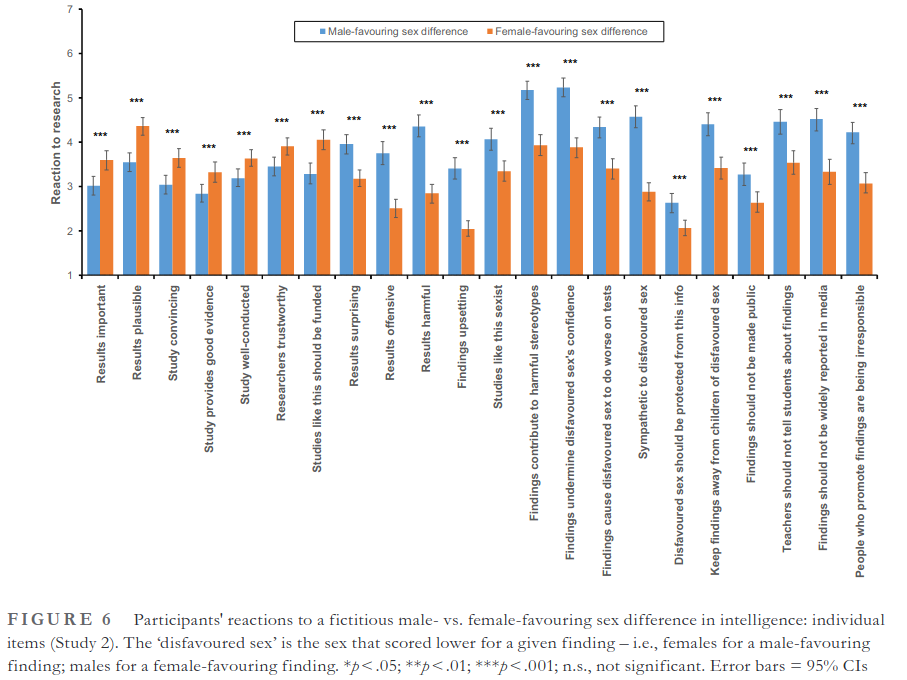

So both men and women rate research overall higher when it shows women are higher in intelligence. This is also true for each of the questions:

In fact, you’ve probably never seen a pre-registered figure with such clean results before. Every comparison is p < .001. People find a study showing greater female average intelligence to be more plausible, more convincing, to be providing better evidence, to have better evidence, to be done by more trustworthy researchers, less surprising, less offensive, less harmful, less upsetting, contributing less to harmful stereotypes and so on.

They also replicated the findings about women incorrectly stereotyping men as finding the men-better study plausible, whereas real men don’t. We’ve seen this result twice already, so I won’t bother with the details. They find some weak evidence of extra negative opinions against men who do research showing men-better results, but it was only p < .05 with this sample size, so probably a small effect.

The big equalitarianism paper

Saving the best for last, let’s look at this eternal preprint from Bo Winegard, Cory Clark, Connor Hasty, and Roy Baumeister. Winegard famously got fired for crime think, and Clark suffered some employment issues as well (details sealed). As a matter of fact, the authors have been trying to publish this paper for close to half a decade at this point. The preprint is dated 2018, and as of 2022, it is not published. As the other researchers above were able to publish their findings about sex differences, you might wonder why this one is so much more difficult to publish.

- Winegard, B., Clark, C., & Hasty, C. R. (2018). Equalitarianism: A Source of Liberal Bias. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.3175680

Recent scholarship has challenged the long-held assumption in the social sciences that Conservatives are more biased than Liberals, yet little work deliberately explores domains of liberal bias. Here, we demonstrate that Liberals are particularly prone to bias about victims’ groups (e.g. Blacks, women) and identify a set of beliefs that consistently predict this bias, termed Equalitarianism. Equalitarianism, we believe, stems from an aversion to inequality and a desire to protect relatively low status groups, and includes three interrelated beliefs: (1) demographic groups do not differ biologically; (2) prejudice is ubiquitous and explains existing group disparities; (3) society can, and should, make all groups equal in society. This leads to bias against information that portrays a perceived privileged group more favorably than a perceived victims’ group. Eight studies (n=3,274) support this theory. Liberalism was associated with perceiving certain groups as victims (Studies 1a-1b). In Studies 2-7 and meta-analyses, Liberals evaluated the same study as less credible when the results concluded that a privileged group (men and Whites) had a more desirable quality relative to a victims’ group (women and Blacks) than vice versa. Ruling out alternative explanations of Bayesian (or other normative) reasoning, significant order effects in within-subjects designs in Studies 6 and 7 suggest that Liberals believe they should not evaluate identical information differently depending on which group is portrayed more favorably, yet do so. In all studies, higher equalitarianism mediated the relationship between more liberal ideology and lower credibility ratings when privileged groups were said to score higher on a socially valuable trait. Although not predicted a priori, meta-analyses also revealed Moderates to be the most balanced in their judgments. These findings indicate nothing about whether this bias is morally justifiable, only that it exists.

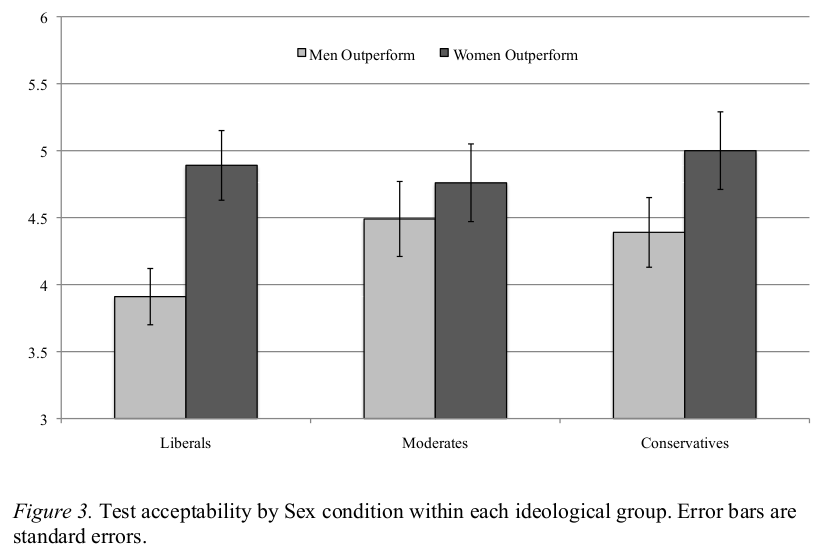

Though it is being blockaded, the study has received a decent amount of attention. In fact, it has about 12k views on ResearchGate and 41 citations (53 on GS). The paper is 100 pages long, because it has multiple studies in it. In their 2nd study (n=205, MTurk subjects), they had people read a description of a test, then answer whether it is fair, whether it should be used, etc.

In the past decade, the College Entrance Exam (CEE) has been given to high school students. It has been shown to have remarkable accuracy at predicting academic performance in college.

However, universities have been debating whether to use the exam or not because women/(men), on average, score much higher than men/(women) on the exam, leading to the acceptance of more women/(men) to college than men/(women).

The condition was varied at random between subjects (they saw only 1 version). Results:

So both leftists and conservatives were more favorable to a test that favors women than one that favors men, but the effect is larger for leftists. The error bars for moderates are too wide to say much, due to the small sample size. Their third study was similar (n=202 MTurk subjects), but used this study description:

Researchers from a large research institution have discovered a gene that might explain intelligence differences between Blacks and Whites. For many years, researchers have found that Blacks/(Whites) score higher on certain intelligence tests than Whites/(Blacks). Tom Berry and his colleagues have tried to find genetic causes for the disparity in intelligence scores, arguing that environmental explanations cannot explain the IQ gap. “There is simply no reasonable environmental explanation for the IQ gap that we can find or that other researchers have proposed,” Dr. Berry explained.

Berry and his team think they have an answer. They isolated a gene on the 21st chromosome that is reliably associated with higher IQ scores. The gene polymorphism, called THS-56RR, was first found in 1999, but researchers didn’t know that it was related to higher IQ scores. Berry and his team found that it was strongly related to IQ scores.

They also found that the gene is much more common in American Blacks/(Whites) than Whites/(Blacks). “About 93% of Blacks/(Whites) carry the gene,” Dr. Berry said, “whereas only 10% of Whites/(Blacks) carry it. We really think this might explain the IQ gap.”

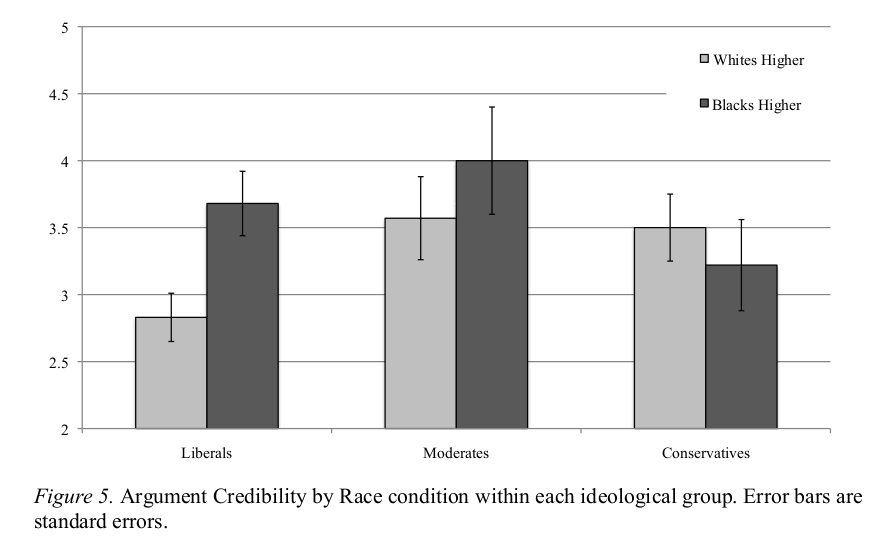

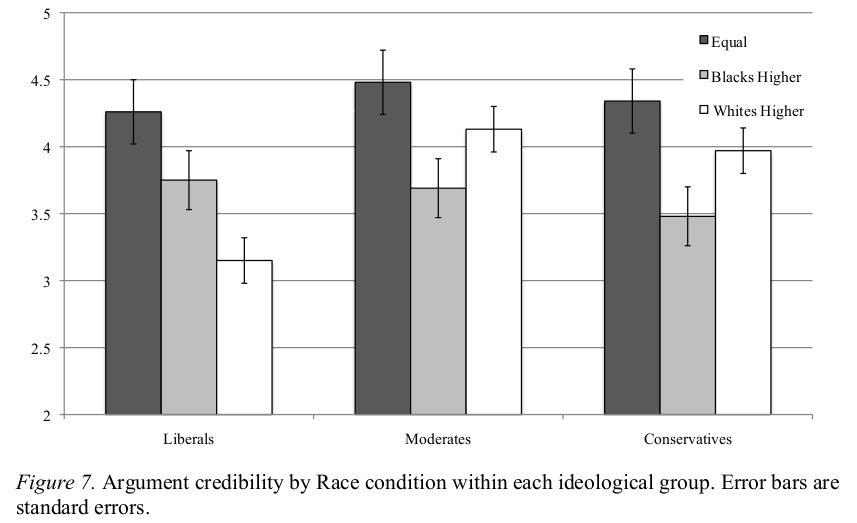

So now you can see why this paper is being censored more than the usual amount: race and intelligence. Results:

Moderates and conservatives assign about equal credibility to the study no matter who is favored, but leftists show a large bias in favor of Africans.

Study 4 (n=452 MTurk subjects) repeated study 3, but added a group-equality scenario, with this text:

Researchers from a large research institution have discovered a gene that might explain intelligence similarities among Blacks and Whites. For many years, researchers have found that Whites and Blacks score similarly on certain intelligence tests. Tom Berry and his colleagues have tried to find genetic causes for intelligence scores, arguing that environmental factors cannot explain IQ. “There is simply no reasonable environmental explanation for IQ differences within races that we can find or that other researchers have proposed,” Dr. Berry explained.

Berry and his team think they have an answer. They isolated a gene on the 21st chromosome that is reliably associated with higher IQ scores. The gene polymorphism, called THS-56RR, was first found in 1999, but researchers didn’t know that it was related to higher IQ scores. Berry and his team found that it was strongly related to IQ scores.

They also found that the gene is equally common in American Whites and Blacks. “About 60-65% of both Whites and Blacks carry the gene,” Dr. Berry said, “We really think this might explain similarities in intelligence scores between them.”

Results:

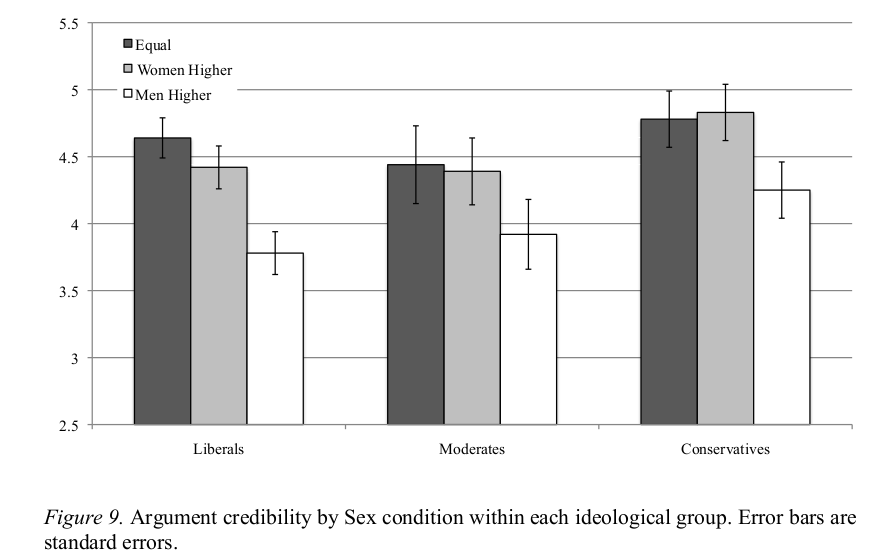

Here the results are very clean. Leftists prefer studies where groups are equal, and if not, then where Africans do better. Moderates and conservatives both show a preference for equal-outcomes, and if not, then Europeans do better. As such, based on these results one might say that leftists show pro-African or anti-European preference, and moderates/conservatives show pro-European or anti-African preference. Study 5 (n=450 MTurkers) is the same as above, but with sexes instead of races:

Oof! Conservatives and moderates prefer intelligence equality or women superiority about equally. Leftists prefer equality even over women superiority, maybe. No one believes male superiority! Very unfortunate as that is of course what the data mostly show. In studies 6 and 7, they used a within-person design, that is, they showed people both variants of the argument, but randomized the order. Some people saw the study description about men being smarter first, others the one about women being smarter first. Study 7 is about race, but otherwise works the same. Results:

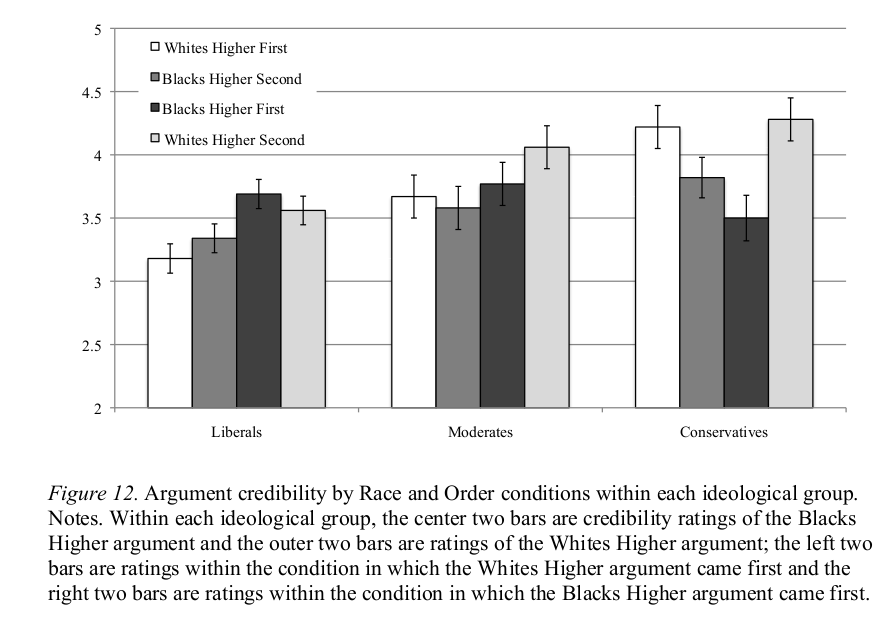

The way to read it is that the first two bars belong together (shown Europeans with higher scores first), and the latter two bars belong together (shown Africans with higher scores first). The trick here is to look at order effects, because these can signal that people are trying to be consistent with the rating they just gave to the previous version. But that doesn’t seem to be the case much, as leftists rated the Africans-higher version as more credible in both order versions, while conservatives and moderates did the opposite. There is a slight effect of order in that when leftists are shown Europeans higher first, their overall credibility rating is lower than when shown Africans higher first. This is also true for moderates, but not for conservatives. So the within-person study just ended up finding about the same as before. Here’s the sex version on the other hand:

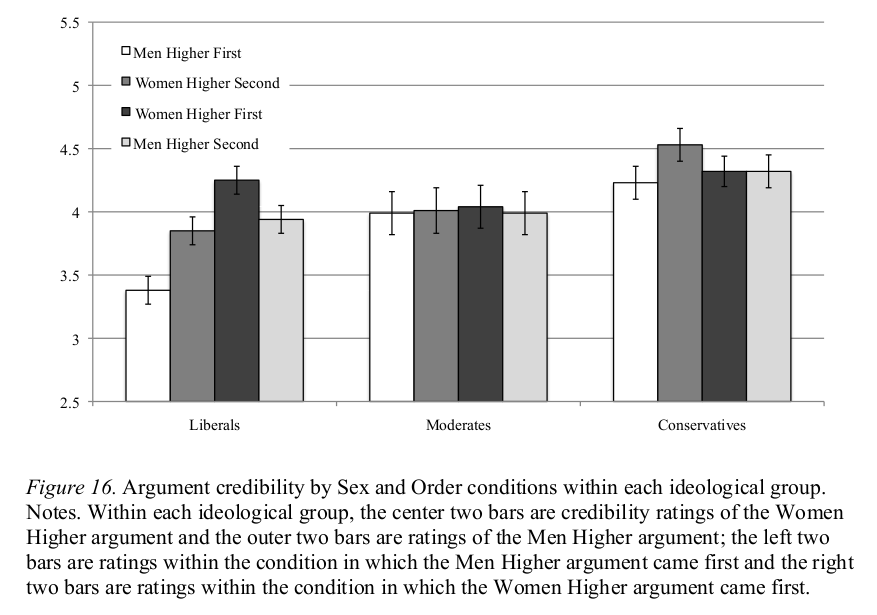

Moderates really are true moderates in this case, showing no order effects of any kind and no preference. True Centrist status achieved. 🧘

Conservatives shown men higher first end up saying women higher is more credible (pro-female) but not when the order is reversed. Hmm? Leftists show a massive effect of order on the overall ratings, but in both cases rated women higher as more credible. So these results just replicate what we saw before.

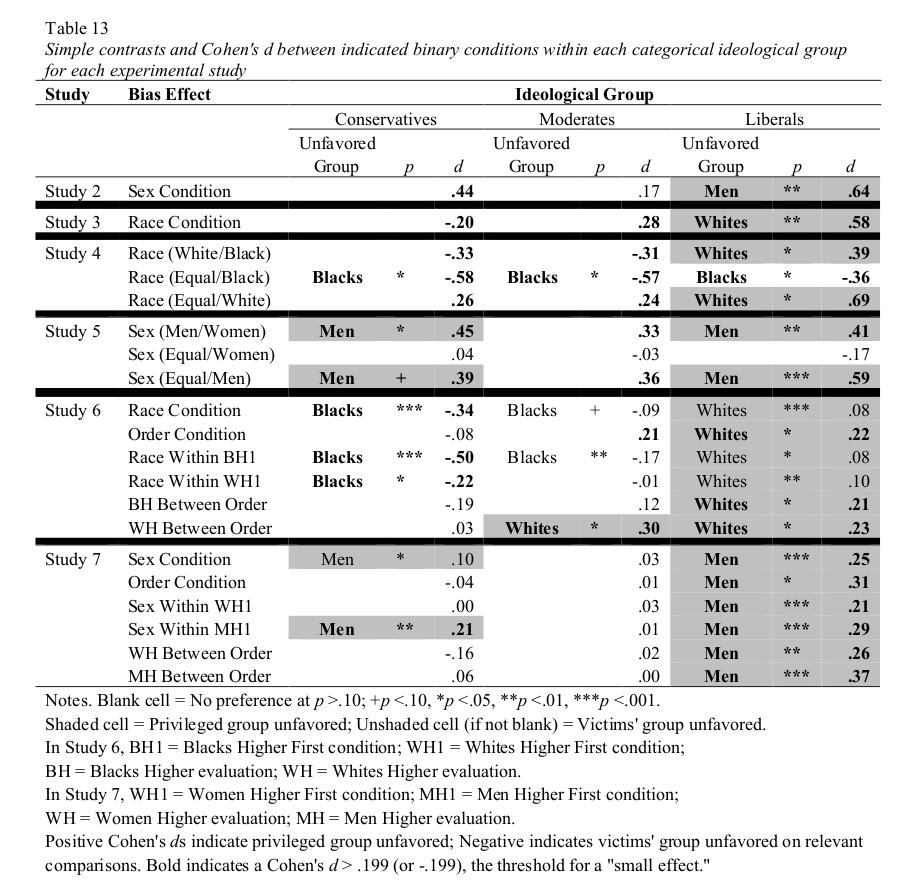

Alright, phew, a lot of results. Here’s a nice table summary:

This just shows the p < .10 ‘hits’. The leftist effect was very clear showing up 19 out of 20 times with effects against Europeans and against men. So pray you don’t do any research that makes these groups look good and have leftist readers! Conservatives were more inconsistent in the results.

What do we make of these results?

One can of course ascribe such results to bias. After all, people were given two otherwise identical descriptions of a study, and only the direction was randomly varied. How could it be due to anything else? This is the line of reasoning used by 95%+ of social science on bias, I would guess. It is faulty. People also rely on their priors. To make it easy to see, suppose you ran a study with fake studies about whether gold or wood weighs more per volume. You randomize the outcomes, and wow, people judge research showing that wood is denser to be poorly done, done by incompetent researchers etc. How can it be? People already begin with a strong belief that gold is denser, and so any study showing the opposite must be faulty in some way, and probably done by a bad researcher. This is perfectly reasonable.
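The gold-versus-wood argument can be sketched with Bayes' rule. This is only an illustration: the priors and the two study error rates below are numbers I made up, not estimates from any of the papers. The point is that the same study report moves a strong prior very differently depending on which direction it points.

```python
def posterior(prior: float, p_report_if_true: float, p_report_if_false: float) -> float:
    """Probability that a claim is true after one study reports it (Bayes' rule)."""
    numerator = prior * p_report_if_true
    return numerator / (numerator + (1.0 - prior) * p_report_if_false)


# Hypothetical study quality, identical for both fake studies:
# a study reports the claim 80% of the time when it is true,
# and 5% of the time when it is false.
P_REPORT_IF_TRUE = 0.80
P_REPORT_IF_FALSE = 0.05

# Hypothetical priors: readers are almost certain that gold is denser than wood.
gold_denser = posterior(0.999, P_REPORT_IF_TRUE, P_REPORT_IF_FALSE)
wood_denser = posterior(0.001, P_REPORT_IF_TRUE, P_REPORT_IF_FALSE)

# A reader with no prior opinion (50/50) is persuaded by the very same study.
no_opinion = posterior(0.5, P_REPORT_IF_TRUE, P_REPORT_IF_FALSE)

print(f"gold-is-denser study believed: {gold_denser:.4f}")
print(f"wood-is-denser study believed: {wood_denser:.4f}")
print(f"same study, 50/50 prior:       {no_opinion:.4f}")
```

Under these assumed numbers, the wood-is-denser study stays very unlikely to be right even though it is described identically to the gold-is-denser one, so rating it as less credible requires no bias beyond the prior itself.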

You now see the problem with the research above. I would guess that people think that women are better at drawing and lie less on average. So research showing the opposite must be suspect, done by suspect people, and for suspect reasons. It appears most people think men and women are about equally smart, so research showing something else is at fault. People are particularly hostile to men-smarter findings, which from this Bayesian perspective would imply that they hold a strong prior against this conclusion. Studies like the ones we have reviewed here can show bias insofar as one is willing to concede that research would also show bias against wood-is-more-dense-than-gold. (This is also the same error as with hiring discrimination research trying to show race biases based on unequal callback rates from otherwise identical application letters.) Humans do not rate research in isolation, but take into account the context and background beliefs. To do otherwise would be decoupling, but most people are very poor at this. Overall, men seem to be better at this than women, due to their higher systemizing/autism. Since decoupling leads one to opinions that may be contrary to general opinion in a society, it is not surprising that small brain communist PZ Myers has written apparently the only criticism of this concept.

One major limitation of the research above is that it was all done using online samples. These samples have a known leftist skew in their politics, so they will tend to show more or stronger effects of leftist bias, or simply have low statistical power/precision for conservatives. Strangely, the authors didn’t seem to realize this issue, though they did measure the ideology of their participants. It is one of the reasons why the meta-analysis table from the Winegard et al study has so many empty cells for non-leftists: there weren’t enough of them in the studies to reach the significance threshold.
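To get a feel for how much a lopsided sample costs, here is a rough power calculation using a normal approximation to a two-group comparison. The effect size and the group sizes are assumptions picked for illustration, not values taken from any of the studies.

```python
from statistics import NormalDist


def two_group_power(effect_size_d: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample z-test for a standardized mean difference."""
    z = NormalDist()
    z_crit = z.inv_cdf(1.0 - alpha / 2.0)
    # Expected z-statistic when the true standardized difference is effect_size_d.
    shift = effect_size_d * (n_per_group / 2.0) ** 0.5
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


# Hypothetical scenario: the same true effect (d = 0.4) in both ideological groups,
# but 100 leftists per condition versus only 20 conservatives per condition.
ASSUMED_D = 0.4
power_leftists = two_group_power(ASSUMED_D, n_per_group=100)
power_conservatives = two_group_power(ASSUMED_D, n_per_group=20)

print(f"power with 100 per condition: {power_leftists:.2f}")
print(f"power with 20 per condition:  {power_conservatives:.2f}")
```

With these made-up inputs, the larger group detects the effect most of the time while the smaller group misses it most of the time, even though the true effect is identical. An empty cell for conservatives is therefore weak evidence of no effect.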

In the same vein, it is weird that they only used general population samples, and not researchers. Surely, the more important thing for science would be to know or show that these preferences are not limited to the general population. In other words, if you wrote two scientific articles, but only changed the direction of the result, scientists would probably rate the one finding results they believe in to be more statistically sound etc. Insofar as peer review ratings of statistics are supposed to be objective indicators that don’t take background factors into account (decoupled!), this would show a major failure of the peer review system as it works currently. I realize that sampling academics is more difficult, but one can actually sample several hundred professors on Prolific, and probably some thousands of PhD students, or people who hold PhD degrees. I know because we did this before. I think redoing some of these studies but with academic samples is a high priority research task.

Edited to add: actual scientist ratings

Noah Carl points out that about 2 weeks ago, he wrote a summary of a study doing the thing I just said should be done. I probably read the blogpost too, but forgot!

- Finseraas, H., Midtbøen, A. H., & Thorbjørnsrud, K. (2022). Ideological biases in research evaluations? The case of research on majority–minority relations. Scandinavian Political Studies.

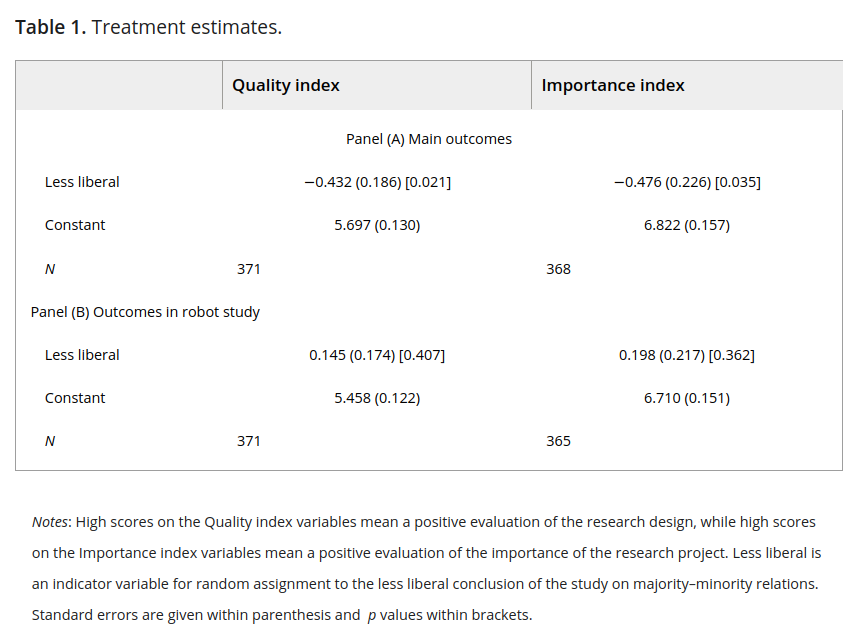

Social science researchers tend to express left-liberal political attitudes. The ideological skew might influence research evaluations, but empirical evidence is limited. We conducted a survey experiment where Norwegian researchers evaluated fictitious research on majority–minority relations. Within this field, social contact and conflict theories emphasize different aspects of majority–minority relations, where the former has a left-liberal leaning in its assumptions and implications. We randomized the conclusion of the research they evaluated so that the research supported one of the two perspectives. Although the research designs are the same, those receiving the social contact conclusion evaluate the quality and relevance of the design more favorably. We do not find similar differences in evaluations of a study on a nonpoliticized topic.

Their sample is 371 social scientists. They were given this study description:

A group of researchers want to study what shapes anti-immigration attitudes among native-born Norwegians. Drawing on contact and group threat theory, they are interested in whether personal contact between Norwegians and newly arrived refugees will decrease skepticism towards ethnic minorities and make attitudes to immigration more liberal (e.g. because misperceptions about unknown cultures are corrected), or have the reversed effect, leading to increased negativity towards minorities and less liberal attitudes (e.g. because perceptions about the costs of integration change). The research team conducts a randomized controlled trial to study this question. They recruit 160 native-born Norwegians that volunteer to participate in the study. 80 of the participants are randomly chosen to participate in three dialogue meetings with newly arrived refugees. The researchers collect background information about the participants before and after the dialogue meetings. Supporting group threat theory/contact theory, the researchers find that, after the dialogue meetings, the 80 participants report on average less/more liberal attitudes to immigration.

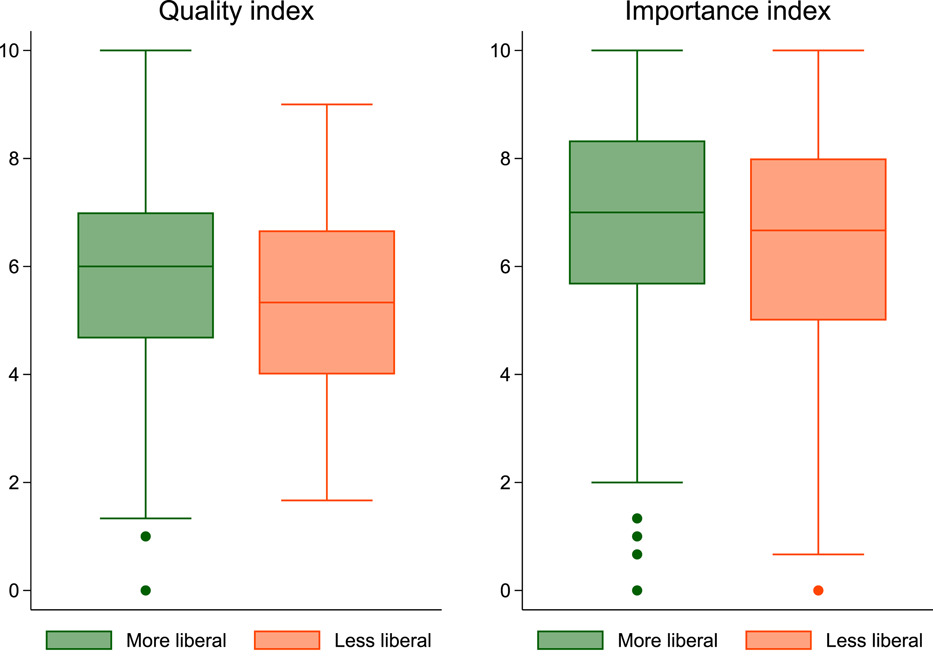

The direction was randomized across people. The results look like this:

No error bars. Are these differences reliable?

Eh, the p values are .021 and .035. The robot study mentioned was a control study without political relevance (robots used in elderly care). Basically, this study had low power. It is a pity they didn’t ask about more fictitious studies, so they could average across them for more statistical precision, or recruit more people. As it is, this study just looks p-hacked, so we gotta 🤷.
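The point about averaging across several fictitious studies can be made concrete with a back-of-the-envelope sketch. It assumes a simple model that is mine, not the authors': each rating is a stable person-level tendency plus independent vignette-level noise, and every standard deviation and sample size below is hypothetical.

```python
def se_of_group_difference(n_per_group: int, k_vignettes: int,
                           sd_person: float, sd_vignette: float) -> float:
    """Standard error of the difference between two group means when each
    participant's score is the average of k_vignettes ratings.

    Assumed model: rating = person effect + independent vignette noise.
    """
    variance_of_person_mean = sd_person ** 2 + sd_vignette ** 2 / k_vignettes
    return (2.0 * variance_of_person_mean / n_per_group) ** 0.5


# Hypothetical values: about 185 raters per condition, person SD 0.5, vignette noise SD 1.0.
one_vignette = se_of_group_difference(185, 1, sd_person=0.5, sd_vignette=1.0)
four_vignettes = se_of_group_difference(185, 4, sd_person=0.5, sd_vignette=1.0)
double_sample = se_of_group_difference(370, 1, sd_person=0.5, sd_vignette=1.0)

print(f"SE, 1 vignette, n=185 per group:  {one_vignette:.3f}")
print(f"SE, 4 vignettes, n=185 per group: {four_vignettes:.3f}")
print(f"SE, 1 vignette, n=370 per group:  {double_sample:.3f}")
```

Under these assumptions, asking each rater about four vignettes shrinks the standard error more than doubling the sample does, which is why a few extra fictitious studies per participant would have been a cheap way to buy precision. How much it helps in practice depends on how noisy single-vignette ratings really are.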