- https://notpoliticallycorrect.me/

- Twitter: RaceRealist88

I am repeatedly asked by a bunch of different people to comment on this particular blog, so here goes. I don’t know the guy, but he’s been quite active in recent times. The blog is kinda odd in that it prominently features race realist stuff, including a bunch of prominent race realist academics (Charles Murray, Arthur Jensen, JP Rushton, Richard Lynn, Linda Gottfredson), yet most of the content is actually quite critical of mainstream hereditarian views. Some examples follow.

(I should also mention that the blog has a blogroll of various HBD blogs, including this one.)

Italian north-south intelligence gap

Back in 2010, Lynn became even more notorious when he published a paper showing that the usual latitude pattern for IQ holds across regions within Italy. There were a bunch of critical replies to this paper, which one can easily locate via GScholar (110 citations as of now). Most of these were variants on the sociologist’s fallacy. For instance, the first argument given by Felice and Giugliano (2011) is that since Lynn relied on PISA test scores, the differences can just as well be explained by school quality. They then show that school quality is generally higher in the north. A typical case. Lynn would be inclined to say “yeah, of course, since smarter people build better schools”. And in any case, there is little evidence that school quality metrics actually improve scores, a finding well known since the Coleman report (1966). There is some evidence that length of schooling can increase IQ scores, though estimates vary quite a bit (contrast e.g. the Bell Curve estimate vs. others), and it is not clear how these transfer to PISA gains (it seems likely that they do), or to what extent they are increases in general intelligence vs. other stuff (see Ritchie et al 2015).

The primary post on the topic seems to be:

- https://notpoliticallycorrect.me/2016/01/31/northsouth-differences-in-italian-iq-is-richard-lynn-right/

which is prominently featured in the top menu. RR88 doesn’t want to accept that one can infer IQ from scholastic tests, despite the well known finding of correlations near unity between them for group level data. There are various other odd choices:

Literacy and average years of schooling are better predictors of income levels than regional IQs.

Where does years of education come from? Literacy in 1871 is a decent IQ proxy (see earlier post).

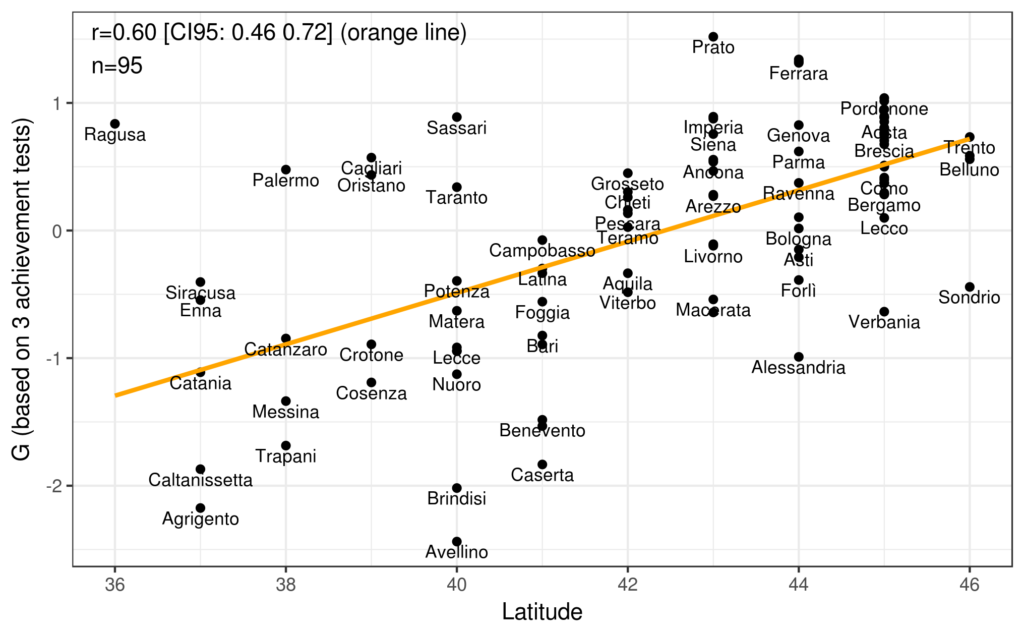

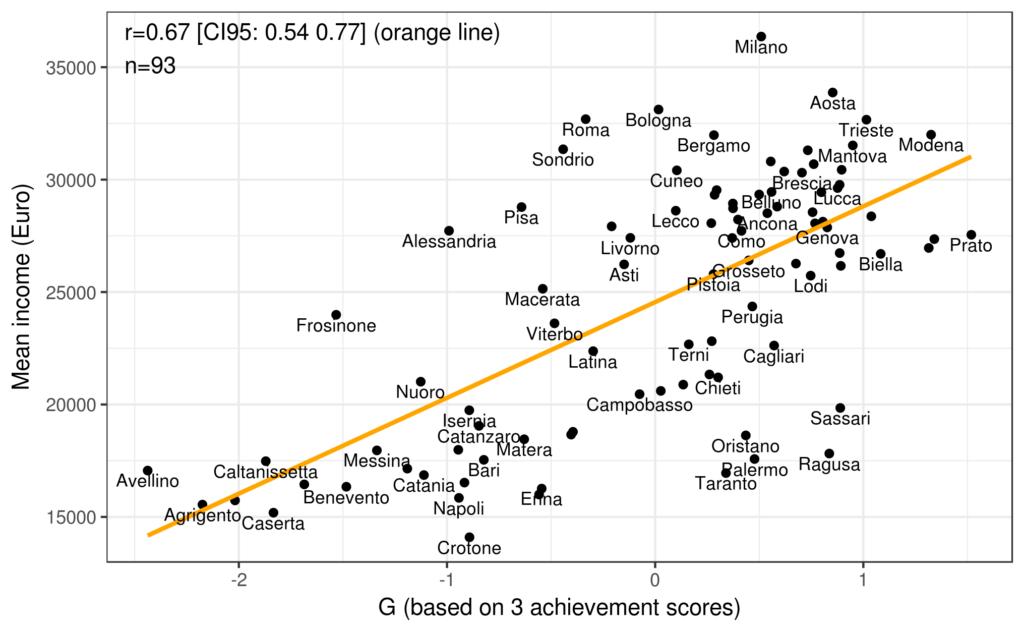

A main issue with the published studies on Italy is that they rely on the region level data (about n = 20). However, one can actually find scholastic (INVALSI) data for the province level too, about n = 100. I published some results from these a while back, but only on Twitter. Here they are. Let’s plot latitude and G (from 3 achievement tests), as well as G and income:

One can also find province level test scores from the late 1800s, so one can do a longitudinal study. Despite the fact that I posted this a few times, no one has stepped in to do anything. I will help out, but I need an active Italian collaborator.

Generally speaking, RR88 takes a quite accusatory and motive-speculative tone:

In sum, PISA is garbage to infer intelligence from as they are tests of achievement and not intelligence. Other tests of achievement show a decrease in the gap and/or Southern Italians scoring higher. Moreover, no substantial genetic differences exist between the North and the South, falsifying Lynn’s thesis for the causality of the differences between the North and the South. The oft-cited GDP difference between Northern and Southern Italy can be accounted for by the presence of the Mafia. Whenever the murder rate rises (due to Mafia activity), the GDP decreases. None of these factors have been taken into account and they explain the difference between the North and the South. It is environmental in nature–not genetic. Lynn’s Italian IQ data is garbage and should not be cited. It’s just a Nordicist fantasy that Southern Italians score lower than Nothern Italians.

It features the usual tactic: pick some outcome and propose another interpretation, usually without any supporting evidence. The obvious reply from the hereditarian view is that intelligence explains both of them, as it is the root cause of most large-scale social inequality. In the case of the black/shadow economy, one would of course expect this to be negatively related to IQ, which it also is outside Italy (country level study). These kinds of just-so sociological stories cannot explain the generality of the patterns found in a parsimonious way. There is a very rich literature on the causal role of intelligence for social outcomes using a variety of designs. There is usually no such evidence for the proposed alternative interpretation.

Lynn and world-wide IQs

- https://notpoliticallycorrect.me/2017/09/05/worldwide-iq-estimates-based-on-education-data/

This one developed into the standard insult-style, no-data kind of argumentation:

The manipulation is quite apparent, Lynn largely over-estimated China (+22), Japan (+7) to make East-Asians cluster on top, thus protecting himself from accusations of nordicism and giving support to the inter-cultural validity of the IQs that he cherry-picked. The western European and Russian data remained mostly unchanged. Vietnam (+11) and Thailand (+5) were given a bonus for their genetic proximity to North-East Asia that is supposed to make them score in the low 90s despite their lack of development. Little changes were brought to the scores of the Latin American, Middle-Eastern and Austronesian countries usually scoring in the mid-80s. Major fraud (+14 in Pakistan, +7 in Bangladesh) was done to lift up South-Asian countries out of the 70s range and excluding Sub-Saharan Africa as the only region scoring 70 or below and downgrading Nigeria (-4) and the DR. Congo (-7) in the process.

By pointing this out, I’m warning honest researchers and laymen about the dangers of relying on data resulting from undisclosed, unsystematic and un-replicable methodology. And although my estimates do not result from any actual IQ measurement beyond the relationship between IQ and schooling evidenced in Norwegian cohorts, my method uses a single, universal conversion factor applied to representative official data collected by professional demographers whereas Lynn’s and the likes’ cherry-picking of samples is only the hobby of a dozen scholars and pseudo-scholars. This is how I found out strong, consistent and meaningful correlations between IQ and various development variables.

Note, though, that this post seems to have been written by another person (Afrosapiens). It is also particularly stupid in the methods used.

Brain size and intelligence

I covered this one already. RR88 has a reply to my post. Unfortunately, he just repeats his earlier claims. There is little disagreement about the premises; the point is that the argument is invalid. Perhaps a simple numerical experiment will show this. Let’s say that variation in human intelligence is entirely caused by 3 factors: brain size, brain speed and brain efficiency. We furthermore pretend that they are equally important and uncorrelated (a quite wrong, but simplifying assumption). Thus, each will have a correlation of sqrt(1/3), or about .58, with intelligence. Suppose evolution selected for intelligence. It would do so by increasing the expected value of the trait, which is done by increasing each of the three components equally. This is based on the assumption that they carry equal evolutionary costs, i.e. energy use, developmental delays, pregnancy issues, and so on, and that they are each as easy to improve upon, i.e. have similarly easy ways to improve in the genomic space. These assumptions are probably false, but we can’t really say for now. So while one can probably increase intelligence by using only one of the evolutionary pathways, this is not how nature does it, for nature is blind. Thus, it follows that the existence of negative outliers, even quite stark ones, does not show that brain size, or speed, or efficiency were not targets of selection to improve intelligence. As I pointed out before, the same argument would show that any non-perfect physical underpinning of a trait could not have been the target of selection to improve that trait. Which is just to say that evolution is not possible except in very rare cases with 1 to 1 mapping.

I have made a small simulation to illustrate the above. It is hosted on Rpubs: http://rpubs.com/EmilOWK/brain_size_evolution_sim_2017

The TL;DR conclusion is:

(Referring to the last plot) Here we see the final point: that despite 2 generations of strong selection on the trait of interest, there are still negative outliers for brain size that have high intelligence. In this particular dataset, there is a case with -1.32 z brain size, which is about 2 d below the mean. Yet the IQ of the case is 124, 2 points above the average of the third population. How? Well, it has good z scores in the two other physical traits: speed = 3.31 z and efficiency 0.90 z.

That particular case would be classified as having microcephaly, which is sometimes defined as being more than 2 d below the mean, yet there is nothing wrong with the brain of that case.
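For readers who want to play with the idea without opening the Rpubs notebook, here is a minimal Python sketch of the same kind of experiment. It is not the code behind the Rpubs document, and the numbers will not match it. The function names, the population size, the 50% truncation cutoff, and the rule that offspring trait means equal the parents' means with SDs reset to 1 are all assumptions made for illustration.

```python
import random
from math import sqrt


def pearson(xs, ys):
    """Plain Pearson correlation, written out to avoid extra dependencies."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def make_population(n, means, rng):
    """Draw n people; each is (size, speed, efficiency) in founder z units."""
    return [tuple(rng.gauss(m, 1.0) for m in means) for _ in range(n)]


def intelligence(person):
    """Equal-weight sum of the three traits, scaled to SD 1 among founders."""
    return sum(person) / sqrt(3)


def parent_means(pop, top_fraction):
    """Truncation selection on intelligence; return the parents' trait means."""
    ranked = sorted(pop, key=intelligence, reverse=True)
    parents = ranked[: int(len(pop) * top_fraction)]
    return tuple(sum(p[i] for p in parents) / len(parents) for i in range(3))


def run_simulation(n=20000, top_fraction=0.5, generations=2, seed=1):
    rng = random.Random(seed)
    founders = make_population(n, (0.0, 0.0, 0.0), rng)
    pop = founders
    for _ in range(generations):
        # Assumption: offspring means equal parent means, trait SDs reset to 1.
        pop = make_population(n, parent_means(pop, top_fraction), rng)

    mean_size = sum(p[0] for p in pop) / n
    mean_g = sum(intelligence(p) for p in pop) / n
    # People at least 2 SD below the final mean brain size who are
    # nevertheless above the final mean in intelligence.
    outliers = [p for p in pop
                if p[0] < mean_size - 2 and intelligence(p) > mean_g]
    return {
        "r_size_g_founders": pearson([p[0] for p in founders],
                                     [intelligence(p) for p in founders]),
        "mean_size_final": mean_size,
        "mean_iq_final": 100 + 15 * mean_g,
        "n_outliers": len(outliers),
    }


result = run_simulation()
for name, value in result.items():
    print(name, round(value, 3))
```

Under these assumptions the founder correlation between brain size and intelligence should come out near sqrt(1/3), mean brain size should rise across generations, and a handful of small-brained but above-average cases should still remain in the final population.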

Doctors, intelligence and predictive validity

- https://notpoliticallycorrect.me/2017/10/04/doctors-iq-and-job-performance/

Mainly consists of quoting a bunch of stuff from Richardson. For those who are not familiar, he is a kind of Richard Nisbett-like scholar who claims that:

As we approach the centenary of the first practical intelligence test, there is still little scientific agreement about how human intelligence should be described, whether IQ tests actually measure it, and if they don’t, what they actually do measure. The controversies and debates that result are well known. This paper brings together results and theory rarely considered (at least in conjunction with one another) in the IQ literature. It suggests that all of the population variance in IQ scores can be described in terms of a nexus of sociocognitive-affective factors that differentially prepares individuals for the cognitive, affective and performance demands of the test—in effect that the test is a measure of social class background, and not one of the ability for complex cognition as such. The rest of the paper shows how such factors can explain the correlational evidence usually thought to validate IQ tests, including associations with educational attainments, occupational performance and elementary cognitive tasks, as well as the intercorrelations among tests themselves.

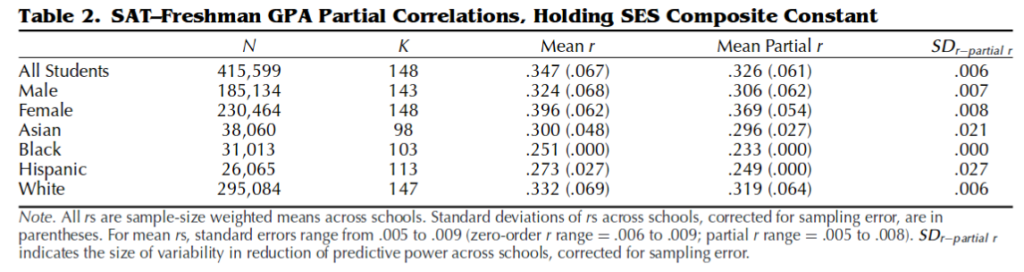

This claim was made in 2002! (A similar one in 1999.) It sounds like something from the 1970s, which is perhaps not surprising since Richardson seems to have started his decades-long anti-IQ argument back then (1972 here). There are two obvious methods one can use to examine his model and both have been used. The first is the usual approach of just including various parental measures in regressions and seeing if IQ etc. retains about the same validity. A recent, large-scale study of the SAT did just that. They found:

A stronger method is to employ a family design. In older studies, they usually compared fathers’ and sons’ IQs and social standing. This controls for upbringing by design. One can also use a sibling design, which finds about the same (Murray 1997).

Aside from quoting Richardson, he cites a study by McManus (2003):

Objective To assess whether A level grades (achievement) and intelligence (ability) predict doctors’ careers.

Design Prospective cohort study with follow up after 20 years by postal questionnaire.

Setting A UK medical school in London.

Participants 511 doctors who had entered Westminster Medical School as clinical students between 1975 and 1982 were followed up in January 2002.

Main outcome measures Time taken to reach different career grades in hospital or general practice, postgraduate qualifications obtained (membership/fellowships, diplomas, higher academic degrees), number of research publications, and measures of stress and burnout related to A level grades and intelligence (result of AH5 intelligence test) at entry to clinical school. General health questionnaire, Maslach burnout inventory, and questionnaire on satisfaction with career at follow up.

Results 47 (9%) doctors were no longer on the Medical Register. They had lower A level grades than those who were still on the register (P < 0.001). A levels also predicted performance in undergraduate training, performance in postregistration house officer posts, and time to achieve membership qualifications (Cox regression, P < 0.001; b=0.376, SE=0.098, exp(b)=1.457). Intelligence did not independently predict dropping off the register, career outcome, or other measures. A levels did not predict diploma or higher academic qualifications, research publications, or stress or burnout. Diplomas, higher academic degrees, and research publications did, however, significantly correlate with personality measures.

Conclusions Results of achievement tests, in this case A level grades, which are particularly used for selection of students in the United Kingdom, have long term predictive validity for undergraduate and postgraduate careers. In contrast, a test of ability or aptitude (AH5) was of little predictive validity for subsequent medical careers.

They found almost no validity for the AH5, an IQ test taken at entry decades earlier. However, most of the outcomes are things one would not really expect IQ to predict well: time to consultant, time to membership. There are no direct measures of job performance. The closest is research papers, but we already know that IQ works to predict these (SMPY data). So this study does not offer convincing support for the rather strong conclusion:

The fact of the matter is, job performance and IQ is on shaky ground since IQ tests are not constructed valid, and the job performance ratings are based on supervisor ratings which are highly subjective. Analyses in other locations around the world show that IQ does not predict job performance, however, motivation and effort do. IQ does not predict a doctor’s job performance; job performance tests do not prove the validity of IQ tests.

His interpretation of a second study actually commits a mistake quite similar to the one for brain size and intelligence, though in a different way:

Veena et al, (2015) show that 88 percent of medical students had near average intelligence, putting in 6 hours a day of studying, while 10 percent of students had above average IQ, spent less time studying but were sincere in their classes.

Veena et al (2015) conclude:

Students with near average IQ work hard in their studies and their academic performance was similar to students with higher IQ. So IQ can`t be made the basis for medical entrance; instead giving weight-age to secondary school results and limiting the number of attempts may shorten the time duration for entry and completion of MBBS degree.

So students with average intelligence work just as hard (if not harder) than people with above average IQ and have similar educational achievement. This shows that IQ can’t be the basis for medical school entry.

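Yes, students with above-average working habits can get away with less intelligence because both are causes of academic performance. The technical reader will note that this is a negative relationship generated by conditioning on a collider: medical students have already been selected on academic performance, so within that group the two causes trade off against each other.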
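The collider point is easy to check with a toy simulation. The sketch below is illustrative only: it assumes that intelligence and study effort are independent among applicants, that both contribute equally to prior academic performance alongside some noise, and that admission is a hard cutoff on that performance. The effect sizes and the cutoff are made up and do not come from Veena et al.

```python
import random
from math import sqrt


def pearson(xs, ys):
    """Plain Pearson correlation, written out to avoid extra dependencies."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def simulate_admission(n=50000, cutoff=1.0, seed=1):
    """Return (r among all applicants, r among admitted students)."""
    rng = random.Random(seed)
    iq = [rng.gauss(0, 1) for _ in range(n)]
    effort = [rng.gauss(0, 1) for _ in range(n)]  # independent of iq by design
    # Academic performance is the collider: caused by both iq and effort.
    performance = [a + b + rng.gauss(0, 1) for a, b in zip(iq, effort)]

    admitted = [i for i, p in enumerate(performance) if p > cutoff]
    r_all = pearson(iq, effort)
    r_admitted = pearson([iq[i] for i in admitted],
                         [effort[i] for i in admitted])
    return r_all, r_admitted


r_all, r_admitted = simulate_admission()
print("all applicants:", round(r_all, 3))
print("admitted only: ", round(r_admitted, 3))
```

Among all applicants the correlation is close to zero by construction, while among the admitted it turns clearly negative. So observing that lower-IQ medical students study more says nothing against IQ being a cause of performance.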

By the way, a related and interesting study concerns the bar exam and the performance of lawyers. I found this study some time ago:

The pass rate on the July 2016 California bar exam was the lowest since 1984. The resulting outcry from California law school deans has prompted the State Bar of California to reexamine the bar exam. The deans at schools accredited by the American Bar Association complain that the California bar exam’s passing score is too high and fails too many prospective attorneys.

But lowering the score may have significant costs. The bar exam is designed to be a test of minimum competence. Lowering the cut score means students who performed worse on the bar exam practice law. That may result in lower quality attorneys practicing in California. The California deans are skeptical that the higher cut score has a meaningful consumer protection role. The deans argue that the bar exam does not adequately measure professional competence and that the high California passing score is not necessary to ensure adequate professional responsibility and minimum competence in the practice of law.

In this Essay, we present data suggesting that lowering the bar examination passing score will likely increase the amount of malpractice, misconduct, and discipline among California lawyers. Our analysis shows that bar exam score is significantly related to likelihood of State Bar discipline throughout a lawyer’s career. We investigate these claims by collecting data on disciplinary actions and disbarments among California-licensed attorneys. We find support for the assertion that attorneys with lower bar examination performance are more likely to be disciplined and disbarred than those with higher performance.

Although our measures of bar performance only have modest predictive power of subsequent discipline, we project that lowering the cut score would result in the admission of attorneys with a substantially higher probability of State Bar discipline over the course of their careers. But we admit that our analysis is limited due to the imperfect data available to the public. For a precise calculation, we call on the California State Bar to use its internal records on bar scores and discipline outcomes to determine the likely impact of changes to the passing score.

More on Richardson

I did mention that he is like Nisbett. Here’s how:

In general

RR88’s posts generally reflect quite a bit of digging around, and so while they are often misguided, they are not lazy, and I respect hard work. Sometimes they find something interesting that others had missed. Afrosapiens’ content is best avoided, based on my limited reading.

ETA. To give an example of an area of agreement between RR88 and me: we don’t like Rushtonian r-k theory. Though, again, I have to point out the logical error at the bottom:

If Rushton’s application of the theory is wrong, then it logically follows that anything based off of his theory is wrong as well.

This fallacy is known as denying the antecedent.
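To see why the form is invalid, one can brute-force the truth table. In the sketch below, P stands for "the theory is correct" and Q for "a claim derived from it is correct"; this mapping is my reading of the quoted sentence, and the helper names are made up. Denying the antecedent argues from "if P then Q" and "not P" to "not Q".

```python
from itertools import product


def implies(p, q):
    """Material conditional: 'if p then q'."""
    return (not p) or q


def counterexamples(premises, conclusion):
    """Truth assignments where every premise holds but the conclusion fails."""
    return [(p, q) for p, q in product([True, False], repeat=2)
            if all(prem(p, q) for prem in premises) and not conclusion(p, q)]


# Denying the antecedent: (P -> Q), not P, therefore not Q.
denying_antecedent = counterexamples(
    premises=[implies, lambda p, q: not p],
    conclusion=lambda p, q: not q,
)

# Modus tollens, for contrast: (P -> Q), not Q, therefore not P.
modus_tollens = counterexamples(
    premises=[implies, lambda p, q: not q],
    conclusion=lambda p, q: not p,
)

print(denying_antecedent)  # [(False, True)]
print(modus_tollens)       # []
```

The single counterexample is the case where the theory is false but the derived claim is true anyway, which is exactly the possibility the quoted argument overlooks.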